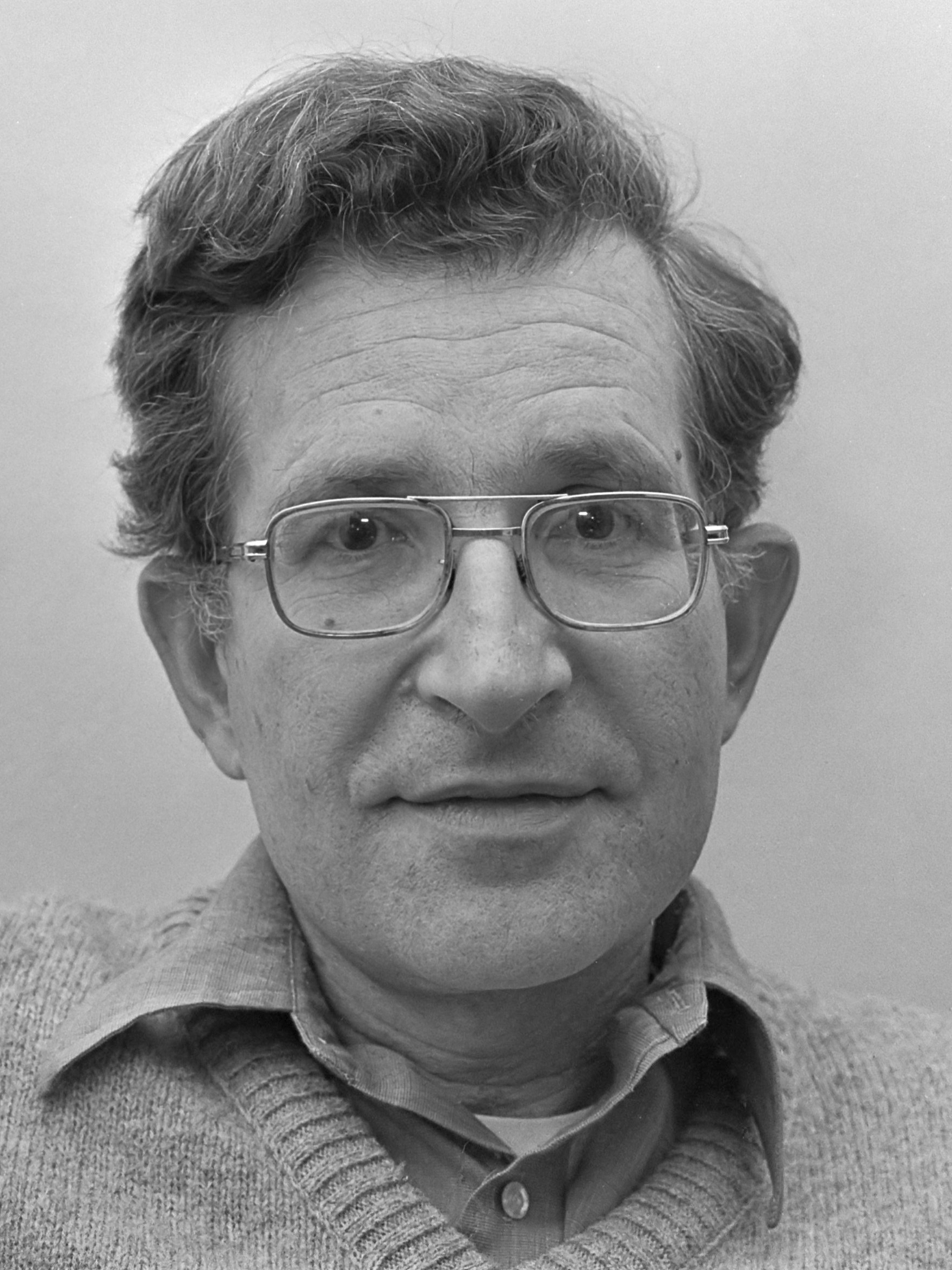

Noam Chomsky (1928 – )

Noam Chomsky is an American linguist who has had a profound impact on philosophy. Chomsky’s linguistic work has been motivated by the observation that nearly all adult human beings have the ability to effortlessly produce and understand a potentially infinite number of sentences. For instance, it is very likely that before now you have never encountered this very sentence you are reading, yet if you are a native English speaker, you easily understand it. While this ability often goes unnoticed, it is a remarkable fact that every developmentally normal person gains this kind of competence in their first few years, no matter their background or general intellectual ability. Chomsky’s explanation of these facts is that language is an innate and universal human property, a species-wide trait that develops as one matures in much the same manner as the organs of the body. A language is, according to Chomsky, a state obtained by a specific mental computational system that develops naturally and whose exact parameters are set by the linguistic environment that the individual is exposed to as a child. This definition, which is at odds with the common notion of a language as a public system of verbal signals shared by a group of speakers, has important implications for the nature of the mind.

Over decades of active research, Chomsky’s model of the human language faculty—the part of the mind responsible for the acquisition and use of language—has evolved from a complex system of rules for generating sentences to a more computationally elegant system that consists essentially of just constrained recursion (the ability of a function to apply itself repeatedly to its own output). What has remained constant is the view of language as a mental system that is based on a genetic endowment universal to all humans, an outlook that implies that all natural languages, from Latin to Kalaallisut, are variations on a Universal Grammar, differing only in relatively unimportant surface details. Chomsky’s research program has been revolutionary but contentious, and critics include prominent philosophers as well as linguists who argue that Chomsky discounts the diversity displayed by human languages.

Chomsky is also well known as a champion of liberal political causes and as a trenchant critic of United States foreign policy. However, this article focuses on the philosophical implications of his work on language. After a biographical sketch, it discusses Chomsky’s conception of linguistic science, which often departs sharply from other widespread ideas in this field. It then gives a thumbnail summary of the evolution of Chomsky’s research program, especially the points of interest to philosophers. This is followed by a discussion of some of Chomsky’s key ideas on the nature of language, language acquisition, and meaning. Finally, there is a section covering his influence on the philosophy of mind.

Table of Contents

- Life

- Philosophy of Linguistics

- The Development of Chomsky’s Linguistic Theory

- Language and Languages

- Cognitive Science and Philosophy of Mind

- References and Further Reading

1. Life

Avram Noam Chomsky was born in Philadelphia in 1928 to Jewish parents who had immigrated from Russia and Ukraine. He manifested an early interest in politics and, from his teenage years, frequented anarchist bookstores and political circles in New York City. Chomsky attended the University of Pennsylvania at the age of 16, but he initially found his studies unstimulating. After meeting the mathematical linguist Zellig Harris through political connections, Chomsky developed an interest in language, taking graduate courses with Harris and, on his advice, studying philosophy with Nelson Goodman. Chomsky’s 1951 undergraduate honors thesis, on Modern Hebrew, would form the basis of his MA thesis, also from the University of Pennsylvania. Although Chomsky would later have intellectual fallings out with both Harris and Goodman, they were major influences on him, particularly in their rigorous approach, informed by mathematics and logic, which would become a prominent feature of his own work.

After earning his MA, Chomsky spent the next four years with the Society of Fellows at Harvard, where he had applied largely because of his interest in the work of W.V.O. Quine, a Harvard professor and major figure in analytic philosophy. This would later prove to be somewhat ironic, as Chomsky’s work developed into the antithesis of Quine’s behaviorist approach to language and mind. In 1955, Chomsky was awarded his doctorate and became an assistant professor at the Massachusetts Institute of Technology, where he would continue to work as an emeritus professor even after his retirement in 2002. Throughout this long tenure at MIT, Chomsky produced an enormous volume of work in linguistics, beginning with the 1957 publication of Syntactic Structures. Although his work initially met with indifference or even hostility, including from his former mentors, it gradually altered the very nature of the field, and Chomsky grew to be widely recognized as one of the most important figures in the history of language science. Since 2017, he has been a laureate professor in the linguistics department at the University of Arizona.

Throughout his career, Chomsky has been at least as prolific in social, economic, and political criticism as in linguistics. Chomsky became publicly outspoken about his political views with the escalation of the Vietnam War, which he always referred to as an “invasion”. He was heavily involved in the anti-war movement, sometimes risking both his professional and personal security, and was arrested several times. He remained politically active and, among many other causes, was a vocal critic of US interventions in Latin America during the 1980s, the reaction to the September 2001 attacks, and the invasion of Iraq. Chomsky has opposed, since his early youth, the capitalist economic model and supported the Occupy movement of the early 2010s. He has also been an unwavering advocate of intellectual freedom and freedom of speech, a position that has at times pitted him against other left-leaning intellectuals and caused him to defend the rights of others who have very different views from his own. Despite the speculations of many biographers, Chomsky has always denied any connection between his work in language and politics, sometimes quipping that someone was allowed to have more than one interest.

In 1947, Chomsky married the linguist Carol Doris Chomsky (née Schatz), a childhood friend from Philadelphia. They had three children and remained married until her death in 2008. In 2014, Chomsky married Valeria Wasserman, a Brazilian professional translator.

2. Philosophy of Linguistics

Chomsky’s approach to linguistic science, indeed his entire vision of what the subject matter of the discipline consists of, is a sharp departure from the attitudes prevalent in the mid-20th century. To simplify, prior to Chomsky, language was studied as a type of communicative behavior, an approach that is still widespread among those who do not accept Chomsky’s ideas. In contrast, his focus is on language as a type of (often unconscious) knowledge. The study of language has, as Chomsky states, three aspects: determining what the system of knowledge a language user has consists of, how that knowledge is acquired, and how that knowledge is used. A number of points in Chomsky’s approach are of interest to the philosophy of linguistics and to the philosophy of science more generally, and some of these points are discussed below.

a. Behaviorism and Linguistics

When Chomsky was first entering academia in the 1950s, the mainstream school of linguistics for several decades had been what is known as structuralism. The structuralist approach, endorsed by Chomsky’s mentor Zellig Harris, among others, concentrated on analyzing corpora, or records of the actual use of a language, either spoken or written. The goal of the analysis was to identify patterns in the data that might be studied to yield, among other things, the grammatical rules of the language in question. Reflecting this focus on language as it is used, structuralists viewed language as a social phenomenon, a communicative tool shared by groups of speakers. Structuralist linguistics might well be described as consisting of the study of what happens between a speaker’s mouth and a listener’s ear; as one well-known structuralist put it, “the linguist deals only with the speech signal” (Bloomfield, 1933: 32). This is in marked contrast to Chomsky and his followers, who concentrate on what is going on in the mind of a speaker and who look there to identify grammatical rules.

Structuralist linguistics was itself symptomatic of behaviorism, a paradigm prominently championed in psychology by B.F. Skinner and in philosophy by W.V.O. Quine and which was dominant in the midcentury. Behaviorism held that science should restrict itself to observable phenomena. In psychology, this meant seeking explanations entirely in terms of external behavior without discussing minds, which are, by their very nature, unobservable. Language was to be studied in terms of subjects’ responses to stimuli and their resulting verbal output. Behaviorist theories were often formed on the basis of laboratory experiments in which animals were conditioned by being given food rewards or tortured with electric shock in order to shape their behavior. It was thought that human behavior could be similarly explained in terms of conditioning that shapes reactions to specific stimuli. This approach perhaps reached its zenith with the publication of Skinner’s Verbal Behavior (1957), which sought to reduce human language to conditioned responses. According to Skinner, speakers are conditioned as children, through training by adults, to respond to stimuli with an appropriate verbal response. For example, a child might realize that if they see a piece of candy (the stimulus) and respond by saying “candy”, they might be rewarded by adults with the desired sweet, reinforcing that particular response. For an adult speaker, the pattern of stimuli and response could be very complex, and what specific aspect of a situation is being responded to might be difficult to ascertain, but the underlying principle was held to be the same.

Chomsky’s scathing 1959 review of Verbal Behavior has actually become far better known than the original book. Although Chomsky conceded to Skinner that the only data available for the study of language consisted of what people say, he denied that meaningful explanations were to be found at that level. He argued that in order to explain a complex behavior, such as language use, exhibited by a complex organism such as a human being, it is necessary to inquire into the internal organization of the organism and how it processes information. In other words, it was necessary to make inferences about the language user’s mind. Elsewhere, Chomsky likened the procedure of studying language to what engineers would do if confronted with a hypothetical “black box”, a mysterious machine whose input and output were available for inspection but whose internal functioning was hidden. Merely detecting patterns in the output would not be accepted as real understanding; instead, that would come from inferring what internal processes might be at work.

Chomsky particularly criticized Skinner’s theory that utterances could be classified as responses to subtle properties of an object or event. The observation that human languages seem to exhibit stimulus-freedom goes back at least to Descartes in the 17th century, and about the same time as Chomsky was reviewing Skinner, the linguist Charles Hockett (later one of Chomsky’s most determined critics) suggested that this is one of the features that distinguish human languages from most examples of animal communication. For instance, a vervet monkey will give a distinct alarm call any time she spots an eagle and at no other times. In contrast, a human being might say anything or nothing in response to any given stimulus. Viewing a painting, one might say, “Dutch…clashes with the wallpaper…. Tilted, hanging too low, beautiful, hideous, remember our camping trip last summer? or whatever else might come to our minds when looking at a picture.” (Chomsky, 1959: 2). What aspect of an object, event, or environment triggers a particular response rather than another can only be explained in mental terms. The most relevant fact is what the speaker is thinking about, so a true explanation must take internal psychology into account.

Chomsky’s observation concerning speech was part of his more general criticism of the behaviorist approach. Chomsky held that attempts to explain behavior in terms of stimuli and responses “will be in general a vain pursuit. In all but the most elementary cases, what a person does depends in large measure on what he knows, believes, and anticipates” (Chomsky, 2006: xv). This was also meant to apply to the behaviorist and empiricist philosophy exemplified by Quine. Although Quine has remained important in other aspects of analytic philosophy, such as logic and ontology, his behaviorism is largely forgotten. Chomsky is widely regarded as having inaugurated the era of cognitive science as it is practiced today, that is, as a study of the mental.

b. The Galilean Method

Chomsky’s fundamental approach to doing science was and remains different from that of many other linguists, not only in his concentration on mentalistic explanation. One approach to studying any phenomenon, including language, is to amass a large amount of data, look for patterns, and then formulate theories to explain those patterns. This method, which might seem like the obvious approach to doing any type of science, was favored by structuralist linguists, who valued the study of extensive catalogs of actual speech in the world’s languages. The goal of the structuralists was to provide descriptions of a language at various levels, starting with the analysis of pronunciation and eventually building up to a grammar for the language that would be an adequate description of the regularities identifiable in the data.

In contrast, Chomsky’s method is to concentrate not on a comprehensive analysis but rather on certain crucial data, or data that is better explained by his theory than by its rivals. This sort of methodology is often called “Galilean”, since it takes as its model the work of Galileo and Newton. These physicists, judiciously, did not attempt to identify the laws of motion by recording and studying the trajectory of as many moving objects as possible. In the normal course of events, the exact paths traced by objects in motion are the results of the complex interactions of numerous phenomena such as air resistance, surface friction, human interference, and so on. As a result, it is difficult to clearly isolate the phenomena of interest. Instead, the early physicists concentrated on certain key cases, such as the behavior of masses in free fall or even idealized fictions such as objects gliding over frictionless planes, in order to identify the principles that, in turn, could explain the wider data. For similar reasons, Chomsky doubts that the study of actual speech, which he calls performance, will yield theoretically important insights. In a widely cited passage (Chomsky, 1962: 531), he noted that:

Actual discourse consists of interrupted fragments, false starts, lapses, slurring, and other phenomena that can only be understood as distortions of an underlying idealized pattern.

Like the ordinary movements of objects observable in nature, which Galileo largely ignored, actual speech performance is likely to be the product of a mass of interacting factors, such as the social conventions governing the speech exchange, the urgency of the message and the time available, the psychological states of the speakers (excited, panicked, drunk), and so on, of which purely linguistic phenomena will form only a small part. It is the idealized patterns concealed by these effects and the mental system that generates those patterns, the underlying competence possessed by language users, that Chomsky regards as the proper subject of linguistic study. (Although the terms competence and performance have been superseded by the I-Language/E-Language distinction, discussed in 4.c. below, these labels are fairly entrenched and still widely used.)

c. The Nature of the Evidence

Early in his career (1965), Chomsky specified three levels of adequacy that a theory of language should satisfy, and this has remained a feature of his work. The first level is observational, to determine what sentences are grammatically acceptable in a language. The second is descriptive, to provide an account of what the speaker of the language knows, and the third is explanatory, to give an explanation of how such knowledge is acquired. Only the observational level can be attained by studying what speakers actually say, which cannot provide much insight into what they know about language, much less how they came to have that knowledge. A source of information about the second and third levels, perhaps surprisingly, is what speakers do not say, and this has been a focus of Chomsky’s program. This negative data is drawn from the judgments of native speakers about what they feel they can’t say in their language. This is not, of course, in the sense of being unable to produce these strings of words or of being unable, with a little effort, to understand the intended message, but simply a gut feeling that “you can’t say that”. Chomsky himself calls these interpretable but unsayable sentences “perfectly fine thoughts”, while the philosopher Georges Rey gave them the pithier name “WhyNots”. Consider the following examples from Rey 2022 (the “*” symbol is used by linguists to mark a string that is ill-formed in that it violates some principle of grammar):

(1) * Who did John and kiss Mary? (Compared to John, and who kissed Mary? and who-initial questions like Who did John kiss?)

(2) * Who did stories about terrify Mary? (Compared to stories about who terrified Mary?)

Or the following question/answer pairs:

(3) Which cheese did you recommend without tasting it? * I recommended the brie without tasting it. (Compared to… without tasting it.)

(4) Have you any wool? * Yes, I have any wool.

An introductory linguistics textbook provides two further examples (O’Grady et al. 2005):

(5) * I went to movie. (Compared to I went to school.)

(6) * Mary ate a cookie, and then Johnnie ate some cake, too. (Compared to Mary ate a cookie, and then Johnnie ate a cookie too/ate a snack too.)

The vast majority of English speakers would balk at these sentences, although they would generally find it difficult to say precisely what the issue is (the textbook challenges the reader to try to explain). Analogous “WhyNot” sentences exist in every language yet studied.

What Chomsky holds to be significant about this fact is that almost no one, aside from those who are well read in linguistics or philosophy of language, has ever been exposed to (1)–(6) or any sentences like them. Analysis of corpora shows that sentences constructed along these lines virtually never occur, even in the speech of young children. This makes it very difficult to accept the explanation, favored by behaviorists, that we recognize them to be unacceptable as the result of training and conditioning. Since children do not produce utterances like (1)–(6), parents never have a chance to explain what is wrong, to correct them, and to tell them that such sentences are not part of English. Further, since they are almost never spoken by anyone, it is vanishingly unlikely that a parent and child would overhear them so that the parent could point them out as ill-formed. Neither is this knowledge learned through formal instruction in school. Instruction in not saying sentences like (1)–(6) is not a part of any curriculum, and an English speaker who has never attended a day of school is as capable of recognizing the unacceptability of (1)–(6) as any college graduate.

Examples can be multiplied far beyond (1)–(6); there are indefinite numbers of strings of English words (or words of any language) that are comprehensible but unacceptable. If speakers are not trained to recognize them as ill-formed, how do they acquire this knowledge? Chomsky argues that this demonstrates that human beings possess an underlying competence capable of forming and identifying grammatical structures (words, phrases, clauses, and sentences) in a way that operates almost entirely outside of conscious awareness, computing over structural features of language that are not actually pronounced or written down but which are critical to the production and understanding of sentences. This competence and its acquisition are the proper subject matter for linguistic science, as Chomsky defines the field.

d. Linguistic Structures

An important part of Chomsky’s linguistic theory (although it is an idea that predates him by several decades and is also endorsed by some rival theories) is that it postulates structures that lie below the surface of language. The presence of such structures is supported by, among other evidence, considering cases of non-linear dependency between the words in a sentence, that is, cases where a word modifies another word that is some distance away in the linear order of the sentence as it is pronounced. For instance, in the sentence (from Berwick and Chomsky, 2017: 117):

(7) Instinctively, birds who fly swim.

we know that instinctively applies to swim rather than fly, indicating an unspoken connection that bypasses the three intervening words and which the language faculty of our mind somehow detects when parsing the sentence. Chomsky’s hypothesis of a dedicated language faculty (a part of the mind existing for the sole purpose of forming and interpreting linguistic structures, operating in isolation from other mental systems) is supported by the fact that nonlinguistic knowledge does not seem to be relied on to arrive at the correct interpretation of sentences such as (7). Try replacing swim with play chess. Although you know that birds instinctively fly and do not play chess, your language faculty provides the intended meaning without any difficulty. Chomsky’s theory would suggest that this is because that faculty parses the underlying structure of the sentence rather than relying on your knowledge about birds.

According to Chomsky, the dependence of human languages on these structures can also be observed in the way that certain types of sentences are produced from more basic ones. He frequently discusses the formation of questions from declarative sentences. For instance, any English speaker understands that the question form of (8) is (9), and not (10) (Chomsky, 1986: 45):

(8) The man who is here is tall.

(9) Is the man who is here tall?

(10) * Is the man who here is tall?

What rule does a child learning English have to grasp to know this? To a Martian linguist unfamiliar with the way that human languages work, a reasonable initial guess might be to move the sixth word of the sentence to the front, which yields (9) from (8) but is obviously incorrect as a general rule. To see this, change (8) to:

(11) The man who was here yesterday was tall.

A more sophisticated hypothesis might be to move the second auxiliary verb in the sentence, is in the case of (8), to the front. But this is also not correct, as more complicated cases show:

(12) The woman who is in charge of deciding who is hired is ready to see him now.

(13) * Is the woman who is in charge of deciding who hired is ready to see him now?

In fact, in no human language do transformations from one type of sentence to another require taking the linear order of words into account, although there is no obvious reason why they shouldn’t. A language that works on a principle such as switch the first and second words of a sentence to indicate a question is certainly imaginable and would seem simple to learn, but no language yet cataloged operates in such a way.

The correct rule in the cases of (8) through (13) is that the question is formed by moving the auxiliary verb (is) occurring in the verb phrase of the main clause of the sentence, not the one in the relative clause (a clause modifying a noun, such as who is here). Thus, knowing that (9) is the correct question form of (8) or that (13) is wrong requires sensitivity to the way that the elements of a sentence are grouped together into phrases and clauses. This is something that is not apparent on the surface of either the spoken or written forms of (8) or (12), yet a speaker with no formal instruction grasps it without difficulty. It is the study of these underlying structures and the way that the mind processes them that is the core concern of Chomskyan linguistics, rather than the analysis of the strings of words actually articulated by speakers.
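The contrast between the word-counting guesses and the structure-dependent rule can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than part of Chomsky's theory: the function names are hypothetical, the set of auxiliaries is limited to the two that appear in the examples, and the division of each sentence into subject, main-clause auxiliary, and predicate is supplied by hand rather than computed by a parser.

```python
AUXILIARIES = {"is", "was"}  # only the auxiliaries used in examples (8)-(13)

def front_nth_auxiliary(words, n):
    """Structure-blind guess: move the n-th auxiliary (1-based) to the front."""
    positions = [i for i, w in enumerate(words) if w in AUXILIARIES]
    i = positions[n - 1]
    return [words[i]] + words[:i] + words[i + 1:]

def front_main_auxiliary(subject, aux, predicate):
    """Structure-dependent rule: move the main-clause auxiliary in front of
    the whole subject phrase, leaving the relative clause untouched."""
    return [aux] + subject + predicate

def show(words):
    """Format a word list as a question."""
    return " ".join(words).capitalize() + "?"

# Hand-supplied analyses of sentences (8) and (12).
s8_subject = "the man who is here".split()
s8_predicate = ["tall"]
s8 = s8_subject + ["is"] + s8_predicate

s12_subject = "the woman who is in charge of deciding who is hired".split()
s12_predicate = "ready to see him now".split()
s12 = s12_subject + ["is"] + s12_predicate

print(show(front_nth_auxiliary(s8, 2)))    # happens to give (9)
print(show(front_nth_auxiliary(s12, 2)))   # gives the ill-formed (13)
print(show(front_main_auxiliary(s12_subject, "is", s12_predicate)))
```

The auxiliary-counting rule succeeds on (8) only by accident, since it never consults the grouping of words into phrases. The structure-dependent rule needs that grouping as input, which is the point of the argument: the relevant information is not present in the linear string.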

3. The Development of Chomsky’s Linguistic Theory

Chomsky’s research program, which has grown to involve the work of many other linguists, is closely associated with generative linguistics. This name refers to the project of identifying sets of rules, or grammars, that will generate all and only the sentences of a language. Although explicit rules eventually drop out of the picture, replaced by more abstract “principles”, the goal remains to identify a system that can produce the potentially infinite number of sentences of a human language using the resources contained in the mind of a speaker, which are necessarily finite.

Chomsky’s work has implications for the study of language as a whole, but his concentration has been on syntax. This branch of linguistic science is concerned with the grammars that govern the production of sentences that are acceptable in a language and divide them from nonacceptable strings of words, as opposed to semantics, the part of linguistics concerned with the meaning of words and sentences, and pragmatics, which studies the use of language in context.

Although the methodological principles have remained constant from the start, Chomsky’s theory has undergone major changes over the years, and various iterations may seem, at least on a first look, to have little obvious common ground. Critics present this as evidence that the program has been stumbling down one dead end after another, while Chomsky asserts in response that rapid evolution is characteristic of new fields of study and that changes in a program’s guiding theory are evidence of healthy intellectual progress. Five major stages of development might be identified, corresponding to the subsections below. Each stage builds on previous ones, it has been alleged; superseded iterations should not be considered to be false but rather replaced by a more complete explanation.

a. Logical Constructivism

Chomsky’s theory of language began to be codified in the 1950s, first set down in a massive manuscript that was later published as Logical Structure of Linguistic Theory (1975) and then partially in the much shorter and more widely read Syntactic Structures (1957). These books differed significantly from later iterations of Chomsky’s work in that they were more of an attempt to show what an adequate theory of natural language would need to look like than to fully work out such a theory. The focus was on demonstrating how a small set of rules could operate over a finite vocabulary to generate an infinite number of sentences, as opposed to identifying a psychologically realistic account of the processes actually occurring in the mind of a speaker.

Even before Chomsky, since at least the 1930s, the structure of a sentence was thought to consist of a series of phrases, such as noun phrases or verb phrases. In Chomsky’s early theory, two sorts of rules governed the generation of such structures. Basic structures were given by rewrite rules, procedures that indicate the more basic constituents of structural components. For example,

S → NP VP

indicates that a noun phrase, NP, followed directly by a verb phrase, VP, constitutes a sentence, S. “NP → N” indicates that a noun may constitute a noun phrase. Eventually, the application of these rewrite rules stops when every constituent of a structure has been replaced by a syntactic element, a lexical word such as Albert or meows. Transformation rules alter those basic structures in various ways to produce structures corresponding to complex sentences. Importantly, certain transformation rules allowed recursion. This is a concept central to computer science and mathematical logic, by which a rule could be applied to its own output an unlimited number of times (for instance, in mathematics, one can start with 0 and apply the recursive function add 1 repeatedly to yield the natural numbers 0, 1, 2, 3, and so forth). The presence of recursive rules allows the embedding of structures within other structures, such as placing Albert meows under Leisa thinks to get Leisa thinks Albert meows. This could then be placed under Casey says that to produce Casey says that Leisa thinks Albert meows, and so on. Embedding could be done as many times as desired, so that recursive rules could produce sentences of any length and complexity, an important requirement for a theory of natural language. Recursion has not only remained central to subsequent iterations of Chomsky’s work but, more recently, has come to be seen as the defining characteristic of human languages.
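The way a handful of rules can yield sentences of unbounded length may be easier to see in a small sketch. It is a simplification under stated assumptions: the recursion is written directly into a rewrite rule (VP → V S) rather than into transformation rules as in the early theory, and the function names, the tuple representation of trees, and the treatment of says that as a single verb are all hypothetical choices made for illustration.

```python
def build(frames, core=("Albert", "meows")):
    """Build a tree with S -> NP VP, NP -> N, and VP -> V or VP -> V S.

    `frames` lists (noun, verb) pairs for the embedding clauses, outermost
    first; `core` is the innermost clause.
    """
    if frames:
        (noun, verb), rest = frames[0], frames[1:]
        vp = ("VP", ("V", verb), build(rest, core))  # recursive case: VP -> V S
    else:
        noun, verb = core
        vp = ("VP", ("V", verb))                     # base case: VP -> V
    return ("S", ("NP", ("N", noun)), vp)

def words(tree):
    """Read the lexical words off a tree, left to right."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return [children[0]]
    return [w for child in children for w in words(child)]

print(" ".join(words(build([]))))
print(" ".join(words(build([("Leisa", "thinks")]))))
print(" ".join(words(build([("Casey", "says that"), ("Leisa", "thinks")]))))
```

Because `build` calls itself inside the verb phrase, the list of embedding clauses can be as long as desired, while the set of rules stays fixed and finite.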

Chomsky’s interest in rules that could be represented as operations over symbols reflected influence from philosophers inclined towards formal methods, such as Goodman and Quine. This is a central feature of Chomsky’s work to the present day, even though subsequent developments have also taken psychological realism into account. Some of Chomsky’s most impactful research from his early career (late 50s and early 60s) was the invention of formal language theory, a branch of mathematics dealing with languages consisting of an alphabet of symbols from which strings could be formed in accordance with a formal grammar, a set of specific rules. The Chomsky Hierarchy provides a method of classifying formal languages according to the complexity of the strings that could be generated by the language’s grammar (Chomsky 1956). Chomsky was able to demonstrate that natural human languages could not be produced by the lowest level of grammar on the hierarchy, contrary to many linguistic theories popular at the time. Formal language theory and the Chomsky Hierarchy have continued to have applications both in linguistics and elsewhere, particularly in computer science.
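A standard textbook illustration, not taken from this article, of why the lowest level of the hierarchy is limited is the formal language consisting of some number of a symbols followed by exactly the same number of b symbols. Two recursive rules generate it, but recognizing it requires counting without any fixed upper bound, which a device with a fixed, finite number of states cannot do. The sketch below assumes this example language; the function names are hypothetical.

```python
def generate(n):
    """Derive n a's followed by n b's using S -> a S b and S -> (empty)."""
    return "" if n == 0 else "a" + generate(n - 1) + "b"

def recognize(s):
    """Accept exactly the strings of n a's followed by n b's.

    The counter can grow without limit, which is the resource a
    finite-state device lacks.
    """
    count = 0
    i = 0
    while i < len(s) and s[i] == "a":   # count the leading a's
        count += 1
        i += 1
    while i < len(s) and s[i] == "b":   # match each b against an a
        count -= 1
        i += 1
        if count < 0:
            return False
    return i == len(s) and count == 0

print([generate(n) for n in range(4)])
print(recognize("aaabbb"), recognize("aabbb"), recognize("abab"))
```

The nesting in the generated strings, where each a is paired with a b on the far side of everything in between, is loosely analogous to the dependencies between distant words in natural-language sentences.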

b. The Standard Model

Chomsky’s 1965 landmark work, Aspects of the Theory of Syntax, which devoted much space to philosophical foundations, introduced what later became known as the “Standard Model”. While the theory itself was in many respects an extension of the ideas contained in Syntactic Structures, there was a shift in explanatory goals as Chomsky addressed what he calls “Plato’s Problem”, the mystery of how children can learn something as complex as the grammar of a natural language from the sparse evidence they are presented with. The sentences of a human language are infinite in number, and no child ever hears more than a tiny subset of them, yet they master the grammar that allows them to produce every sentence in their language. (“Plato’s Problem” is an allusion to Plato’s Meno, a discussion of similar puzzles surrounding geometry. Section 4.b provides a fuller discussion of the issue as well as more recent developments in Chomsky’s model of language acquisition.) This led Chomsky, inspired by early modern rationalist philosophers such as Descartes and Leibniz, to postulate innate mechanisms that would guide a child in this process. Every human child was held to be born with a mental system for language acquisition, operating largely subconsciously, preprogrammed to recognize the underlying structure of incoming linguistic signals, identify possible grammars that could generate those structures, and then to select the simplest such grammar. It was never fully worked out how, on this model, possible grammars were to be compared, and this early picture has subsequently been modified, but the idea of language acquisition as relying on innate knowledge remains at the heart of Chomsky’s work.

An important idea introduced in Aspects was the existence of two levels of linguistic structure: deep structure and surface structure. A deep structure contains structural information necessary for interpreting sentence meaning. Transformations on a deep structure (moving, deleting, and adding elements in accordance with the grammar of a language) yield a surface structure that determines the way that the sentence is pronounced. Chomsky explained (in a 1968 lecture) that,

If this approach is correct in general, then a person who knows a specific language has control of a grammar that generates the infinite set of potential deep structures, maps them onto associated surface structures, and determines the semantic and phonetic interpretations of these abstract objects (Chomsky, 2006: 46).

Note that, for Chomsky, the deep structure was a grammatical object that contains structural information related to meaning. This is very different from conceiving of a deep structure as a meaning itself, although a theory to that effect, generative semantics, was developed by some of Chomsky’s colleagues (initiating a debate acrimonious enough to sometimes be referred to as “the linguistic wars”). The names and exact roles of the two levels would evolve over time, and they were finally dropped altogether in the 1990s (although this is not always noticed, a matter that sometimes confuses the discussion of Chomsky’s theories).

Aspects was also notable for the introduction of the competence/performance distinction, or the distinction between the underlying mental systems that give a speaker mastery of her language (competence) and her actual use of the language (performance), which will seldom fully reflect that mastery. Although these terms have technically been superseded by E-language and I-language (see 4.c), they remain useful concepts in understanding Chomsky’s ideas, and the vocabulary is still frequently used.

c. The Extended Standard Model

Throughout the 1970s, a number of technical changes, aimed at simplification and consolidation, were made to the Standard Model set out in Aspects. These gradually led to what became known as the “Extended Standard Model”. The grammars of the Standard Model contained dozens of highly specific transformation rules that successively rearranged elements of a deep structure to produce a surface structure. Eventually, a much simpler and more empirically adequate theory was arrived at by postulating only a single operation that moved any element of a structure to any place in that structure. This operation, move α, was subject to many “constraints” that limited its applications and therefore restrained what could be generated. For instance, under certain conditions, parts of a structure form “islands” that block movement (as when who is blocked from moving from the conjunction in John and who had lunch? to give *Who did John and have lunch?). Importantly, the constraints seemed to be highly consistent across human languages.

Grammars were also simplified by cutting out information that seemed to be specified in the vocabulary of a language. For example, some verbs must be followed by nouns, while others must not. Compare I like coffee and She slept to *I like and *She slept a book. Knowing which of these strings are correct is part of knowing the words like and slept, and it seems that a speaker’s mind contains a sort of lexicon, or dictionary, that encodes this type of information for each word she knows. There is no need for a rule in the grammar to state that some verbs need an object and others do not, which would just be repeating information already in the lexicon. The properties of the lexical items are therefore said to “project” onto the grammar, constraining and shaping the structures available in a language. Projection remains a key aspect of the theory, so that lexicon and grammar are thought to be tightly integrated.
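The idea that such requirements live in the lexicon rather than in the grammar can be sketched as follows. The dictionary layout and the names LEXICON, needs_object, and well_formed are hypothetical choices made for this illustration, and a real lexical entry would carry far more information.

```python
# Each verb's entry records whether it requires an object (assumed format).
LEXICON = {
    "like": {"needs_object": True},
    "slept": {"needs_object": False},
}


def well_formed(subject, verb, obj=None):
    """Accept a clause only if the verb's lexical requirement is met.

    The check itself mentions no particular verb: the constraint is
    "projected" from the lexical entry.
    """
    return LEXICON[verb]["needs_object"] == (obj is not None)


print(well_formed("I", "like", "coffee"))      # I like coffee
print(well_formed("She", "slept"))             # She slept
print(well_formed("I", "like"))                # *I like
print(well_formed("She", "slept", "a book"))   # *She slept a book
```

Adding a new verb means adding one lexical entry; the checking function stays the same, which is the sense in which the grammar does not need to repeat what the lexicon already states.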

Chomsky has frequently described a language as a mapping from meaning to sound. Around the time of the Extended Standard Model, he introduced a schema whereby grammar forms a bridge between the Phonetic Form, or PF, the form of a sentence that would actually be pronounced, and the Logical Form, or LF, which contained the structural specification of a sentence necessary to determine meaning. To consider an example beloved by introductory logic teachers, Everyone loves someone might mean that each person loves some person (possibly a different person in each case), or it might mean that there is some one person that everyone loves. Although these two readings share a single PF, they have different LFs.

Linking the idea of LF and PF to that of deep structure and surface structure (now called D-structure and S-structure, and with somewhat altered roles) gives the “T-model” of language:

D-structure

|

transformations

|

PF – S-Structure – LF

As the diagram indicates, the grammar generates the D-structure, which contains the basic structural relations of the sentence. The D-structure undergoes transformations to arrive at the S-structure, which differs from the PF in that it still contains unpronounced “traces” in places previously occupied by an element that was then moved elsewhere. The S-structure is then interpreted two ways: phonetically as the PF and semantically as the LF. The PF is passed from the language system to the cognitive system responsible for producing actual speech. The LF, which is not a meaning itself but contains structural information needed for semantic interpretation, is passed to the cognitive system responsible for semantics. This idea of syntactic structures and transformations over those structures as mediating between meaning and physical expression has been further developed and simplified, but the basic concept remains an important part of Chomsky’s theories.

d. Principles and Parameters

In the 1980s, the Extended Standard Model would develop into what is perhaps the best known iteration of Chomskyan linguistics, what was first referred to as “Government and Binding”, after Chomsky’s book Lectures on Government and Binding (1981). Chomsky developed these ideas further in Barriers (1986), and the theory took on the more intuitive name “Principles and Parameters”. The fundamental idea was quite simple. As with previous versions, human beings have in their minds a computational system that generates the syntactic structures linking meanings to sounds. According to Principles and Parameters Theory, all of these systems share certain fixed settings (principles) for their core components, explaining the deep commonalities that Chomsky and his followers see between human languages. Other elements (parameters) are flexible and have values that are set during the language learning process, reflecting the variations observable across different languages. An analogy can be made with an early computer of the sort that was programmed by setting the position of switches on a control panel: the core, unchanging, circuitry of the computer is analogous to principles, the switches to parameters, and the program created by one of the possible arrangements of the switches to a language such as English, Japanese, or St’at’imcets (although this simple picture captures the essence of early Principles and Parameters, the details are a great deal more complicated, especially considering subsequent developments).

Principles are the core aspects of language, including the dependence on underlying structure and lexical projection, features that the theory predicts will be shared by all natural human languages. Parameters are aspects with binary settings that vary from language to language. Among the most widely discussed parameters, which might serve as convenient illustrations, are the Head and Pro-Drop parameters.

A head is the key element that gives a phrase its name, such as the noun in a noun phrase. The rest of the phrase is the complement. It can be observed that in English, the head comes before the complement, as in the noun phrase medicine for cats, where the noun medicine is before the complement for cats; in the verb phrase passed her the tea, the verb passed is first, and in the prepositional phrase in his pocket, the preposition in is first. But consider the following Japanese sentence (Cook and Newsom, 1996: 14):

| (14) | E | wa | kabe | ni | kakatte | imasu |

| | picture | [subject marker] | wall | on | hanging | is |

The picture is hanging on the wall.

Notice that the head of the verb phrase, the verb kakatte imasu, is after its complement, kabe ni, and ni (on) is a postposition that occurs after its complement, kabe. English and Japanese thus represent different settings of a parameter, the Head, or Head Directionality, Parameter. Although this and other parameters are set during a child’s development by the language they hear around them, it seems that very little exposure is needed to fix the correct value. It is taken as evidence of this that mistakes with head positioning are vanishingly rare; English-speaking children almost never make mistakes like *The picture the wall on is at any point in their development.
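The effect of the Head Parameter can be sketched as a single binary switch applied uniformly to every phrase. In this toy, a phrase is represented as a (head, complement) pair; that representation, the function name linearize, and the flag head_initial are assumptions made for illustration, and multi-word strings such as kakatte imasu are treated as unanalyzed units.

```python
def linearize(phrase, head_initial):
    """Turn a phrase into a word list, ordering head and complement
    according to one parameter setting used for every phrase type."""
    if isinstance(phrase, str):
        return [phrase]
    head, complement = phrase
    head_words = linearize(head, head_initial)
    complement_words = linearize(complement, head_initial)
    if head_initial:
        return head_words + complement_words
    return complement_words + head_words


# (head, complement) pairs: a verb phrase containing an adpositional phrase.
english_vp = ("is hanging", ("on", "the wall"))
japanese_vp = ("kakatte imasu", ("ni", "kabe"))

print(" ".join(linearize(english_vp, head_initial=True)))
print(" ".join(linearize(japanese_vp, head_initial=False)))
print(" ".join(linearize(("medicine", ("for", "cats")), head_initial=True)))
```

One switch fixes the order inside the verb phrase and inside the adpositional phrase at once, so no separate ordering statement is needed for each phrase type.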

The Pro-Drop Parameter explains the fact that certain languages can leave the pronoun subjects of a sentence implied, or up to context. For instance, in Italian, a pro-drop language, the following sentences are permitted (Cook and Newsom, 1996: 55).

| (15) | Sono | il | tricheco |

| | be (1st-person-present) | the | walrus |

I am the walrus.

| (16) | E’ | pericoloso | sporger- | si |

| | be (3rd-person-present) | dangerous | lean out- | (reflexive) |

It is dangerous to lean out. [a warning posted on trains]

On the other hand, the direct English translations *Am the walrus and *Is dangerous to lean out are ungrammatical, reflecting a different parameter setting, “non-pro-drop”, which requires an explicit subject for sentences.

A number of other, often complex, differences beyond whether subjects must be included in all sentences were thought to come from the settings of Pro-Drop and the way it interacts with other parameters. For example, it has been observed that many pro-drop languages allow the normal order of subjects and verbs to be inverted; Cade la notte is acceptable in Italian, unlike its direct translation in English, *Falls the night. However, this feature is not universal among pro-drop languages, and it was theorized that whether it is present or not depends on the settings of other parameters.

Examples such as these reflect the general theme of Principles and Parameters, in which “rules” of the sort that had been postulated in Chomsky’s previous work are no longer needed. Instead of syntactical rules present in a speaker’s mental language faculty, the particular grammar of a language was hypothesized to be the result of the complex interaction of principles, the setting of parameters, and the projection properties of lexical items. As a relatively simple example, there is no need for an English-speaking child to learn a bundle of related rules such as noun first in a noun phrase, verb first in a verb phrase, and so on, or for a Japanese-speaking child to learn the opposite rules for each type of phrase; all of this is covered by the setting of the Head Parameter. As Chomsky (1995: 388) puts it,

A language is not, then, a system of rules but a set of specifications for parameters in an invariant system of principles of Universal Grammar. Languages have no rules at all in anything like the traditional sense.

This outlook represents an important shift in approach, which is often not fully appreciated by philosophers and other non-specialists. Many scholars assume that Chomsky and his followers still regard languages as particular sets of rules internally represented by speakers, as opposed to principles that are realized without being explicitly represented in the brain.

This outlook led many linguists, especially during the last two decades of the 20th century, to hope that the resemblances and differences between individual languages could be neatly explained by parameter settings. Language learning also seemed much less puzzling, since it was now thought to be a matter, not of learning complex sets of rules and constraints, but rather of setting each parameter, of which there were at one time believed to be about twenty, to the correct value for the local language, a process that has been compared to the children’s game of “twenty questions”. It was even speculated that a table could be established where languages could be arranged by their parameter settings, in analogy to the periodic table on which elements could be placed and their chemical properties predicted by their atomic structures.

Unfortunately, as the program developed, things did not prove so simple. Researchers failed to reach a consensus on what parameters there are, what values they can take, and how they interact, and there seemed to be vastly more of them than initially believed. Additionally, parameters often failed to have the explanatory power they were envisioned as having. For example, as discussed above, it was originally claimed that the Pro-Drop parameter explained a large number of differences between languages with opposite settings for that parameter. However, these predictions were made on the basis of an analysis of several related European languages and were not fully borne out when checked against a wider sample. Many linguists now see the parameters themselves as emerging from the interactions of “microparameters” that explain the differences between closely related dialects of the same language and which are often found in the properties of individual words projecting onto the syntax. There is ongoing debate as to the explanatory value of parameters as they were originally conceived.

During the Principles and Parameters era, Chomsky sharpened the notions of competence and performance into the dichotomy of I-languages and E-languages. The former is a state of the language system in the mind of an individual speaker, while the latter, which corresponds to the common notion of a language, is a publicly shared system such as “English”, “French”, or “Swahili”. Chomsky was sharply critical of the study of E-languages, deriding them as poorly defined entities that play no role in the serious study of linguistics. This is a controversial attitude, as E-languages are what many linguists regard as precisely the subject matter of their discipline. It remains an important point in his work and will be discussed more fully in 4.c below.

e. The Minimalist Program

From the beginning, critics have argued that the rule systems Chomsky postulated were too complex to be plausibly grasped by a child learning a language, even if important parts of this knowledge were innate. Initially, the replacement of rules by a limited number of parameters in the Principles and Parameters paradigm seemed to offer a solution, as by this theory, instead of an unwieldy set of rules, the child needed only to grasp the setting of some parameters. But, while it was initially hoped that twenty or so parameters might be identified, the number has increased to the point where, although there is no exact consensus, it is too large to offer much hope of providing a simple explanation of language learning, and microparameters further complicate the picture.

The Minimalist Program was initiated in the mid-1990s partially to respond to such criticisms by continuing the trend towards simplicity that had begun with the Extended Standard Model, with the goal of the greatest possible degree of elegance and parsimony. The minimalist approach is regarded by advocates not as a full theory of syntax but rather as a program of research working towards such a theory, building on the key features of Principles and Parameters.

In the Minimalist Program, syntactic structures corresponding to sentences are constructed using a single operation, Merge, that combines a head with a complement, for example, merging Albert with will meow to give Albert will meow. Importantly, Merge is recursive, so that it can be applied over and over to give sentences of any length. For instance, the sentence just discussed can be merged with thinks to give thinks Albert will meow and then again with Leisa to form the sentence Leisa thinks Albert will meow. Instead of elements within a structure moving from one place to another, a structure merges with an element already inside of it and then deletes redundant elements; a question can be formed from Albert will meow by first producing will Albert will meow, and finally will Albert meow? In order to prevent the production of ungrammatical strings, Merge must be constrained in various ways. The main constraints are postulated to be lexical, coming from the syntactic features of the words in a language. These features control which elements can be merged together, which cannot, and when merging is obligatory, for instance, to provide an object for a transitive verb.
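The examples in this paragraph can be mimicked with a toy version of Merge that simply pairs two syntactic objects. This is a loose sketch and not the technical definition used in the minimalist literature: the tuple representation, the helper names spell_out and delete_lower_copies, and the omission of lexical features that constrain Merge are all simplifying assumptions.

```python
def merge(a, b):
    """Combine two syntactic objects into a single new object."""
    return (a, b)


def spell_out(obj):
    """Flatten a merged structure into its words, left to right."""
    if isinstance(obj, str):
        return [obj]
    words = []
    for part in obj:
        words.extend(spell_out(part))
    return words


def delete_lower_copies(words, moved):
    """Keep only the first (highest) occurrence of a re-merged element."""
    result, seen = [], False
    for word in words:
        if word == moved:
            if seen:
                continue
            seen = True
        result.append(word)
    return result


clause = merge("Albert", merge("will", "meow"))
embedded = merge("Leisa", merge("thinks", clause))   # Merge applied to its own output
print(" ".join(spell_out(embedded)))

# Question formation: re-merge an element already inside the structure,
# then delete the redundant lower copy.
with_copy = spell_out(merge("will", clause))
print(" ".join(with_copy))
print(" ".join(delete_lower_copies(with_copy, "will")))
```

Since the output of merge is itself a valid input to merge, the operation can be repeated without limit, which is the sense in which it is recursive.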

During the Minimalist Program era, Chomsky has worked on a more specific model for the architecture of the language faculty, which he divides into the Faculty of Language, Broad (FLB) and the Faculty of Language, Narrow (FLN). The FLN is the syntactic computational system that had been the subject of Chomsky’s work from the beginning, now envisioned as using a single operation, that of recursive Merge. The FLB is postulated to include the FLN, but additionally the perceptual-articulatory system that handles the reception and production of physical messages (spoken or signed words and sentences) and the conceptual-intentional system that handles interpreting the meaning of those messages. In a schema similar to a flattened version of the T-model, the FLN forms a bridge between the other systems of the FLB. Incoming messages are given a structural form by the FLN that is passed to the conceptual-intentional system to be interpreted, and the reverse process allows thoughts to be articulated as speech. The different structural levels, D-structure and S-structure, of the T-model are eliminated in favor of maximal simplicity (the upside-down T is now just a flat line). The FLN is held to have a single level on which structures are derived through Merge, and two interfaces connected to the other parts of the FLB.

One important implication of this proposed architecture is the special role of recursion. The perceptual-articulatory system and conceptual-intentional system have clear analogs in other species, many of whom can obviously sense and produce signals and, in at least some cases, seem to be able to link meanings to them. Chomsky argues that, in contrast, recursion is uniquely human and that no system of communication among non-human animals allows users to limitlessly combine elements to produce a potential infinity of messages. In many ways, Chomsky is just restating what had been an important part of his theory from the beginning, which is that human language is unique in being productive or capable of expressing an infinity of different meanings, an insight he credits to Descartes. This makes recursion the characteristic aspect of human language that sets it apart from anything else in the natural world, and a central part of what it is to be human.

The status of recursion in Chomsky’s theory has been challenged in various ways, sometimes with the claim that some human language has been observed to be non-recursive (discussed below, in 4.a). That recursion is a uniquely human ability has also been called into question by experiments in which monkeys and corvids were apparently trained in recursive tasks under laboratory conditions. On the other hand, it has also been suggested that if the recursive FLN really does not have any counterpart among non-human species, it is unclear how such a mechanism might have evolved. This last point is only the latest version of a long-running objection that Chomsky’s ideas are difficult to reconcile with the theory of evolution since he postulates uniquely human traits for which, it is argued by critics, there is no plausible evolutionary history. Chomsky counters that it is not unlikely that the FLN appeared as a single mutation, one that would be selected due to the usefulness of recursion for general thought outside of communication. Providing evolutionary details and exploring the relationship between the language faculty and the physical brain have formed a large part of Chomsky’s most recent work.

The central place of recursion in the Minimalist Program also brought about an interesting change in Chomsky’s thoughts on hypothetical extraterrestrial languages. During the 1980s, he speculated that alien languages would be unlearnable by human beings since they would not share the same principles as human languages. As such, one could be studied as a natural phenomenon in the way that humans study physics or biology, but it would be impossible for researchers to truly learn the language in the way that field linguists master newly encountered human languages. More recently, however, Chomsky hypothesized that since recursion is apparently the core, universal property of human language and any extraterrestrial language will almost certainly be recursive as well, alien languages may not be that different from our own, after all.

4. Language and Languages

As a linguist, Chomsky has always been concerned primarily with language. His study of this phenomenon eventually led him not only to formulate theories that were very much at odds with those held at one time by the majority of linguists and philosophers, but also to take a fundamentally different view of the thing, language, that was being studied and theorized about. Chomsky’s views have been influential, but many of them remain controversial today. This section discusses some of Chomsky’s important ideas that will be of interest to philosophers, especially concerning the nature and acquisition of language, as well as meaning and analyticity, topics that are traditionally the central concerns of the philosophy of language.

a. Universal Grammar

Perhaps the single most salient feature of Chomsky’s theory is the idea of Universal Grammar (UG). This is the central aspect of language that he argues is shared by all human beings, a part of the organization of the mind. Since it is widely assumed that mental features correspond, at some level, to physical features of the brain, UG is ultimately a biological hypothesis about something that would be part of the genetic inheritance that all humans are born with.

In terms of Principles and Parameters Theory, UG consists of the principles common to all languages and which will not change as the speaker matures. UG also consists of parameters, but the values of the parameters are not part of UG. Instead, parameters may change from their initial setting as a child grows up, based on the language she hears spoken around her. For instance, an English-speaking child will learn that every sentence must have a subject, setting her Pro-Drop parameter to a certain value, the opposite of the value it would take for a Spanish-speaking child. While the Pro-Drop parameter is a part of UG, this particular setting of the parameter is a part of English and other languages where the subject must be overtly included in the sentence. All of the differences between human languages are then differences in vocabulary and in the settings of parameters, but they are all organized around a common core given by UG.

Chomsky has frequently stated that the important aspects of human languages are set by UG. From a sufficiently detached viewpoint, for instance, that of a hypothetical Martian linguist, there would only be minor regional variations of a single language spoken by all human beings. Further, the variations between languages are predictable from the architecture of UG and can only occur within narrowly constrained limits set by that structure. This was a dramatic departure from the assumption, largely unquestioned until the mid-20th century, that languages can vary virtually without limit and in unpredictable ways. This part of Chomsky’s theory has remained controversial, with some authorities on crosslinguistic work, such as the British psycholinguist Stephen Levinson (2016), arguing that it discounts real and important differences among languages. Other linguists argue the exact contrary: that data from the study of languages worldwide backs Chomsky’s claims. Because the debate ultimately concerns invisible mental features of human beings and how they relate to unpronounced linguistic structures, the interpretation of the evidence is not straightforward, and both sides claim that the available empirical data supports their position.

The theory of UG is an important aspect of Chomsky’s ideas for many reasons, among which is that it clearly sets his theories apart from paradigms that had previously been dominant in linguistics. This is because UG is not a theory about behavior or how people use language, but instead about the internal composition of the human mind. Indeed, for Chomsky and others working within the framework of his ideas, language is not something that is spoken, signed, or written but instead exists inside of us. What many people think of as language (externalized acts of communication) is merely a product of that internal mental faculty. This in turn has further implications for theories of language acquisition (see 4.b) and how different languages should be identified (4.c).

An important implication of UG is that it makes Chomsky’s theories empirically testable. A common criticism of his work is that because it abstracts away from the study of actual language use to seek underlying idealized patterns, no evidence can ever count against it. Instead, apparent counterexamples can always be dismissed as artifacts of performance rather than the competence that Chomsky was concerned with. If correct, this would be problematic since it is widely agreed that a good scientific theory should be testable in some way. However, this criticism is often based on misunderstandings. A linguist dismissing an apparent failure of the theory as due to performance would need to provide evidence that performance factors really are involved, rather than a problem with the underlying theory of competence. Further, if a language was discovered to be organized around principles that contravened those of UG, then many of the core aspects of Chomsky’s theories would be falsified. Although candidate languages have been proposed, all of them are highly controversial, and none is anything close to universally accepted as falsifying UG.

In order to count as a counterexample to UG, a language must actually breach one of its principles; it is not enough that a principle merely not be displayed. As an example, one of the principles is what is known as structure dependence: when an element of a linguistic structure is moved to derive a different structure, that movement depends on the structure and its organization into phrases. For instance, to arrive at the correct question form of The cat who is on the desk is hungry, it is the is in the main clause, the one before hungry, that is moved to the front of the sentence, not the one in the relative clause (between who and on). However, in some languages, for instance Japanese, elements are not moved to form questions; instead, a question marker (ka) is added at the end of the sentence. This does not make Japanese a counterexample to the UG principle that movement is always structurally dependent. Japanese simply does not exercise this principle when forming questions, and so does not violate it. A counterexample to UG would be a language that moved elements but did so in a way that did not depend on structure, for instance, by always moving the third word to the front or inverting the word order to form a question.
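The difference between a structure-dependent rule and a purely linear one can be made concrete with the example sentence above. In this sketch the clause is supplied already divided into a subject phrase, a main-clause auxiliary, and a predicate; that three-part representation and both function names are assumptions made for illustration.

```python
def question_structural(subject, auxiliary, predicate):
    """Front the auxiliary of the main clause, wherever it falls in the string."""
    return [auxiliary] + subject + predicate


def question_linear(words, auxiliary="is"):
    """Front the first occurrence of the auxiliary, ignoring phrase structure."""
    position = words.index(auxiliary)
    return [auxiliary] + words[:position] + words[position + 1:]


subject = ["the", "cat", "who", "is", "on", "the", "desk"]
auxiliary = "is"
predicate = ["hungry"]

print(" ".join(question_structural(subject, auxiliary, predicate)))
print(" ".join(question_linear(subject + [auxiliary] + predicate)))
```

The structural rule yields the grammatical question, while the linear rule pulls is out of the relative clause and produces a string that English speakers reject. A language whose questions were formed by something like the second function would be the kind of counterexample the paragraph describes.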

A case that generated a great deal of recent controversy has been the claim that Pirahã, a language with a few hundred speakers in the Brazilian rain forest, lacks recursion (Everett 2005). This has been frequently presented as falsifying UG, since recursion is the most important principle, indeed the identifying feature, of human language, according to the Minimalist Program. This alleged counterexample received widespread and often incautious coverage in the popular press, at times being compared to the discovery of evidence that would disprove the theory of relativity.

This assertion that Pirahã has no recursion has itself been frequently challenged, and the status of this claim is unclear. But there is also a lack of agreement on whether, if true, this claim would invalidate UG or whether it would just be a case similar to the one discussed above, the absence of movement in Japanese when forming questions, where a principle is not being exercised. Proponents of Chomsky’s ideas counter that UG is a theory of mental organization and underlying competence, a competence that may or may not be put fully to use. The fact that the Pirahã are capable of learning Portuguese (the majority language in Brazil) shows that they have the same UG present in their minds as anyone else. Chomsky points out that there are numerous cases of human beings choosing not to exercise some sort of biological capacity that they have. Chomsky’s own example is that although humans are biologically capable of swimming, many would drown if placed in water. It has been suggested by sympathetic scholars that this example is not particularly felicitous, as swimming is not an instinctive behavior for humans, and a better example might be monks who are sworn to celibacy. Debate has continued concerning this case, with some still arguing that if a language without recursion would not be accepted as evidence against UG, it is difficult to imagine what would be.

b. Plato’s Problem and Language Acquisition

One of Chomsky’s major goals has always been to explain the way in which human children learn language. Since he sees language as a type of knowledge, it is important to understand how that knowledge is acquired. It seems inexplicable that children acquire something as complex as the grammar and vocabulary of a language, let alone the speed and accuracy with which they do so, at an age when they cannot yet learn how to tie their shoes or do basic arithmetic. The mystery is deepened by the difficulty that adults, who are usually much better learners than small children, have with acquiring a second language.

Chomsky addressed this puzzle in Aspects of the Theory of Syntax (1965), where he called it “Plato’s Problem”. This name is a reference to Plato’s Meno, a dialog in which Socrates guides a young boy, without a formal education, into producing a fairly complex geometric proof, apparently from the child’s own mental resources. Considering the difficult question of where this apparent knowledge of geometry came from, Plato, speaking through Socrates, concludes that it must have been present in the child already, although dormant until the right conditions were presented for it to be awakened. Chomsky would endorse largely the same explanation for language acquisition. He also cites Leibniz and Descartes as holding similar views concerning important areas of knowledge.

Chomsky’s theories regarding language acquisition are largely motivated by what has become known as the “Poverty of the Stimulus Argument,” the observation that the information about their native language that children are exposed to seems inadequate to explain the linguistic knowledge that they arrive at. Children only ever hear a small subset of the sentences that they can produce or understand. Furthermore, the language that they do hear is often “corrupt” in some way, such as the incomplete sentences frequently used in casual exchanges. Yet on this basis, children somehow master the complex grammars of their native languages.

Chomsky pointed out that the Poverty of the Stimulus makes it difficult to maintain that language is learned through the same general-purpose learning mechanisms that allow a human being to learn about other aspects of the world. There are many other factors that he and his followers cite to underline this point. All developmentally normal children worldwide are able to speak their native languages at roughly the same age, despite vast differences in their cultural and material circumstances or the educational levels of their families. Indeed, language learning seems to be independent of the child’s own cognitive abilities, as children with high IQs do not learn the grammar of their language faster, on average, than others. There is a notable lack of explicit instruction; analyses of speech corpora show that adult correction of children’s grammar is rare, and it is usually ineffective when it does occur. Considering these factors together, it seems that the way in which human children acquire language requires an explanation in a way that learning, say, table manners or how to put on shoes does not.

The solution to this puzzle is, according to Chomsky, that language is not learned through experience but innate. Children are born with Universal Grammar already in them, so the principles of language are present from birth. What remains is “merely” learning the particularities of the child’s native language. Because language is a part of the human mind, a part that each human being is born with, a child learning her native language is just undergoing the process of shaping that part of her mind into a particular form. In terms of the Principles and Parameters Theory, language learning is setting the value of the parameters. Although subsequent research has shown that things are more complicated than the simple setting of switches, the basic picture remains a part of Chomsky’s theory. The core principles of UG remain unchanged as the child grows, while peripheral elements are more plastic and are shaped by the linguistic environment of the child.

Chomsky has sometimes put the innateness of language in very strong terms and has stated that it is misleading to call language acquisition “language learning”. The language system of the mind is a mental organ, and its development is similar, Chomsky argues, to the growth of bodily organs such as the heart or lungs, an automatic process that is complete at some point in a child’s development. The language system also stabilizes at a certain point, after which changes will be relatively minor, such as the addition of new words to a speaker’s vocabulary. Even many of those who are firm adherents to Chomsky’s theories regard such statements as incautious. It is sometimes pointed out that while the growth of organs does not require having any particular experiences, proper language development requires being exposed to language within a certain critical period in early childhood. This requirement is evidenced by tragic cases of severely neglected children who were denied the needed input and, as a result, never learned to speak with full proficiency.

It has also been pointed out that even the rationalist philosophers whom Chomsky frequently cites did not seem to view innate and learned as mutually exclusive. Leibniz (1704), for instance, stated that arithmetical knowledge is “in us” but still learned, drawn out by demonstration and testing on examples. It has been suggested that some such view is necessary to explain language acquisition. Since humans are not born able to speak in the way that, for example, a horse is able to run within hours of birth, some learning seems to be involved, but those sympathetic to Chomsky regard the Poverty of the Stimulus as ruling out simply acquiring language completely from external sources. According to this view, we are born with language inside of us, but the proper experiences are required to draw that knowledge out and make it available.

The idea of innate language is not universally accepted. The behaviorist theory that language learning is a result of social conditioning, or training, is no longer considered viable. But it is a widely held view that general statistical learning mechanisms, the same mechanisms by which a child learns about other aspects of the world and human society, are responsible for language learning, with only the most general features of language being innate. These sorts of theories tend to have the most traction in schools of linguistic thought that reject the idea of Universal Grammar, maintaining that no deep commonalities hold across human languages. On such a view, there is little about language that can be said to be shared by all humans and therefore innate, so language would have to be acquired by children in the same way as other local customs. Advocates of Chomsky’s views counter that such theories cannot be upheld given the complexity of grammar and the Poverty of the Stimulus, and that the very fact that language acquisition occurs given these considerations is evidence for Universal Grammar. The degree to which language is innate remains a highly contested issue in both philosophy and science.

Although the application of statistical learning mechanisms to machine learning programs, such as OpenAI’s ChatGPT, has proven incredibly successful, Chomsky points out that the architecture of such programs is very different from that of the human mind: “A child’s operating system is completely different from that of a machine learning program” (Chomsky, Roberts, and Watumull, 2023). This difference, Chomskyans maintain, precludes drawing conclusions about the use or acquisition of language by humans on the basis of studying these models.

c. I vs. E languages

Perhaps the way in which Chomsky’s theories differ most sharply from those of other linguists and philosophers is in his understanding of what language is and how a language is to be identified. Almost from the beginning, he has been careful to distinguish speaker performance from underlying linguistic competence, which is the target of his inquiry. During the 1980s, this methodological point would be further developed into the I-language/E-language distinction.

A common concept of what an individual language is, explicitly endorsed by philosophers such as David Lewis (1969), Michael Dummett (1986), and Michael Devitt (2022), is a system of conventions shared between speakers to allow coordination. On this view, language is a public entity used for communication. It is something like this that most linguists and philosophers of language have in mind when they talk about “English” or “Hindi”. Chomsky calls this concept of language E-language, where the “E” stands for external and extensional. What is meant by “extensional” is somewhat technical and will be discussed later in this subsection. “External” refers to the idea just discussed, where language is a public system that exists externally to any of its speakers. Chomsky points out that such a notion is inherently vague, and it is difficult to point to any criteria of identity that would allow one to draw firm boundaries that could be used to tell one such language apart from another. It has been observed that people living near border areas often cannot be neatly categorized as speaking one language or the other; Germans living near the Dutch border are comprehensible to the nearby Dutch but not to many Germans from the southern part of Germany. Based on the position of the border, we say that they are speaking “German” rather than “Dutch” or some other E-language, but a border is a political entity with negligible linguistic significance. Chomsky (1997: 7) has also called attention to what he calls “semi-grammatical sentences,” such as the following string of words:

(17) *The child seems sleeping.

Although (17) is clearly ill-formed, most “English” speakers will be able to assign some meaning to it. Given these conflicting facts, there seems to be no answer to whether (17) or similar strings are part of “English”.

Based on considerations like those just mentioned, Chomsky derides E-languages as indistinct entities that are of no interest to linguistic science. The real concept of interest is that of an I-language, where the “I” stands for intensional and internal. “Intensional” is in opposition to “extensional”, and will be discussed in a moment. “Internal” means contained in the mind of some individual human being. Chomsky defines language as a computational system contained in an individual mind, one that produces syntactic structures that are passed to the mental systems responsible for articulation and interpretation. A particular state of such a system, shaped by the linguistic environment it is exposed to, constitutes an I-language. Because all I-languages contain Universal Grammar, they will all resemble each other in their core aspects, and because more peripheral parts of language are set by the input received, the I-languages of two members of the same linguistic community will resemble one another more closely. For Chomsky, for whom the study of language is ultimately the study of the mind, it is the I-language that is the proper topic of concern for linguists. When Chomsky speaks of “English” or “Swahili”, this is to be understood as shorthand for a cluster of characteristics that are typically displayed by the I-languages of people in a particular linguistic community.

This rejection of external languages as worthy of study is closely related to another point where Chomsky goes against a widely held belief in the philosophy of language, as he does not accept the common hypothesis that language is primarily a means of communication. The idea of external languages is largely motivated by the widespread theory that language is a means for interpersonal communication, something that evolved so that humans could come together, coordinate to solve problems, and share ideas. Chomsky responds that language serves many uses, including to speak silently to oneself for mental clarity, to aid in memorization, to solve problems, to plan, or to conduct other activities that are entirely internal to the individual, in addition to communication. There is no reason to emphasize one of these purposes over any other. Communication is one purpose of language—an important one, to be sure—but it is not the purpose.

Besides the internal/external dichotomy, there is the intensional/extensional distinction, referring to two different ways that sets might be specified. The extension of a set is what elements are in that set, while the intension is how the set is defined and the members are divided from non-members. For instance, the set {1, 2, 3} has as its extension the numbers 1, 2, and 3. The intension of the same set might be the first three positive integers, or the square roots of 1, 4, and 9, or the first three divisors of 6; indeed, an infinite number of intensions might generate the same set extension.
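The point that one extension can be picked out by many intensions can be made concrete with a small sketch. The Python fragment below is only an illustration under stated assumptions: the variable names are invented for this example and do not come from Chomsky or the literature, and each "intension" is modeled simply as a rule that computes a set.

```python
import math

# Extension: the members themselves, listed outright.
extension = {1, 2, 3}

# Three different intensions (defining rules), each modeled as a computation.
# All names here are hypothetical and chosen only for illustration.
first_three_positive_integers = set(range(1, 4))
square_roots_of_1_4_9 = {math.isqrt(n) for n in (1, 4, 9)}
first_three_divisors_of_6 = set([d for d in range(1, 7) if 6 % d == 0][:3])

# The rules differ, yet each one yields exactly the same members.
same_extension = (
    first_three_positive_integers == extension
    and square_roots_of_1_4_9 == extension
    and first_three_divisors_of_6 == extension
)
print(same_extension)
```

Comparing the three computed sets shows only that their members coincide; nothing in the resulting set records which rule produced it, which is why the extension alone underdetermines the intension.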

Applying this concept to languages, a language might be defined extensionally in terms of the sentences of the language or intensionally in terms of the grammar that generates all of those sentences but no others. While Chomsky favors the second approach, he attributes the first to two virtually opposite traditions. Structuralist linguists, who place great value on studying corpora, and other linguists and philosophers who focus on the actual use of language define a language in terms of the sentences attested in corpora and those that fit similar patterns. A very different tradition consists of philosophers of language who are known as “Platonists”, and who are exemplified by Jerrold Katz (1981, 1985) and Scott Soames (1984), former disciples of Chomsky. On this view, every possible language is a mathematical object, a set of possible sentences that really exist in the same abstract sense that sets of numbers do. Some of these sets happen to be the languages that humans speak.

Both of these extensional approaches are rejected by Chomsky, who maintains that language is an aspect of the human mind, so what is of interest is the organization of that part of the mind, the I-language. This is an intensional approach, since a particular I-language will constitute a grammar that will produce a certain set of sentences. Chomsky argues that both extensional approaches, the mathematical and the usage-based, are insufficiently focused on the mental to be of explanatory value. If a language is an abstract mathematical object, a set of sentences, it is unclear how humans are supposed to acquire knowledge of such a thing or to use it. The usage-based approach, as a theory of behavior, is insufficiently explanatory because any real explanation of how language is acquired and used must be in mental terms, which means looking at the organization of the underlying I-language.