Substance

The term “substance” has two main uses in philosophy. Both originate in what is arguably the most influential work of philosophy ever written, Aristotle’s Categories. In its first sense, “substance” refers to those things that are object-like, rather than property-like. For example, an elephant is a substance in this sense, whereas the height or colour of the elephant is not. In its second sense, “substance” refers to the fundamental building blocks of reality. An elephant might count as a substance in this sense. However, this depends on whether we accept the kind of metaphysical theory that treats biological organisms as fundamental. Alternatively, we might judge that the properties of the elephant, or the physical particles that compose it, or entities of some other kind better qualify as substances in this second sense. Since the seventeenth century, a third use of “substance” has gained currency. According to this third use, a substance is something that underlies the properties of an ordinary object and that must be combined with these properties for the object to exist. To avoid confusion, philosophers often substitute the word “substratum” for “substance” when it is used in this third sense. The elephant’s substratum is what remains when you set aside its shape, size, colour, and all its other properties. These philosophical uses of “substance” differ from the everyday use of “substance” as a synonym for “stuff” or “material”. This is not a case of philosophers putting an ordinary word to eccentric use. Rather, “substance” entered modern languages as a philosophical term, and it is the everyday use that has drifted from the philosophical uses.

Table of Contents

- Substance in Classical Greek Philosophy

- Substance in Classical Indian Philosophy

- Substance in Medieval Arabic and Islamic Philosophy

- Substance in Medieval Scholastic Philosophy

- Substance in Early Modern Philosophy

- Substance in Twentieth-Century and Early-Twenty-First-Century Philosophy

- References and Further Reading

1. Substance in Classical Greek Philosophy

The idea of substance enters philosophy at the start of Aristotle’s collected works, in the Categories 1a. It is further developed by Aristotle in other works, especially the Physics and the Metaphysics. Aristotle’s concept of substance was quickly taken up by other philosophers in the Aristotelian and Platonic schools. By late antiquity, the Categories, along with an introduction by Porphyry, was the first text standardly taught to philosophy students throughout the Roman world, a tradition that persisted in one form or another for more than a thousand years. As a result, Aristotle’s concept of substance can be found in works by philosophers across a tremendous range of times and places. Uptake of Aristotle’s concept of substance in Hellenistic and Roman philosophy was typically uncritical, however, and it is necessary to look to other traditions for influential challenges to and/or revisions of the Aristotelian concept.

a. Substance in Aristotle

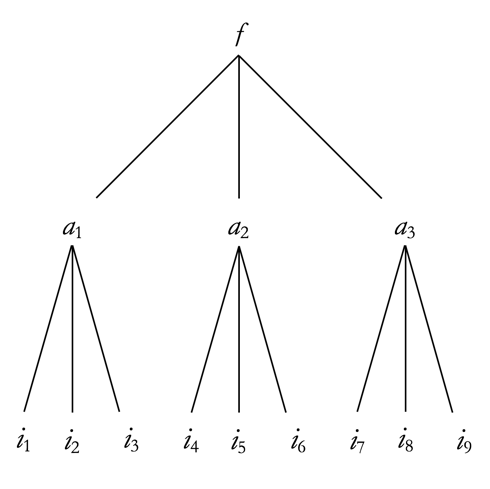

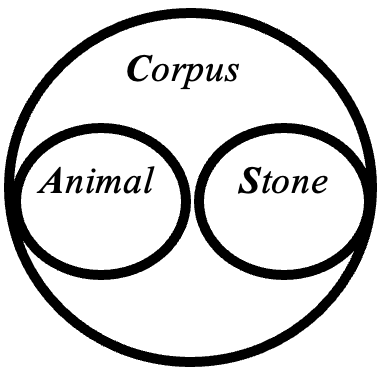

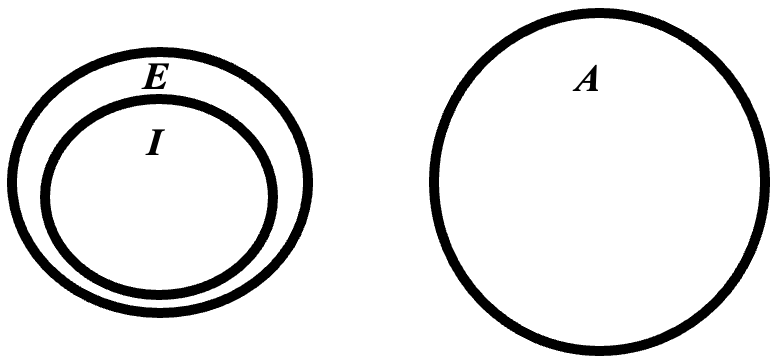

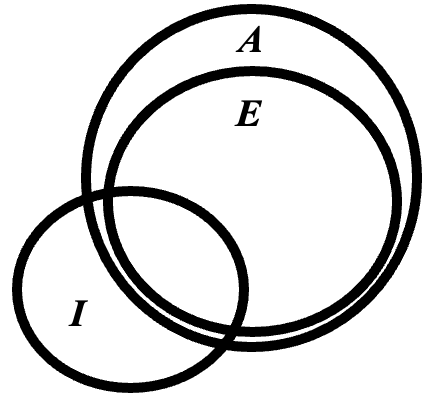

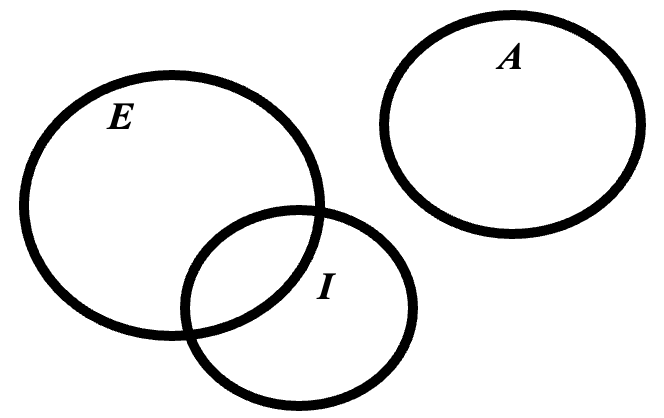

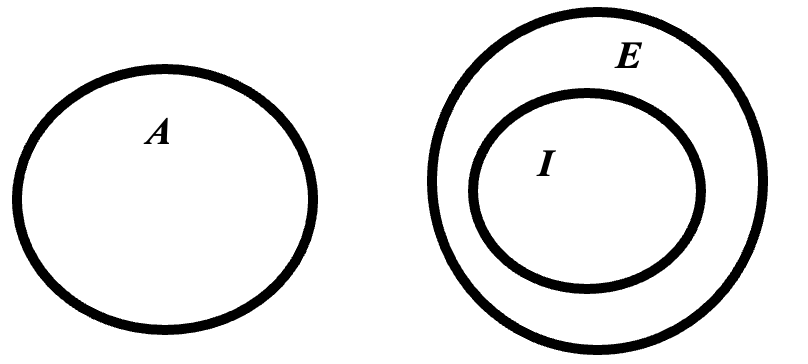

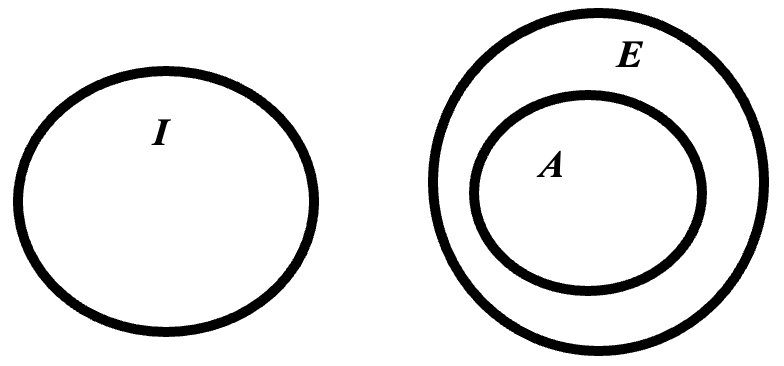

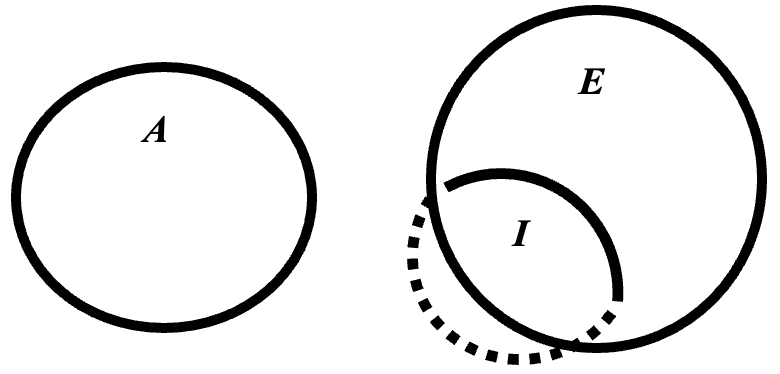

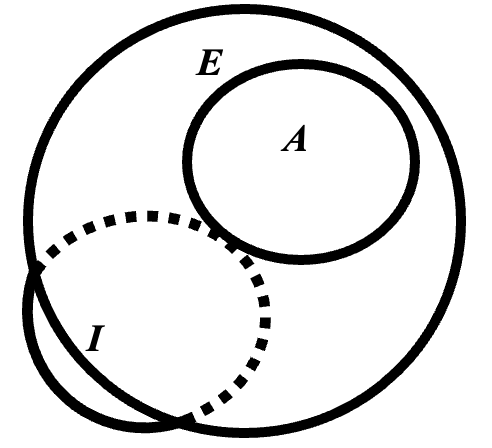

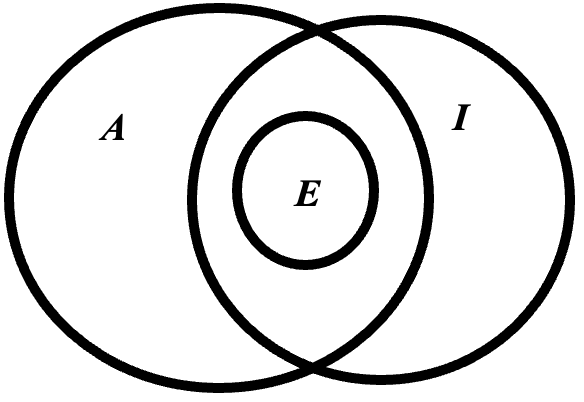

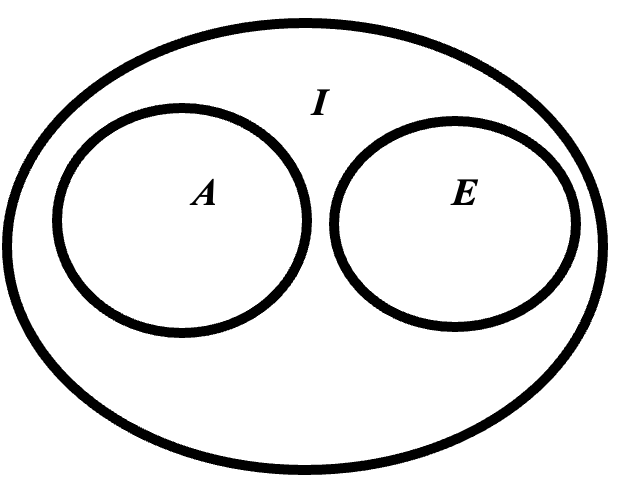

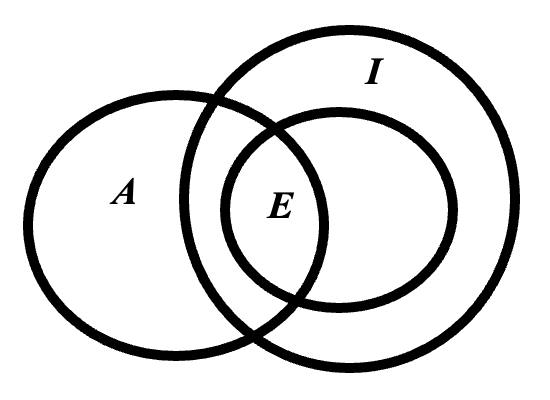

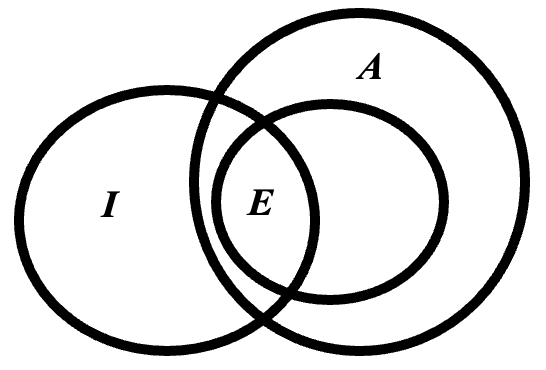

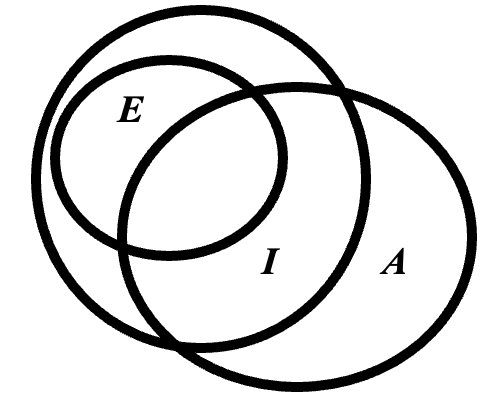

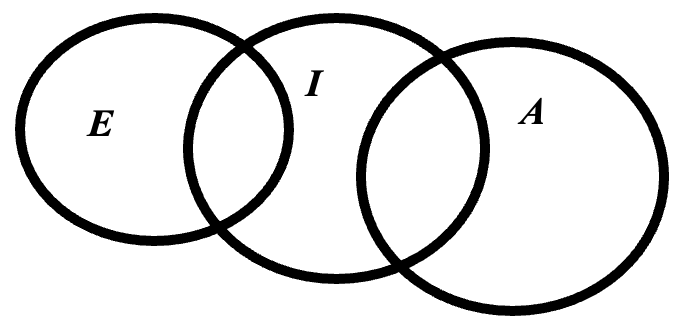

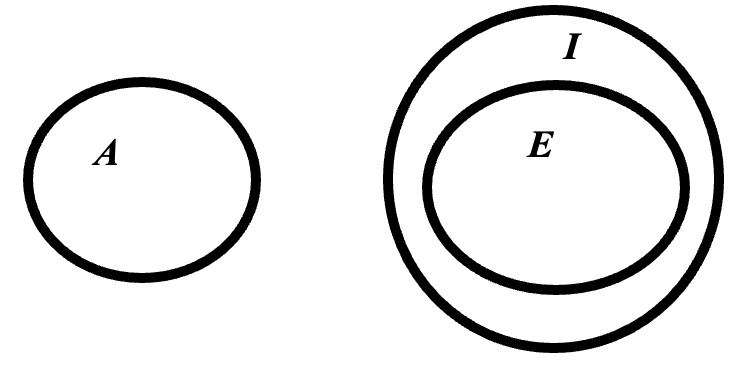

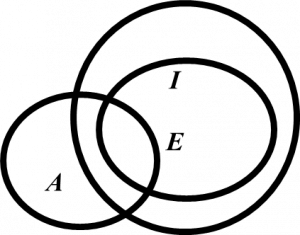

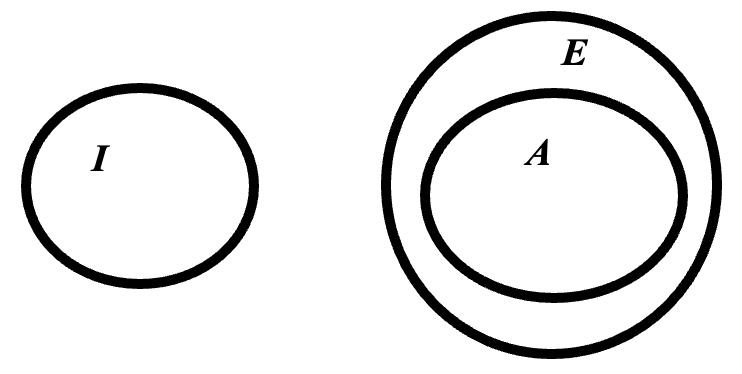

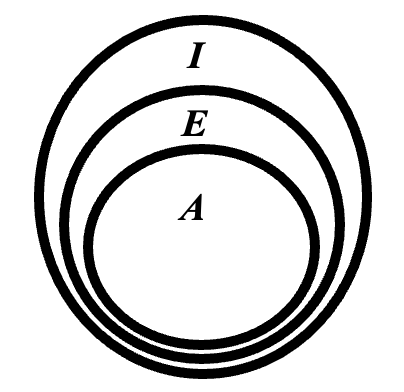

The Categories centres on two ways of dividing up the kinds of things that exist (or, on some interpretations, the kinds of words or concepts for things that exist). Aristotle starts with a simple four-fold division. He then introduces a more complicated ten-fold division. Both give pride of place to the category of substances.

Aristotle draws the four-fold division in terms of two relations: that of existing in a subject in the way that the colour grey is in an elephant, and that of being said of a subject in the way that “animal” or “four-footed” is said of an elephant. Commentators often refer to these relations as inherence and predication, respectively.

Some things, Aristotle says, exist in a subject, and some are said of a subject. Some both exist in and are said of a subject. But members of a fourth group, substances, neither exist in nor are said of a subject:

A substance—that which is called a substance most strictly, primarily, and most of all—is that which is neither said of a subject nor in a subject, e.g. the individual man or the individual horse. (Categories, 2a11)

In other words, substances are those things that are neither inherent in, nor predicated of, anything else. A problem for understanding what this means is that Aristotle does not define the said of (predication) and in (inherence) relations. Aristotle (Categories, 2b5–6) does make it clear, however, that whatever is said of or in a subject, in the sense he has in mind, depends for its existence on that subject. The colour grey and the genus animal, for example, can exist only as the colour or genus of some subject—such as an elephant. Substances, according to Aristotle, do not depend on other things for their existence in this way: the elephant need not belong to some further thing in order to exist in the way that the colour grey and the genus animal (arguably) must. In this respect, Aristotle’s distinction between substances and non-substances approximates the everyday distinction between objects and properties.

Scholars tend to agree that Aristotle treats the things that are said of a subject as universals and other things as particulars. If so, Aristotle’s substances are particulars: unlike the genus animal, an individual elephant cannot have multiple instances. Scholars also tend to agree that Aristotle treats the things that exist in a subject as accidental and the other things as non-accidental. If so, substances are non-accidental. However, the term “accidental” usually signifies the relationship between a property and its bearer. For example, the colour grey is an accident of the elephant because it is not part of its essence, whereas the genus animal is not an accident of the elephant but is part of its essence. The claim that an object-like thing, such as a man, a horse, or an elephant, is non-accidental therefore seems trivially true.

Unlike the four-fold division, Aristotle’s ten-fold division does not arise out of the systematic combination of two or more characteristics such as being said of or existing in a subject. It is presented simply as a list consisting of substance, quantity, qualification, relative, where, when, being-in-a-position, having, doing, and being-affected. Scholars have long debated whether Aristotle had a system for arriving at this list of categories or whether he “merely picked them up as they occurred to him” as Kant suggests (Critique of Pure Reason, Pt.2, Div.1, I.1, §3, 10).

Despite our ignorance about how he arrived at it, Aristotle’s ten-fold division helps clarify his concept of substance by providing a range of contrast cases: substances are not quantities, qualifications, relatives and so on, all of which depend on substances for their existence.

Having introduced the ten-fold division, Aristotle also highlights some characteristics that make substances stand out (Categories, 3b–8b): a substance is individual and numerically one, has no contrary (nothing stands to an elephant as knowledge stands to ignorance or justice to injustice), does not admit of more or less (no substance is more or less a substance than another substance, no elephant is more or less an elephant than another elephant), is not said in relation to anything else (one can know what an elephant is without knowing anything else to which it stands in some relation), and is able to receive contraries (an elephant can be hot at one time, cold at another). Aristotle emphasises that whereas substances share some of these characteristics with some non-substances, the ability to receive contraries while being numerically one is unique to substances (Categories, 4a10–13).

The core idea of a substance in the Categories applies to those object-like particulars that, uniquely, do not depend for their existence on some subject in which they must exist or of which they must be said, and that are capable of receiving contraries when they undergo change. That, at any rate, is how the Categories characterises those things that are “most strictly, primarily, and most of all” called “substances”. One complication must be noted. Aristotle adds that:

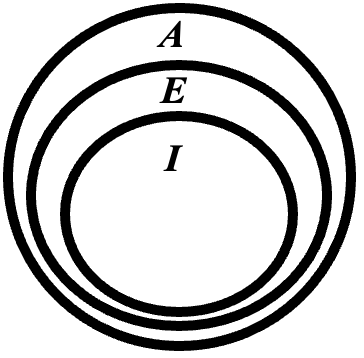

The species in which the things primarily called substances are, are called secondary substances, as also are the genera of these species. For example, the individual man belongs in a species, man, and animal is a genus of the species; so these—both man and animal—are called secondary substances. (Categories, 2a13)

Strictly, then, the Categories characterises two kinds of substances: primary substances, which have the characteristics we have looked at, and secondary substances, which are the species and genera to which primary substances belong. However, Aristotle’s decision to call the species and genera to which primary substances belong “secondary substances” is not typically adopted by later thinkers. When people talk about substances in philosophy, they almost always have in mind a sense of the term derived from Aristotle’s discussion of primary substances. Except where otherwise specified, the same is true of this article.

In singling out object-like particulars such as elephants as those things that are “most strictly, primarily and most of all” called “substance”, Aristotle implies that the term “substance” is no mere label, but that it signifies a special status. A clue as to what Aristotle has in mind here can be found in his choice of terminology. The Greek term translated “substance” is ousia, an abstract noun derived from the participle ousa of the Greek verb eimi, meaning—and cognate with—I am. Unlike the English “substance”, ousia carries no connotation of standing under or holding up. Rather, ousia suggests something close to what we mean by the word “being” when we use it as a noun. Presumably, therefore, Aristotle regards substances as those things that are most strictly and primarily counted as beings, as things that exist.

Aristotle sometimes refers to substances as hypokeimena, a term that does carry the connotation of standing under (or rather, lying under), and that is often translated with the term “subject”. Early translators of Aristotle into Latin frequently used a Latin rendering of hypokeimenon—namely, substantia—to translate both terms. This is how we have ended up with the English term “substance”. It is possible that this has contributed to some of the confusions that have emerged in later discussions, which have placed too much weight on the connotations of the English term (see section 5.c).

Aristotle also discusses the concept of substance in a number of other works. If these have not had the same degree of influence as the Categories, their impact has nonetheless been considerable, especially on scholastic Aristotelianism. Moreover, these works add much to what Aristotle says about substance in the Categories, in some places even seeming to contradict it.

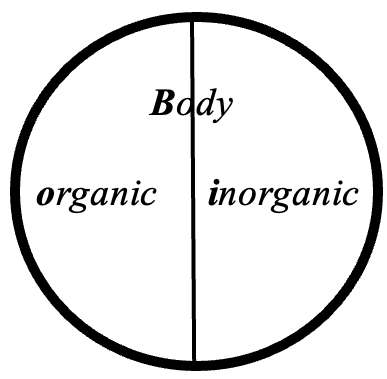

The most important development of Aristotle’s concept of substance outside the Categories is his analysis of material substances into matter (hyle) and form (morphe), an analysis that has come to be known as hylomorphism (though only since the late nineteenth century). This analysis is developed in the Physics, a text dedicated to things that undergo change, and which therefore, unsurprisingly, also has to do with substances. Given the distinctions drawn in the Categories, one might expect Aristotle’s account of change to simply say that change occurs when a substance gains or loses one of the things that are said of or that exist in it: before its bath, the elephant is hot and grey, but afterwards, it is cool and mud-coloured. However, Aristotle also has the task of accounting for substantial change, that is, the coming to be or ceasing to exist of a substance. An old tradition in Greek philosophy, beginning with Parmenides, suggests that substantial change should be impossible, since it involves something coming from nothing or vanishing into nothing. In the Physics, Aristotle addresses this issue by analysing material substances into the matter they are made of and the form that organises that matter. This allows him to explain substantial change. For example, when a vase comes into existence, the pre-existing clay acquires the form of a vase, and when it is destroyed, the clay loses the form of a vase. Neither process involves something coming from or vanishing into nothing. Likewise, when an elephant comes into existence, pre-existing matter acquires the form of an elephant. When an elephant ceases to exist, the matter loses the form of an elephant, becoming (mere) flesh and bones.

Aristotle returns to the topic of substance at length in the Metaphysics. Here, much to the confusion of readers, Aristotle raises the question of what is most properly called a “substance” afresh and considers three options: the matter of which something is made, the form that organises that matter, or the compound of matter and form. Contrary to what was said in the Categories and the Physics, Aristotle seems to say that the term “substance” applies most properly not to a compound of matter and form such as an elephant or a vase, but to the form that makes that compound the kind of thing it is. (The form that makes a hylomorphic compound the kind of thing it is, such as the form of an elephant or the form of a vase, is referred to as a substantial form, to distinguish it from accidental forms such as size or colour.) Scholars do not agree on how to reconcile this position with that of Aristotle’s other works. In any case, it should be noted that it is Aristotle’s identification of substances with object-like particulars such as elephants and vases that has guided most later discussions of substance.

One explanation for Aristotle’s claim in the Metaphysics that it is the substantial form that most merits the title of “substance” concerns material change. In the Categories, Aristotle emphasises that substances are distinguished by their ability to survive through change. Living things, such as elephants, however, do not just change with respect to accidental forms such as temperature and colour. They also change with respect to the matter they are made of. As a result, it seems that if the elephant remains the same elephant over time, this must be in virtue of its having the same substantial form.

In the Metaphysics, Aristotle rejects the thesis that the term “substance” applies to matter. In discussing this thesis, he anticipates a usage that becomes popular from the seventeenth century onwards. On this usage, “substance” does not refer to object-like particulars such as elephants or vases; rather, it refers to an underlying thing that must be combined with properties to yield an object-like particular. This underlying thing is typically conceived as having no properties in itself, but as standing under or supporting the properties with which it must be combined. The application of the term “substance” to this underlying thing is confusing, and the common practice of favouring the word “substratum” in this context is followed here. The idea of a substratum that must be combined with properties to yield a substance in the ordinary sense is close to Aristotle’s idea of matter that must be combined with form. It is closer still to the concept of prime matter, which is traditionally (albeit controversially) attributed to Aristotle and which, unlike flesh or clay, is conceived as having no properties in its own right, except perhaps spatial extension. Though the concept of a substratum is not the same as the concept of substance in its original sense, it also plays an extremely important role in the history of philosophy, and one that has antecedents earlier than Aristotle in the Presocratics and in classical Indian philosophy, a topic discussed in section 2.b.

b. Substance in Hellenistic and Roman Philosophy

As noted in the previous section, in the Categories, Aristotle distinguishes two kinds of non-substance: those that exist in a subject and those that are said of a subject. He goes on to divide these further, into the ten categories from which the work takes its name: quantity, qualification, relative, where, when, being-in-a-position, having, doing, being-affected, and secondary substance (which we can count as non-substances for the reasons explained in section 1.a).

Although an enormous number of subsequent thinkers adopt the basic distinction between substances and non-substances, many omit the distinction between predication and inherence, that is, between non-substances that are said of a subject and non-substances that exist in a subject. Moreover, many compact the list of non-substances. For example, the late Neoplatonist Simplicius (480–560 C.E.) records that the second head of the Academy after Plato, Xenocrates (395/96–313/14 B.C.E.), as well as the eleventh head of the Peripatetic school, Andronicus of Rhodes (ca. 60 B.C.E.), reduced Aristotle’s ten categories to two: things that exist in themselves, meaning substances, and things that exist in relation to something else, meaning non-substances.

In adopting the language of things that exist in themselves and those that exist in relation to something else, philosophers such as Xenocrates and Andronicus of Rhodes appear to have been recasting Aristotle’s distinction between substances and non-substances in a terminology that approximates that of Plato’s Sophist (255c). It can therefore be argued that the distinction between substances and non-substances that later thinkers inherit from Aristotle also has a line of descent from Plato, even if Plato devotes much less attention to the distinction.

The definition of substances as things that exist in themselves (kath’ auta or per se) is commonplace in the history of philosophy after Aristotle. The expression is, however, regrettably imprecise, both in the original Greek and in the various translations that have followed. For it is not clear what the preposition “in” is supposed to signify here. Clearly, it does not signify containment, as when water exists in a vase or a brick in a wall. It is plausible that the widespread currency of this vague phrase is responsible for the failure of the most influential philosophers from antiquity onwards to state explicit necessary and sufficient conditions for substancehood.

The simplification of the category of non-substances and the introduction of the Platonic in itself terminology are the main philosophical innovations respecting the concept of substance in Hellenistic and Roman philosophy. The concept would also be given a historic theological application when the Nicene Creed (ca.325 C.E.) defined the Father and Son of the Holy Trinity as consubstantial (homoousion) or of one substance. As a result, the philosophical concept of substance would play a central role in the Arian controversy that shaped early Christian theology.

Although Hellenistic and Roman discussions of substance tend to be uncritical, an exception can be found in the Pyrrhonist tradition. Sextus Empiricus records a Pyrrhonist argument against the distinction between substance and non-substance, which says, in effect, that:

- If things that exist in themselves do not differ from things that exist in relation to something else, then they too exist in relation to something else.

- If things that exist in themselves do differ from things that exist in relation to something else, then they too exist in relation to something else (for to differ from something is to stand in relation to it).

- Therefore, the idea of something that exists in itself is incoherent (see McEvilley 2002, 469).

While arguing against the existence of substances is not a central preoccupation of Pyrrhonist philosophy, it is a central concern of the remarkably similar Buddhist Madhyamaka tradition, and there is a possibility of influence in one direction or the other.

2. Substance in Classical Indian Philosophy

The concept of substance in Western philosophy derives from Aristotle via the ancient and medieval philosophical traditions of Europe, the Middle East and North Africa. Either the same or a similar concept is central to the Indian Vaisheshika and Jain schools, to the Nyaya school with which Vaisheshika merged and, as an object of criticism, to various Buddhist schools. This appears to have been the first time that the concept of substance was subjected to sustained philosophical criticism, anticipating and possibly influencing the well-known criticisms of the idea of substance advanced by early modern Western thinkers.

a. Nyaya-Vaisheshika and Jain Substances

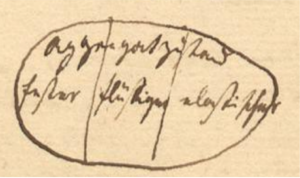

There exist six orthodox schools of Indian philosophy (those that acknowledge the authority of the Vedas, the principal Hindu scriptures) and four major unorthodox schools. The orthodox schools include Vaisheshika and Nyaya, which appear to have begun as separate traditions, but which merged some time before the eleventh century. The founding text of the Vaisheshika school, the Vaisheshikasutra, is attributed to a philosopher named Kaṇāda and was composed sometime between the fifth and the second century B.C.E. Like Aristotle’s Categories, the focus of the Vaisheshikasutra is on how we should divide up the kinds of things that exist. The Vaisheshikasutra presents a three-fold division into substance (dravya), quality (guna), and motion (karman). The substances are divided, in turn, into nine kinds. These are the five elements (earth, water, fire, air, and aether), with the addition of time, space, soul, and mind.

The early Vaisheshika commentators, Praśastapāda (ca. 6th century) and Candrānanda (ca. 8th century), expand the Vaisheshikasutra’s three-category division into what has become a canonical list of six categories. The additional categories are universal (samanya), particularity (vishesha), and inherence (samavaya), concepts which are also mentioned in the Vaisheshikasutra, but which are not, in that text, given the same prominence as substance, quality and motion (excepting one passage of a late edition which is of questionable authenticity).

The Sanskrit term translated as “substance”, dravya, comes from drú, meaning wood or tree, and therefore has a parallel etymology to Aristotle’s term for matter, hyle, which means wood in non-philosophical contexts. Nonetheless, it is widely recognised that the meaning of dravya is close to the meaning of Aristotle’s ousia: like Aristotle’s ousiai, dravyas are contrasted with quality and motion; they are distinguished by their ability to undergo change and by the fact that other things depend on them for their existence. McEvilley (2002, 526–7) lists further parallels.

At the same time, there exist important differences between the Vaisheshika approach to substance and that of Aristotle. One difference concerns the paradigmatic examples. Aristotle’s favourite examples of substances are individual objects, and it is not clear that he would count the five classical elements, soul, or mind, as substances. (Aristotle’s statements on these themes are ambiguous and interpretations differ.) Moreover, Aristotle would not class space or time as substances. This, however, need not be taken to show that the Vaisheshika and Aristotelian concepts of substance are themselves fundamentally different. For philosophers who inherit Aristotle’s concept of substance often disagree with Aristotle about its extension in ways similar to those of Vaisheshika philosophers.

A second difference between the Vaisheshika approach to substance and Aristotle’s is that according to Vaisheshika philosophers, composite substances (anityadravya, that is, noneternal substances), though they genuinely exist, do not persist through change. An individual atom of earth or water exists forever, but as soon as you remove a part of a tree, you have a new tree (Halbfass 1992, 96). A possible explanation for both differences between Vaisheshika and Aristotelian substances is that the former are not understood as compounds of matter and form but rather play a role somewhere between that of Aristotelian substances and Aristotelian matter.

Something closer to Aristotle’s position on this point is found in Jain discussions of substance, which appear to be indebted to the Vaisheshika notion, but which combine it with the idea of a vertical universal (urdhvatasamanya). The vertical universal plays a similar role to Aristotle’s substantial form, in that it accompanies an individual substance through nonessential modifications and can therefore account for its identity through material change.

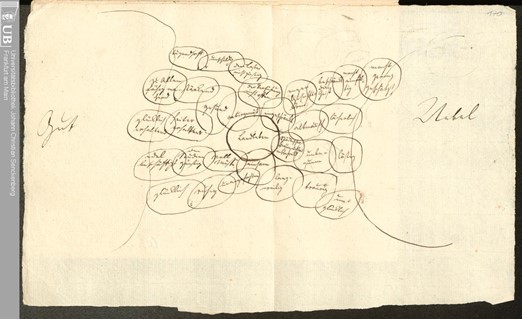

The earliest parts of the Vaisheshikasutra are believed to have been authored between the fifth and second centuries B.C.E., with most parts being in place by the second century C.E. (Moise and Thite 2022, 46). This interval included a period of intense cultural exchange between Greece and India, beginning in the final quarter of the fourth century B.C.E. In view of the close parallels between the philosophy of Aristotle and that of the proponents of Vaisheshika, and of the interaction between the two cultures going on at this time, Thomas McEvilley (2002, 535) states that “it is possible to imagine stimulus diffusion channels” whereby elements of Vaisheshika’s thought “could reflect Greek, and specifically Peripatetic, influence”, including Aristotelian ideas about substance. However, it is also possible that the Vaisheshika and Aristotelian concepts of substance developed independently, despite their similarity.

b. Upanishadic Substrata

The paradigmatic examples of substances identified by Vaisheshika thinkers, like those identified by Aristotelians, are ordinary propertied things such as earth, water, humans and horses. Section 1.a noted that since the seventeenth century, the term “substance” has acquired another usage, according to which “substance” does not apply to ordinary propertied things, but to a putative underlying entity that is supposed to lack properties in itself but to combine with properties to yield substances of the ordinary sort. The underlying entity is often referred to as a substratum to distinguish it from substances in the traditional sense of the term. Although the application of the term “substance” to substrata only became well-established in the seventeenth century, the idea that substances can be analysed into properties and an underlying substratum is very old and merits attention here.

As already mentioned, the idea of a substratum is exemplified by the idea of prime matter traditionally attributed to Aristotle. An earlier precursor of this idea is the Presocratic Anaximander, according to whom the apeiron underlies everything that exists. Apeiron is usually translated “infinite”; however, in this context, a more illuminating (albeit etymologically parallel) translation would be “unlimited” or “indefinite”. Anaximander’s apeiron is a thing conceived of in abstraction from any characteristics that limit or define its nature: it is a propertyless substratum. It is reasonable, moreover, to attribute essentially the same idea to Anaximander’s teacher, Thales. For although Thales identified the thing underlying all reality as water, and not as the apeiron, once it is recognised that “water” here is used as a label for something that need not possess any of the distinctive properties of water, the two ideas turn out to be more or less the same.

Thales was the first of the Presocratics and, therefore, the earliest Western philosopher to whom the idea of a substratum can be attributed. Thomas McEvilley (2002) argues that it is possible to trace the idea of a substratum still further back to the Indian tradition. First, McEvilley proposes that Thales’ claim that everything is water resembles a claim advanced by Sanatkumara in the Chandogya Upanishad (ca. 8th–6th century B.C.E.), which may well predate Thales. Moreover, just as we can recognise an approximation of the idea of a propertyless substratum in Thales’ claim, the same goes for Sanatkumara’s. McEvilley adds that even closer parallels can be found between Anaximander’s idea of the apeiron and numerous Upanishadic descriptions of brahman as that which underlies all beings, descriptions which, in this case, certainly appear much earlier.

The idea of substance in the sense of an underlying substratum can, therefore, be traced back as far as the Upanishads, and it is possible that the Upanishads influenced the Presocratic notion and, in turn, Aristotle. For there was significant Greek-Indian interchange in the Presocratic period, mediated by the Persian empire, and there is persuasive evidence that Presocratic thinkers had some knowledge of Upanishadic texts or of some unknown source that influenced both (McEvilley 2002, 28–44).

c. Buddhist Objections to Substance

The earliest sustained critiques of the notion of substance appear in Buddhist philosophy, beginning with objections to the idea of a substantial soul or atman. Early objections to the idea of a substantial soul are extended to substances in general by Nagarjuna, the founder of the Madhyamaka school, in around the second or third century C.E. As a result, discussions about substances would end up being central to the philosophical traditions across Eurasia in the succeeding centuries.

The earliest Buddhist philosophical texts are the discourses attributed to the Buddha himself and to his immediate disciples, collected in the Sutra Piṭaka. These are followed by the more technical and systematic Abhidharma writings collected in the Abhidhamma Piṭaka. The Sutra Piṭaka and the Abhidhamma Piṭaka are two of the three components of the Buddhist canon, the third being the collection of texts about monastic living known as the Vinaya Piṭaka. (The precise content of these collections differs in different Buddhist traditions, the Abhidhamma Piṭaka especially.)

The Sutra Piṭaka and the Abhidhamma Piṭaka both contain texts arguing against the idea of a substantial soul. According to the authors of these texts, the term atman is applied by convention to what is in fact a mere collection of mental and physical events. The Samyutta Nikaya, a subdivision of the Sutra Piṭaka, attributes a classic expression of this view to the Buddhist nun Vajira. Bhikkhu Bodhi (2000, 230) translates the relevant passage as follows:

Why now do you assume ‘a being’?

Mara, is that your speculative view?

This is a heap of sheer formations:

Here no being is found.

Just as, with an assemblage of parts,

The word ‘chariot’ is used,

So, when the aggregates exist,

There is the convention ‘a being’.

Although they oppose the idea of a substantial self, the texts collected in the Sutra Piṭaka and the Abhidhamma Piṭaka do not argue against the existence of substances generally. Indeed, Abhidharma philosophers analysed experiential reality into elements referred to as dharmas, which are often described in terms suggesting that they are substances (all the more so in later, noncanonical texts in the Abhidharma tradition).

The Madhyamaka school arose in response to Abhidharma philosophy as well as non-Buddhist schools such as Nyaya-Vaisheshika. In contrast to earlier Buddhist thought, its central preoccupation is the rejection of substances generally.

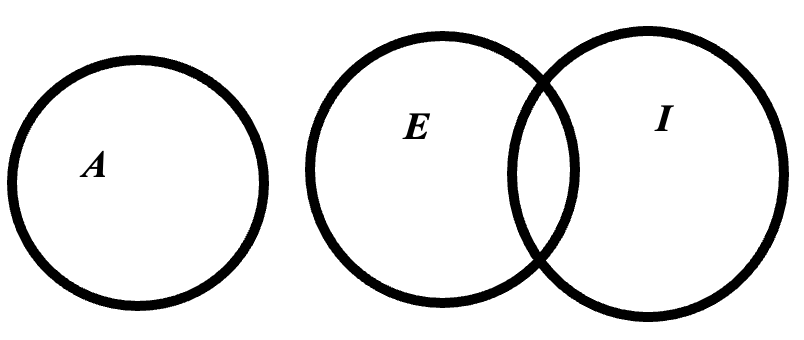

Madhyamaka means middle way. The school takes this name from its principal doctrine, which aims to establish a middle way between two opposing metaphysical views: realism (broadly the view that some things are ultimately real) and nihilism (the view that ultimately, nothing exists). Nagarjuna expresses the third alternative as the view that everything is characterised by emptiness (sunyata), which he explicates as the absence of svabhava. While svabhava has various interconnected meanings in Nagarjuna’s thought, it is mainly used to express the idea of substance understood as “any object that exists objectively, the existence and qualities of which are independent of other objects, human concepts, or interests” (Westerhoff 2009, 199).

Westerhoff (2009, 200–212) summarises several arguments against substance that can be attributed to Nagarjuna. These include an argument that substances could not stand in causal relations, an argument that substances could not undergo change, and an argument that there exists no satisfactory account of the relation between a substance and its properties. The first two appear to rule out substances only on the assumption that substances, if they exist at all, must stand in causal relations and undergo change, something that most, but not all, proponents of substances would hold. Regarding the self or soul, Nagarjuna joins with other Buddhist schools in arguing that what we habitually think of as a substantial self is in fact a collection of causally interconnected psychological and physical events.

The principal targets of Nagarjuna’s attacks on the concept of substance are Abhidharma and Nyaya-Vaisheshika philosophies. A central preoccupation of the Nyaya school is to respond to Buddhist arguments, including those against substance. It is possible that a secondary target is the concept of substance in Greek philosophy. As noted above, there is some evidence of influence between the Greek and Indian philosophical traditions in one or both directions. Greeks in India took a significant interest in Buddhism, with Greek converts contributing to Buddhist culture. The best known of these, Menander, a second-century B.C.E. king of Bactria, is one of the two principal interlocutors in the Milindapañha, a Buddhist philosophical dialogue that includes a famous presentation of Vajira’s chariot analogy.

There also exist striking parallels between the arguments of the Pyrrhonists, as recorded by Sextus Empiricus in around 200 C.E., and those of the Madhyamaka school founded by Nagarjuna at about the same time (McEvilley 2002; Neale 2014). Diogenes Laertius records that Pyrrho himself visited India with Alexander the Great’s army, spending time in Taxila, which would become a centre of Buddhist philosophy. Roman historians record flourishing trade between the Roman empire and India. There was, therefore, considerable opportunity for philosophical interchange during the period in question. Nonetheless, arguing against the idea of substance does not seem to have been such a predominant preoccupation for the Pyrrhonists as it was for the Madhyamaka philosophers.

3. Substance in Medieval Arabic and Islamic Philosophy

Late antiquity and the Middle Ages saw a decline in the influence of Greco-Roman culture in and beyond Europe, hastened by the rise of Islam. Nonetheless, the tradition of beginning philosophical education with Aristotle’s logical works, starting with the Categories, retained an enormous influence in Middle Eastern intellectual culture. (Aristotle’s work was read not only in Greek but also in Syriac and Arabic translations from the sixth and ninth centuries respectively.) The translation of Greek philosophical works into Arabic was accompanied by a renaissance in Aristotelian philosophy beginning with al-Kindi in the ninth century. Inevitably, this included discussions of the concept of substance, which is present throughout the philosophy of this period. Special attention is due to al-Farabi for an early detailed treatment of the topic and to Avicebron (Solomon ibn Gabirol) for his influential defence of the thesis that all substances other than God are composed of matter and form. Honourable mention is also due to Avicenna’s (Ibn Sina’s) floating-man argument, which is widely seen as anticipating Descartes’ (in)famous disembodiment argument for the thesis that the mind is an immaterial substance.

a. Al-Farabi

The resurgence of Aristotelian philosophy in the Arabic and Islamic world is usually traced back to al-Kindi. Al-Kindi’s works on logic (the subject area to which the Categories is traditionally assigned) have, however, been lost, and with them any treatment of substance they might have contained. Thérèse-Anne Druart (1987) identifies al-Farabi’s discussion of djawhar, in his Book of Letters, as the first serious Arabic study of substance. There, al-Farabi distinguishes between the literal use of djawhar (meaning gem or ore), metaphorical uses to refer to something valuable or to the material of which something is constituted, and three philosophical uses as a term for substance or essence.

The first two philosophical uses of djawhar identified by al-Farabi approximate Aristotle’s primary and secondary substances. That is, in the first philosophical usage, djawhar refers to a particular that is not said of and does not exist in a subject. For example, an elephant. In the second philosophical usage, it refers to the essence of a substance in the first sense. For example, the species elephant. Al-Farabi adds a third use of djawhar, in which it refers to the essence of a non-substance. For example, to colour, the essence of the non-substance grey.

Al-Farabi says that the other categories depend on those of first and second substances and that this makes the categories of first and second substances more perfect than the others. He reviews alternative candidates for the status of djawhar put forward by unnamed philosophers. These include universals, indivisible atoms, spatial dimensions, mathematical points, and matter. The idea appears to be that these could turn out to be superior candidates for substances because they are more perfect. However, with one exception, al-Farabi does not discover anything more perfect than primary and secondary substances.

The exception is as follows. Al-Farabi claims that it can be proved that there exists a being that is neither in nor predicated of a subject and that is not a subject for anything else either. This being, al-Farabi claims, is more worthy of the term djawhar than the object-like primary substances, insofar as it is still more perfect. Although al-Farabi indicates that it would be reasonable to extend the philosophical usage of djawhar to this being, he does not propose to break with established usage. Insofar as “more perfect” means “more fundamental”, we see here the tension mentioned at the beginning of this article between the use of the term “substance” for object-like things and its use for whatever is most fundamental.

b. Avicebron (Solomon ibn Gabirol)

Avicebron was an eleventh-century Iberian Jewish Neoplatonist. In addition to a large corpus of poetry, he wrote a philosophical dialogue, known by its Latin name, Fons Vitae (Fountain of Life), which would have a great influence on Christian scholastic philosophy in the twelfth and thirteenth centuries.

Avicebron’s principal contribution to the topic of substance is his presentation of the position known as universal hylomorphism. As explained in section 1, Aristotle defends hylomorphism, the view that material substances are composed of matter (hyle) and form (morphe). However, Aristotle does not extend this claim to all substances. He leaves room for the view that there exist many substances, including human intellects, that are immaterial. By late antiquity, a standard interpretation of Aristotle emerged, according to which such immaterial substances do in fact exist. By contrast, in the Fons Vitae, Avicebron defends the thesis that all substances, with the sole exception of God, are composed of matter and form.

There is a sense in which Avicebron’s universal hylomorphism is a kind of materialism: he holds that created reality consists solely of material substances. It is, however, important not to be misled by this fact. For although they argue that all substances, barring God, are composed of matter and form, Avicebron and other universal hylomorphists draw a distinction between the ordinary matter that composes corporeal substances and the spiritual matter that composes spiritual substances. Spiritual matter plays the same role as ordinary matter in that it combines with a form to yield a substance. However, the resulting substances do not have the characteristics traditionally associated with material entities. They are not visible objects that take up space. Hence, universal hylomorphism would not satisfy traditional materialists such as Epicurus or Hobbes, who defend their position on the basis that everything that exists must take up space.

Scholars do not agree on what the case for universal hylomorphism is supposed to be. Paul Vincent Spade (2008) suggests that it results from two assumptions: that only God is metaphysically simple in all respects, and that anything that is not metaphysically simple in all respects is a composite of matter and form. However, Avicebron does not explicitly defend this argument, and it is not obvious why something could not qualify as non-simple in virtue of being complex in some way other than involving matter and form.

4. Substance in Medieval Scholastic Philosophy

In the early sixth century, Boethius set out to translate the works of Plato and Aristotle into Latin. This project was cut short when he was executed by Theodoric the Great, but Boethius did manage to translate Aristotle’s Categories and De Interpretatione. A century later, Isidore of Seville summarised Aristotle’s account of substance in the Categories in his Etymologiae, perhaps the most influential book of the Middle Ages after the Bible. As a result, the concept of substance introduced in Aristotle’s Categories remained familiar to philosophers after the fall of the Western Roman Empire. Nonetheless, prior to the twelfth century, philosophy in the Latin West consisted principally in elaborating on traditional views inherited from the Church Fathers and other familiar authorities. It is only from the twelfth century onwards that philosophers made novel contributions to the topic of substance, influenced by Arabic-Islamic philosophy and by the recovery of ancient works by Aristotle and others. The most important are those of Thomas Aquinas and John Duns Scotus.

a. Thomas Aquinas

All the leading philosophers of this period adopted a version of Aristotle’s concept of substance. Many, and in particular those in the Franciscan order, such as Bonaventure, followed Avicebron in accepting universal hylomorphism. Aquinas’s main contribution to the topic of substance is his opposition to Avicebron’s position.

Aquinas endorses Aristotle’s definition of a substance as something that neither is said of, nor exists in, a subject, and he follows Aristotle in analysing material substances as composites of matter and form. However, Aquinas recognised a problem about how to square these views with his belief that some substances, including human souls, are immaterial.

Aquinas was committed to the view that, unlike God, created substances are characterised by potentiality. For example, before its bath, the elephant is actually hot but potentially cool. Aquinas takes the view that in material substances, it is matter that contributes potentiality, for matter is capable of receiving different forms. Since immaterial substances lack matter, it seems to follow that they also lack potentiality. Aquinas is happy to accept this conclusion with respect to God, whom he regards as pure act. He is not, however, willing to say the same of other immaterial substances, such as angels and human souls, which he takes to be characterised by potentiality no less than material substances.

One solution would be to adopt the universal hylomorphism of Avicebron, but Aquinas rejects this position on the basis that the potentiality of matter, as usually understood, consists ultimately in its ability to move through space. If so, it seems that matter can only belong to spatial, and hence corporeal, beings (Quaestiones Disputatae de Anima, 24.1.49.142–164).

Instead, Aquinas argues that although immaterial substances are not composed of matter and form, they are composed of essence and existence. In immaterial substances, it is their essence that contributes potentiality. This account of immaterial substances presupposes that existence and essence are distinct, an idea that had been anticipated by Avicenna as a corollary of his proof of God’s existence. Aquinas defends the distinction between existence and essence in De Ente et Essentia, though scholars disagree about how exactly the argument should be understood (see Gavin Kerr’s article on Aquinas’s Metaphysics).

Aquinas recognises that one might be inclined to refer to incorporeal potentiality as matter simply on the basis that it takes on, in spiritual substances, the role that matter plays in corporeal substances. However, he takes the view that this use of the term “matter” would be equivocal and potentially misleading.

A related, but more specific, contribution by Aquinas concerns the issue of how a human soul, if it is the form of a hylomorphic compound, can nonetheless be an immaterial substance in its own right, capable of existing without the body after the body’s death. Aquinas compares the propensity of the soul to be embodied to the propensity of lighter objects to rise, observing that in both cases, the propensity can be obstructed while the object remains in existence. For more on this issue, see Christopher Brown’s article on Thomas Aquinas.

b. Duns Scotus

Like Aquinas, Scotus adopts the Categories’ account of substance. In contrast to earlier Franciscans, he agrees with Aquinas’s rejection of universal hylomorphism. Indeed, Scotus goes even further, claiming not only that form can exist without matter, but also that prime matter can exist without form. As a result, Scotus is committed to the view that matter has a kind of formless actuality, something that, in Aquinas’s system, looks like a contradiction.

Although he drops the doctrine of universal hylomorphism, Scotus maintains, against Aquinas, a second thesis concerning substances that is associated with Franciscan philosophers and often paired with universal hylomorphism: the view that a single substance can have multiple substantial forms (Ordinatio, 4).

According to Aquinas, a substance has only one substantial form. For example, the substantial form of an elephant is the species elephant. The parts of the elephant, such as its organs, do not have their own substantial forms. Because substantial forms are responsible for the identity of substances over time, this view has the counterintuitive consequence that when, for example, an organ transplant takes place, the organ acquired by the recipient is not the one that was possessed by the donor.

According to Scotus, by contrast, one substance can have multiple substantial forms. For example, the parts of the elephant, such as its organs, may each have their own substantial form. This allows followers of Scotus to take the intuitive view that when an organ transplant takes place, the organ acquired by the recipient is one and the same as the organ that the donor possessed, and not a new entity that has come into existence after the donor’s death. (Aristotle seems to endorse the position of Scotus in the Categories, and that of Aquinas in the Metaphysics.)

Scotus is also known for introducing the idea that every substance has a haecceity (thisness), that is, a property that makes it the particular thing that it is. In this, he echoes the earlier Vaisheshika idea of a vishesha (usually translated “particularity”) which plays approximately the same role (Kaipayil 2008, 79).

5. Substance in Early Modern Philosophy

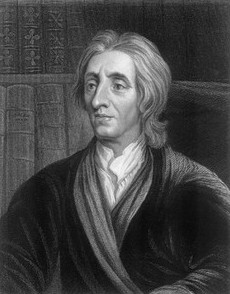

Prior to the early modern period, Western philosophers tend to adopt both Aristotle’s definition of substance in the Categories and his analysis of material substances into matter and form. In the early modern period, this practice begins to change, with many philosophers offering new characterisations of substance, or rejecting the notion of substance entirely. The most influential contribution from this period is Descartes’ independence definition of substance. Although many earlier philosophers have been interpreted as saying that substances are things that have independent existence, Descartes appears to be the first prominent thinker to say this explicitly. Descartes’ influence, respecting this and other topics, was reinforced by Antoine Arnauld and Pierre Nicole’s Port-Royal Logic, which, towards the end of the seventeenth century, took the place of Aristotle’s Categories as the leading introduction to philosophy. Important contributions to the idea of substance in this period are also made by Spinoza, Leibniz, Locke and Hume, all of whom are known for resisting some aspect of Descartes’ account of substance.

a. Descartes

Substance is one of the central concepts of Descartes’ philosophy, and he returns to it on multiple occasions. In the second set of Objections and Replies to the Meditations on First Philosophy, Descartes advances a definition of substance that resembles Aristotle’s definition of substance in the Categories. This is not surprising given that Descartes underwent formal training in Aristotelian philosophy at the Royal College of La Flèche, France. In a number of other locations, however, Descartes offers what has been called the independence definition of substance. According to the independence definition, a substance is anything that could exist by itself or, equivalently, anything that does not depend on anything else for its existence (Oeuvres, vol. 7, 44, 226; vol. 3, 429; vol. 8a, 24).

Scholars disagree about how exactly we should understand Descartes’ independence definition. Some have argued that Descartes’ view is that substances must be causally independent, in the sense that they do not require anything else to cause them to exist. Another, perhaps more popular, view is that, for Descartes, substances are modally independent, meaning that the existence of a substance does not necessitate the existence of any other entity. This interpretation itself has several variants (see Weir 2021, 281–7).

In addition to offering a new definition of substance, Descartes draws a distinction between a strict and a more permissive sense of the term. A substance in the strict sense satisfies the independence definition without qualification. Descartes claims that there is only one such substance: God. For everything else depends on God for its existence. Descartes adds, however, that we can count as created substances those things that depend only on God for their existence. Descartes claims that finite minds and bodies qualify as created substances in this sense, whereas their properties (attributes, qualities and modes in his terminology) do not.

It is possible to view Descartes’ independence definition of substance as a disambiguation of Aristotle’s definition of substance in the Categories. Aristotle says that substances do not depend, for their existence, on any other being of which they must be predicated or in which they must inhere. He does not however say explicitly whether substances depend in some other way on other things for their existence. Descartes clarifies that they do not. This is consistent with, and may even be implied by, what Aristotle says in the Categories.

In another respect, Descartes’ understanding of substance departs dramatically from the Aristotelian orthodoxy of his day. For example, while Descartes accepts Aristotle’s claim that in the case of a living human, the soul serves as the form of body, he exhibits little or no sympathy for hylomorphism beyond this. Rather than analysing material substances into matter and form like Aristotle, or substances in general into potency and act like Aquinas, Descartes proposes that every substance has, as its principal attribute, one of two properties—namely, extension or thought—and that all accidental properties of substances are modes of their principal attribute. For example, being elephant-shaped is a mode of extension, and seeing sunlight glimmer on a lake is a mode of thought. In contrast to the scholastic theory of real accidents, Descartes holds that these modes are only conceptually distinct from, and cannot exist without, the substances to which they belong.

One consequence is that Descartes appears to accept what has come to be known as the bundle view of substances: the thesis that, in his words, “the attributes all taken together are the same as the substance” (Conversation with Burman, 7). To put it another way, once we have the principal attribute of the elephant—extension—and all of the accidental attributes, such as its size, shape, texture and so on, we have everything that this substance comprises. These attributes do not need to be combined with a propertyless substratum. (The bundle view, in the relevant sense, contrasts with the substratum view, according to which a substance is composed of properties and a substratum. Sometimes, the term “bundle view” is used in a stronger sense, to imply that the properties that make up a substance could exist separately, but Descartes does not endorse the bundle view in this stronger sense.)

A further consequence is that Descartes could not accept the standard transubstantiation account of the eucharist, which depended on the theory of real accidents, and was obliged to offer a competing account.

In the late seventeenth century, two followers of Descartes, Antoine Arnauld and Pierre Nicole, set out to author a modern introduction to logic that could serve in place of the texts of Aristotle’s Organon, including the Categories. (The word “logic” is used here in a traditional sense that is significantly broader than the sense that philosophers of the early twenty-first century would attribute to it, and that includes much of what these philosophers would recognise as metaphysics.) The result was La logique ou l’art de penser, better known as the Port-Royal Logic, a work that had an enormous influence on the next two centuries of philosophy. The Port-Royal Logic offers the following definition of substance:

I call whatever is conceived as subsisting by itself and as the subject of everything conceived about it, a thing. It is otherwise called a substance. […] This will be made clearer by some examples. When I think of a body, my idea of it represents a thing or a substance, because I consider it as a thing subsisting by itself and needing no other subject to exist. (30–21)

This definition combines Aristotle’s idea that a substance is the subject of the other categories with Descartes’ claim that a substance does not need other things to exist. It is interesting to note here a shift in focus from what substances are to how they are conceived or considered. This reflects the general shift in focus from metaphysics to epistemology that characterised philosophy after Descartes.

b. Spinoza

Influential philosophers writing after Descartes tend to use Descartes’ views as a starting point, criticising or accepting them as they deem reasonable. Hence, a number of responses to Descartes’ account of substance appear in the early modern period.

In the only book published under his name in his lifetime, the 1663 Principles of Cartesian Philosophy, Spinoza endorses both Descartes’ definition of substance in the Second Replies (which is essentially Aristotle’s definition in the Categories) and the independence definition introduced in the Principles of Philosophy and elsewhere. Spinoza also endorses Descartes’ distinction between created and uncreated substances, his rejection of substantial forms and real accidents, and his division of substances into extended substances and thinking substances.

In the Ethics, published posthumously in 1677, Spinoza develops his own approach to these issues. Spinoza opens the Ethics by stating that “by substance I understand what is in itself and is conceived through itself”. Shortly after this, in the first of his axioms, he adds that “Whatever is, is either in itself or in another”. Spinoza’s contrast between substance, understood as those things that are in themselves, and non-substances, understood as those things that are in another, reflects the distinction introduced by Plato in the Sophist and taken up by countless later thinkers from antiquity onwards. As in the Port-Royal Logic, Spinoza’s initial definition of substance in terms of how it is conceived reflects the preoccupation of early modern philosophy with epistemology.

Spinoza clarifies the claim that a substance is conceived through itself by explaining that a substance is that “the conception of which does not require for its formation the conception of anything else”. This might mean that something is a substance if and only if it is possible to conceive of its existing by itself. If so, then Spinoza’s definition might be interpreted as an epistemological rewriting of Descartes’ independence definition.

Spinoza purports to show, on the basis of various definitions and axioms, that there can only be one substance, and that this substance is to be identified with God. What Descartes calls created substances are really modes of God. This conclusion is sometimes represented as a radical departure from Descartes. This is misleading, however. For Descartes also holds that only God qualifies as a substance in the strict sense of the word “substance”. To this extent, Spinoza is no more monistic than Descartes.

Spinoza’s Ethics does, however, depart from Descartes in (i) not making use of a category of created substances, and (ii) emphasising that those things that Descartes would class as created substances are modes of God. Despite this, Spinoza’s theory is not obviously incompatible with the existence of created substances in Descartes’ sense of the term, even if he does not make use of the category himself. It is plausibly a consequence of Descartes’ position that created substances are, strictly speaking, modes of God, even if Descartes does not state this explicitly.

c. Leibniz

In his Critical Thoughts on Descartes’ Principles of Philosophy, Leibniz raises the following objection to Descartes’ definition of created substances as things that depend only on God for their existence:

I do not know whether the definition of substance as that which needs for its existence only the concurrence of God fits any created substance known to us. […] For not only do we need other substances; we need our own accidents even much more. (389)

Leibniz does not explicitly explain here why substances should need other substances, setting aside God, for their existence. Still, his claim that substances need their own accidents is an early example of an objection that has had significant influence on twentieth- and twenty-first-century literature on substance. According to this objection, nothing could satisfy Descartes’ independence definition of substance because every candidate substance (an elephant or a soul, for example) depends for its existence on its own properties. This objection is further discussed in section 6.

In the Discourse on Metaphysics, Leibniz does provide a reason for thinking that created substances need other substances to exist. There, he begins by accepting something close to Aristotle’s definition of substance in the Categories: a substance is something of which other things are predicated, but which is not itself predicated of anything else. However, Leibniz claims that this characterisation is insufficient, and sets out a novel theory of substance, according to which the haecceity of a substance includes everything true of it (see section 4.b for the notion of haecceity). Accordingly, Leibniz holds that from a perfect grasp of the concept of a particular substance, one could derive all other truths about it.

It is not obvious how Leibniz arrives at this unusual conception of substance, but it is clear that if the haecceity of one substance includes everything that is true of it, this will include the relationships in which it stands to every other substance. Hence, on Leibniz’s view, every substance turns out to necessitate, and so to depend modally on, every other for its existence, a conclusion that contrasts starkly with Descartes’ position.

Leibniz’s view illustrates the fact that it is possible to accept Aristotle’s definition of substance in the Categories while rejecting Descartes’ independence definition. Leibniz clearly agrees with Aristotle that a substance does not have to be said of or to exist in something in the way that properties do. However, he holds that substances depend for their existence on other things in a way that contradicts Descartes’ independence definition.

Leibniz’s enormous corpus makes a number of other distinctive claims about substances. The most important of these are the characterisation of substances as unities and as things that act, both of which can be found in his New Essays on Human Understanding. These ideas have precursors as far back as Aristotle, but they receive special emphasis in Leibniz’s work.

d. British Empiricism

Section 1 mentions that since the seventeenth century, a new usage of the term “substance” becomes prevalent, on which it does not refer to an object-like thing, such as an elephant, but to an underlying substratum that must be combined with properties to yield an object-like thing. On this usage, an elephant is a combination of properties such as its shape, size and colour, and the underlying substance in which these properties inhere. The substance in this sense is often described as having no properties in itself, and therefore resembles Aristotelian prime matter more than the objects that serve as examples of substances in earlier traditions.

This new usage of “substance” is standardly traced back to Locke’s Essay Concerning Human Understanding, where he states that:

Substance [is] nothing, but the supposed, but unknown support of those qualities, we find existing, which we imagine cannot subsist, sine re substante, without something to support them, we call that support substantia; which, according to the true import of the word, is in plain English, standing under, or upholding. (II.23.2)

This and similar statements in Locke’s Essay initiated a longstanding tradition in which British empiricists, including Berkeley, Hume, and Russell, took for granted that the term “substance” typically refers to a propertyless substratum and criticised the concept on that basis.

Scholars debate whether Locke actually intended to identify substances with propertyless substrata. There exist two main interpretations. On the traditional interpretation, associated with Leibniz and defended by Jonathan Bennett (1987), Locke uses the word “substance” to refer to a propertyless substratum that we posit to explain what supports the collections of properties that we observe, although Locke is sceptical of the value of this idea, since it stands for something whose nature we are entirely ignorant of. (Those who believe that Locke intended to identify substances with propertyless substrata disagree regarding the further issue of whether Locke reluctantly accepts or ultimately rejects such entities.)

The alternative interpretation, defended by Michael Ayers (1977), agrees that Locke identifies substance with an unknown substratum that underlies the collections of properties we observe. However, on this view, Locke does not regard the substratum as having no properties in itself. Rather, he holds that these properties are unknown to us, belonging as they do to the imperceptible microstructure of their bearer. This microstructure is posited to explain why a given cluster of properties should regularly appear together. On this reading, Locke’s substrata play a similar role to Aristotle’s secondary substances or Jain vertical universals in that they are the essences that explain the perceptible properties of objects. The principal advantage of this interpretation is that it explains how Locke can endorse the idea of a substratum while recognising the (apparent) incoherence of the idea of something having no properties in itself. The principal disadvantages of this interpretation include the meagre textual evidence in its favour and its difficulty accounting for Locke’s disparaging comments about the idea of a substratum.

Forrai (2010) suggests that the two interpretations of Locke’s approach to substances can be reconciled if we suppose that Locke takes our actual idea of substance to be that of a propertyless substratum while holding that we only think of that substratum as propertyless because we are ignorant of its nature, which is in fact that of an invisible microstructure.

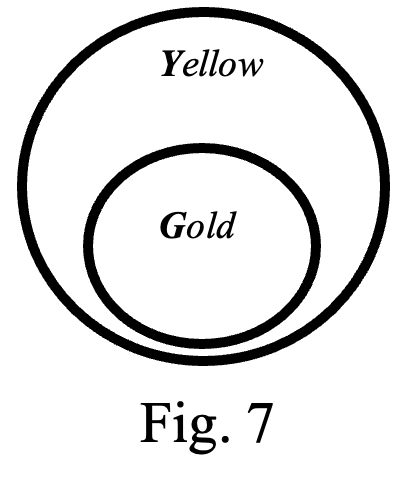

In the passages traditionally interpreted as discussing the idea of a propertyless substratum, Locke refers to it as the “idea of substance in general”. In other passages, Locke discusses our ideas of “particular sorts of substances”. Locke’s particular sorts of substances resemble the things referred to as substances in earlier traditions. His examples include humans, horses, gold, and water. These, Locke claims, are in fact just collections of simple ideas that regularly appear together:

We come to have the Ideas of particular sorts of Substances, by collecting such Combinations of simple Ideas, as are by Experience and Observation of Men’s Senses taken notice of to exist together, and are therefore supposed to flow from the particular internal Constitution, or unknown Essence of that Substance. (Essay, II.23.3)

The idea of an elephant, on this view, is really just a collection comprising the ideas of a certain colour, a certain shape, and so on. Locke seems to take the view that the distinctions we draw between different sorts of substances are somewhat arbitrary and conventional. That is, the word “elephant” may not refer to what the philosophers of the twentieth and twenty-first centuries would consider a natural kind. Hence, substances in the traditional sense turn out to be subject-dependent in the sense that the identification of some collection of ideas as a substance is not an objective, mind-independent fact, but depends on arbitrary choices and conventions.

Locke’s comments about substance, particularly those traditionally regarded as identifying substances with propertyless substrata, had a great influence on Berkeley and Hume, both of whom followed Locke in treating substances as substrata and in criticising the notion on this basis, while granting the existence of substances in the deflationary sense of subject-dependent collections of ideas.

Berkeley’s position is distinctive in that he affirms an asymmetry between perceptible substances, such as elephants and vases, and spiritual substances, such as human and divine minds. Berkeley agrees with Locke that our ideas of perceptible substances are really just collections of ideas and that we are tempted to posit a substratum in which these ideas exist. Unlike Locke, Berkeley explicitly says that in the case of perceptible objects at least, we should posit no such thing:

If substance be taken in the vulgar sense for a combination of qualities such as extension, solidity, weight, and the like […] this we cannot be accused of taking away; but if it be taken in the philosophic sense for the support of accidents or quantities without the mind, then I acknowledge that we take it away. (Principles, 1.37)

Berkeley’s rejection of substrata in the case of material objects is not necessarily due to a rejection of the idea of substrata in general, however. It may be that Berkeley rejects substrata for material substances only, and does so solely on the basis that, according to his idealist metaphysics, the properties that make up perceptible objects really inhere in the minds of their perceivers.

Whether Berkeley thinks that spiritual substances involve propertyless substrata is hard to judge, and it is not clear that Berkeley maintains a consistent view on this issue. On the one hand, Berkeley’s published criticisms of the idea of a substratum tend to focus exclusively on material objects, suggesting that he is not opposed to the existence of a substratum in the case of minds. On the other hand, several passages in Berkeley’s notebooks assert that there is nothing more to minds than the perceptions they undergo, suggesting that Berkeley rejects the idea of substrata in the case of minds as well (see in particular his Notebooks, 577 and 580). The task of interpreting Berkeley on this point is complicated by the fact that the relevant passages are marked with a “+”, which some but not all scholars interpret as indicating Berkeley’s dissatisfaction with them.

Hume’s Treatise of Human Nature echoes Locke’s claim that we have no idea of what a substance is and that we have only a confused idea of what a substance does. Although Hume does not explicitly state that these criticisms are intended to apply to the idea of substances as propertyless substrata, commentators tend to agree that this is his intention (see for example Baxter 2015). Hume seems to agree with Locke (as traditionally interpreted) that we introduce the idea of a propertyless substratum in order to make sense of the unity that we habitually attribute to what are in fact mere collections of properties that regularly appear together. Hume holds that we can have no idea of this substratum because any such idea would have to come from some sensory or affective impression while, in fact, ideas derived from sensory and affective impressions are always of accidents—that is, of properties.

Hume grants that we do have a clear idea of substances understood as Descartes defines them, that is, as things that can exist by themselves. However, Hume asserts that this definition applies to anything that we can think of, and hence, that to call something a substance in this sense is not to distinguish it from anything else.

Hume further argues that we can make no sense of the idea of the inherence relation that is supposed to exist between properties and the substances to which they belong. For the inherence relation is taken to be the relation that holds between an accident and something without which it could not exist (as per Aristotle’s description of inherence in the Categories, for example). According to Hume, however, nothing stands in any such relation to anything else. For he makes it an axiom that anything that we can distinguish in thought can exist separately in reality. It follows that not only do we have no idea of a substratum, but no such thing can exist, either in the case of perceptible objects or in the case of minds. For a substratum is supposed to be that in which properties inhere. It is natural to see Hume’s arguments on this topic as the culmination of Locke’s more circumspect criticisms of substrata.

It follows from Hume’s arguments that the entities that earlier philosophers regarded as substances, such as elephants and vases, are in fact just collections of ideas, each member of which could exist by itself. Hume emphasises that, as a consequence, the mind really consists in successive collections of ideas. Hence, Hume adopts a bundle view of the mind and other putative substances not only in the moderate sense that he denies that minds involve a propertyless substratum, but in the extreme sense that he holds that they are really swarms of independent entities.

Hume’s rejection of complex substances, and in particular his emphasis on the nonexistence of a substantial mind, closely resembles the criticisms of substance advanced by Buddhist philosophers and described in section 2. It is possible that Hume was influenced by Buddhist thought on this and other topics during his stay at the Jesuit College of La Flèche, France, in 1735–37, through the Jesuit missionary Charles François Dolu (Gopnik 2009).

Although Kant was not himself a British empiricist (though see Stephen Priest’s (2007, 262 fn. 40) protest on this point), he developed an approach to substance in the tradition of Locke, Berkeley and Hume, with a characteristically Kantian twist. Kant endorses a traditional account of substance, according to which substances are subjects of predication and are distinguished by their capacity to persist through change. However, Kant adds that the category of substance is something that the understanding imposes upon experience, rather than something derived from our knowledge of things in themselves. For Kant, the category of substance is, therefore, a necessary feature of experience, and to that extent, it has a kind of objectivity. Kant nonetheless agrees with Locke, Berkeley (with respect to material substances) and Hume that substances are subject-dependent. (See Messina (2021) for a complication concerning whether we might nonetheless be warranted in applying this category to things in themselves.)

While earlier thinkers beginning with Aristotle asserted that substances can persist through change, Kant goes further, claiming that substances exist permanently and that their doing so is a necessary condition for the unity of time. It seems to follow that for Kant, composites such as elephants or vases cannot be substances, since they come into and go out of existence. Given that Kant also rejects the existence of indivisible atoms in his discussion of the second antinomy, the only remaining candidate for a material substance in Kant appears to be matter taken as a whole. For an influential exposition, see Strawson (1997).

6. Substance in Twentieth-Century and Early-Twenty-First-Century Philosophy

The concept of substance lost its central place in philosophy after the early modern period, partly as a result of the criticisms of the British empiricists. However, the twentieth and early twenty-first centuries have seen a revival of interest in the idea, with several philosophers arguing that we need the concept of substance to account for the difference between object-like and property-like things, to account for which entities are fundamental, or to address a range of neighbouring metaphysical issues. Discussions have centred on two main themes: the criteria for being a substance, and the structure of substances. O’Conaill (2022) provides a detailed overview of both. Moreover, in the late twentieth century, the concept of substance gained an important role in philosophy of mind, where it has been used to mark the difference between two kinds of mind-body dualism: substance dualism and property dualism.

a. Criteria for Being a Substance

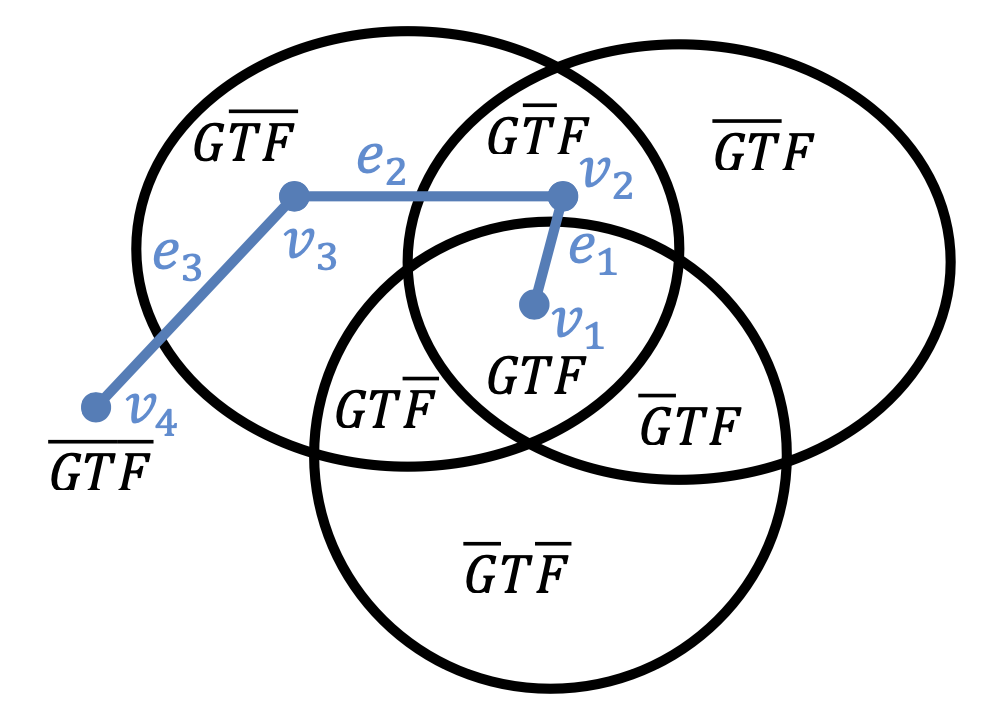

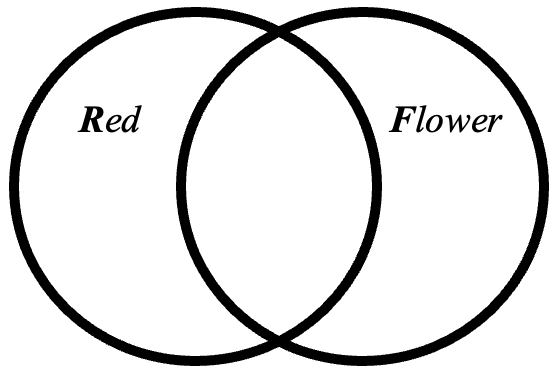

As noted at the beginning of this article, the term “substance” has two main uses in philosophy. Some philosophers use this word to pick out those things that are object-like in contrast to things that are property-like (or, for some philosophers, event-like or stuff-like). Others use it to pick out those things that are fundamental, in contrast to things that are non-fundamental. Both uses derive from Aristotle’s Categories, which posits that the object-like things are the fundamental things. For some thinkers, however, object-like-ness and fundamentality come apart. When philosophers attempt to give precise criteria for being a substance, they tend to have one of two targets in mind. Some have in mind the task of stating what exactly makes something object-like, while others have in mind the task of stating what exactly makes something fundamental. Koslicki (2018, 164–7) describes the two approaches in detail. Naturally, this makes a difference to which criteria for being a substance seem reasonable, and occasionally this has resulted in philosophers talking past one another. Nonetheless, the hypothesis that the object-like things are the fundamental things is either sufficiently attractive, or sufficiently embedded in philosophical discourse, that there exists considerable overlap between the two approaches.

The most prominent criterion for being a substance in early-twenty-first-century philosophy is independence. Many philosophers defend, and even more take as a starting point, the idea that what makes something a substance is the fact that it does not depend on other things. Philosophers differ, however, on what kind of independence is relevant here, and some have argued that independence criteria are unsatisfactory and that some other criterion for being a substance is needed.

The most common independence criteria for being a substance characterise substances in terms of modal (or metaphysical) independence. One thing a is modally independent of another thing b if and only if a could exist in the absence of b. The idea that substances are modally independent is attractive for two reasons. First, it seems that properties, such as shape, size or colour, could not exist without something they belong to—something they are the shape, size or colour of. In other words, property-like things seem to be modally dependent entities. By contrast, object-like things, such as elephants or vases, do not seem to depend on other things in this way. An elephant need not be the elephant of some elephant-having being. Therefore, one could argue for the claim that object-like things differ from property-like things by saying that the former are not modally dependent on other entities, while the latter are. Secondly, modally independent entities are arguably more fundamental than modally dependent entities. For example, it is tempting to say that modally independent entities are the basic elements that make up reality, whereas modally dependent entities are derivative aspects or ways of being that are abstracted from the modally independent entities.

Though attractive, the idea that substances are modally independent faces some objections. The most influential objection says that nothing is modally independent because nothing can exist without its own parts and/or properties (see Weir (2021, 287–291) for several examples). For example, an elephant might not have to be the elephant of some further, elephant-having being, but an elephant must have a size and shape, and countless material parts. An elephant cannot exist without a size, a shape and material parts, and so there is a sense in which an elephant is not modally independent of these things.