Ordinary Language Philosophy

Ordinary Language philosophy, sometimes referred to as ‘Oxford’ philosophy, is a kind of ‘linguistic’ philosophy. Linguistic philosophy may be characterized as the view that a focus on language is key to both the content and method proper to the discipline of philosophy as a whole (and so is distinct from the Philosophy of Language). Linguistic philosophy includes both Ordinary Language philosophy and Logical Positivism, developed by the philosophers of the Vienna Circle (for more detail see Analytic Philosophy section 3). These two schools are inextricably linked historically and theoretically, and one of the keys to understanding Ordinary Language philosophy is, indeed, understanding the relationship it bears to Logical Positivism. Although Ordinary Language philosophy and Logical Positivism share the conviction that philosophical problems are ‘linguistic’ problems, and therefore that the method proper to philosophy is ‘linguistic analysis’, they differ vastly as to what such analysis amounts to, and what the aims of carrying it out are.

Ordinary Language philosophy is generally associated with the (later) views of Ludwig Wittgenstein, and with the work done by the philosophers of Oxford University between approximately 1945 and 1970. The origins of Ordinary Language philosophy reach back, however, much earlier than 1945 to work done at Cambridge University, usually marked as beginning in 1929 with the return of Wittgenstein, after some time away, to the Cambridge faculty. It is often noted that G. E. Moore was a great influence on the early development of Ordinary Language philosophy (though not an Ordinary Language philosopher himself), insofar as he initiated a focus on and interest in ‘commonsense’ views about reality. Major figures of Ordinary Language philosophy include (in the early phases) John Wisdom, Norman Malcolm, Alice Ambrose, Morris Lazerowitz, and (in the later phase) Gilbert Ryle, J. L. Austin and P. F. Strawson, among others. However, it is important to note that the Ordinary Language philosophical view was not developed as a unified theory, nor was it an organized program, as such. Indeed, the figures we now know as ‘Ordinary Language’ philosophers did not refer to themselves as such – it was originally a term of derision, used by its detractors. Ordinary Language philosophy is (besides an historical movement) foremost a methodology – one which is committed to the close and careful study of the uses of the expressions of language, especially the philosophically problematic ones. A commitment to this methodology as that which is proper to, and most fruitful for, the discipline of philosophy, is what unifies an assortment of otherwise diverse and independent views.

Table of Contents

- Introduction
- Cambridge
  - Analysis and Formal Logic
  - Logical Atomism
  - Logical Positivism and Ideal Language
  - Ordinary Language versus Ideal Language
- Ordinary Language Philosophy: Nothing is Hidden
  - The Misuses of Language
  - Philosophical Disputes and Linguistic Disputes
  - Ordinary Language is Correct Language
  - The Paradigm Case Argument
  - A Use-Theory of Linguistic Meaning
- Oxford
  - Ryle
  - Austin
  - Strawson
- The Demise of Ordinary Language Philosophy: Grice
- Contemporary Views
- References and Further Reading
  - Analysis and Formal Logic
  - Logical Atomism
  - Logical Positivism and Ideal Language
  - Early Ordinary Language Philosophy
  - The Paradigm Case Argument
  - Oxford Ordinary Language Philosophy
  - Criticism of Ordinary Language Philosophy
  - Contemporary views
  - Historical and Other Commentaries

1. Introduction

For Ordinary Language philosophy, at issue is the use of the expressions of language, not expressions in and of themselves. So, at issue is not, for example, ordinary versus (say) technical words; nor is it a distinction based on the language used in various areas of discourse, for example academic, technical, scientific, or lay, slang or street discourses – ordinary uses of language occur in all discourses. It is sometimes the case that an expression has distinct uses within distinct discourses, for example, the expression ‘empty space’. This may have both a lay and a scientific use, and both uses may count as ordinary, as long as it is quite clear which discourse is in play, and thus which of the distinct uses of the expression is in play. Though the uses are connected, the difference in use of the expression in different discourses signals a difference in the sense with which it is used, on the Ordinary Language view. One use, say the use in physics, in which it refers to a vacuum, is distinct from its lay use, in which it refers rather more flexibly to, say, a room with no objects in it, or an expanse of land with no buildings or trees. However, on this view, one sense of the expression, though more precise than the other, would not do as a replacement for the other; for the lay use of the term is perfectly adequate for the uses it is put to, and the meaning of the term in physics would not allow speakers to express what they mean in these other contexts.

Thus, the way to understand what is meant by the ‘ordinary use of language’ is to hold it in contrast, not with ‘technical’ use, but with ‘non-standard’ or ‘non-ordinary’ use, or uses that are not in accord with an expression’s ‘ordinary meaning’. Non-ordinary uses of language are thought to be behind much philosophical theorizing, according to Ordinary Language philosophy: particularly where a theory results in a view that conflicts with what might be ordinarily said of some situation. Such ‘philosophical’ uses of language, on this view, create the very philosophical problems they are employed to solve. This is often because, on the Ordinary Language view, they are not acknowledged as non-ordinary uses, and are instead passed off as simply more precise (or ‘truer’) versions of the ordinary use of some expression – thus suggesting that the ordinary use of some expression is deficient in some way. But according to the Ordinary Language position, non-ordinary uses of expressions simply introduce new uses of expressions. Should criteria for their use be provided, according to the Ordinary Language philosopher, there is no reason to rule them out.

Methodologically, ‘attending to the ordinary uses of language’ is held in general to be in opposition to the philosophical project, begun by the Logical Atomists (for more detail on this movement, see below, and the article on Analytic Philosophy, section 2d) and taken up and developed most enthusiastically by the Logical Positivists, of constructing an ‘ideal’ language. An ideal language is supposed to represent reality more precisely and perspicuously than ordinary language. Ordinary Language philosophy emerged in reaction against certain views surrounding this notion of an ideal language. The ‘Ideal Language’ doctrine (which reached maturity in Logical Positivism) sees ‘ordinary’ language as obstructing a clear view of reality – it is thought to be opaque, vague and misleading, and thus stands in need of reform (at least insofar as it is to deliver philosophical truth).

Contrary to this view, according to Ordinary Language philosophy, it is the attempt to construct an ideal language that leads to philosophical problems, since it involves the non-ordinary uses of language. The key view to be found in the metaphilosophy of the Ordinary Language philosophers is that ordinary language is perfectly well suited to its purposes, and stands in no need of reform – though it can always be supplemented, and is also in a constant state of evolution. On this line of thought, the observation of and attention to the ordinary uses of language will ‘dissolve’ (rather than ‘solve’) philosophical problems – that is, will show them not to have been genuine problems in the first place, but ‘misuses’ of language.

On the positive side, the analysis of the ordinary uses of language may actually lead to philosophical knowledge, according to at least some versions of the view. But, the caveat is, the knowledge proper to philosophy is knowledge (or, rather, improved understanding) of the meanings of the expressions we use (and thus, what we are prepared to count as being described by them), or knowledge of the ‘conceptual’ structures our use of language reflects (our ‘ways of thinking about and experiencing things’). Wittgenstein himself would have argued that this ‘knowledge’ is nothing new, that it was available to all of us all along – all we had to do was notice it through paying proper attention to language use. Later Ordinary Language philosophers such as Strawson, however, argued that this did count as new knowledge – for it made possible new understanding of our experience of reality. Hence, on this take, philosophy does not merely have a negative outcome (the ‘dissolution’ of philosophical problems), and Ordinary Language philosophy need not be understood as quietist or even nihilist as has been sometimes charged. It does, however, turn out to be a somewhat different project to that which it is traditionally conceived to be.

2. Cambridge

The genesis of Ordinary Language philosophy occurred in the work of Wittgenstein after his 1929 return to Cambridge. This period, roughly up to around 1945, represents the early period of Ordinary Language philosophy that we may characterize as ‘Wittgensteinian’. We shall examine these roots first, before turning to its later development at Oxford (which we will continue to call ‘Oxford’ philosophy for convenience) – development that saw significant evolution and variation in the view.

The Cambridge period may be characterized as ‘Wittgensteinian’ because the Ordinary Language philosophers of the time were close followers of Wittgenstein. Many were his pupils at Cambridge, or associates of those pupils who later traveled to other parts of the world transmitting Wittgenstein’s thought, including Ambrose, Lazerowitz, Malcolm, Gasking, Paul, Von Wright, Black, Findlay, Bouwsma and Anscombe to name a few. (See P. M. S. Hacker (1996) for a more detailed historical account, and biographical details, of the Cambridge and Oxford associates of Wittgenstein.) They cleaved closely to the views they believed they found in Wittgenstein’s work, much of which was distributed about Cambridge, and eventually Oxford, as manuscripts or lecture notes that were not published until some time later (for example The Blue and Brown Books (1958) and the seminal Philosophical Investigations (1953)).

These ‘Wittgensteinians’ developed and propounded certain ideas and views, and indeed arguments and theories, of which Wittgenstein himself may not have approved (nor did Wittgenstein himself ever accept any label for his work, let alone ‘Ordinary Language’ philosophy). The Wittgensteinians saw themselves as developing and extending Wittgenstein’s views, despite the fact that the key principle in Wittgenstein’s work (both earlier and later) was that philosophical ‘theses’, as such, cannot be stated. Wittgenstein steadfastly denied that his work amounted to a philosophical theory because, according to him, philosophy cannot ‘explain’ anything; it may only ‘describe’ what is anyway the case (Philosophical Investigations, sections 126-128). The Wittgensteinians developed, more explicitly than Wittgenstein did, arguments that tried to explain and justify the method of appeal to ordinary language. Nevertheless, it is possible to understand what they were doing as remaining faithful to the Wittgensteinian tenet that one cannot propound philosophical theses insofar as claims about meaning are not in themselves theses about meaning. Indeed, the view was that the appeal to the ordinary uses of language is an act of reminding us of how some term or expression is used anyway – to show its meaning rather than explain it.

The first stirrings of the Ordinary Language views emerged as a reaction against the prevailing Logical Atomist, and later, Logical Positivist views that had been initially (ironically) developed by Wittgenstein himself, and published in his Tractatus Logico-Philosophicus in 1921. In order to understand this reaction, we must take a brief look at the development of Ideal Language philosophy, which formed the background against which Ordinary Language philosophy arose.

a. Analysis and Formal Logic

Around the turn of the 20th century, in the earliest days of the development of Analytic philosophy, Russell and Moore (in particular) developed the methods of ‘analysis’ of problematic notions. These methods involved, roughly, ‘re-writing’ a philosophically problematic term or expression so as to render it ‘clearer’, or less problematic, in some sense. This itself involved a focus on language – or on the ‘proposition’ – as part of the methodology of philosophy, which was quite new at the time.

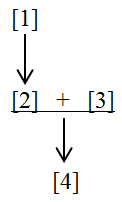

This new spirit of precision and rigor paid particular attention to Gottlob Frege’s groundbreaking work in formal logic (1879), which initiated the development of the truth-functional view of language. A logical system is truth-functional if all its sentential operators (words such as ‘and’, ‘or’, ‘not’ and ‘if…then’) are functions that take truth-values as arguments and yield truth-values as their output. The conception of a truth-functional language is deeply connected with that of the truth-conditional conception of meaning for natural language. On this view, the truth-condition of a sentence is its meaning – it is that in virtue of which an expression has a meaning – and the meaning of a compound sentence is determined by the meanings of its constituent parts, that is the words that compose it. This is known as the principle of compositionality (see Davidson’s Philosophy of Language, section 1a, i).
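The idea of a truth-functional operator can be made concrete with a small sketch. The Python below is only an illustration: the function names are hypothetical, and ‘if…then’ is read as the material conditional, which is the standard truth-functional treatment rather than anything argued for in this article. Each operator takes truth-values as arguments and yields a truth-value, and the value of a compound is fixed entirely by the values of its parts.

```python
from itertools import product

# Each sentential operator is modelled as a function from truth-values
# to a truth-value. All names here are illustrative assumptions.

def not_(p: bool) -> bool:
    return not p

def and_(p: bool, q: bool) -> bool:
    return p and q

def or_(p: bool, q: bool) -> bool:
    return p or q

def if_then(p: bool, q: bool) -> bool:
    # Material conditional: false only when the antecedent is true
    # and the consequent is false.
    return (not p) or q

def truth_table(operator):
    """Pair every assignment of truth-values to a two-place operator with its output."""
    return [(p, q, operator(p, q)) for p, q in product([True, False], repeat=2)]

def compound(p: bool, q: bool) -> bool:
    # 'If p and q, then not (not-p or not-q)': the value of the whole
    # is computed from the values of the parts and nothing else.
    return if_then(and_(p, q), not_(or_(not_(p), not_(q))))

if __name__ == "__main__":
    for row in truth_table(if_then):
        print(row)
    print(all(compound(p, q) for p, q in product([True, False], repeat=2)))
```

Because `compound` is built only from truth-functions, its value under any assignment can be computed mechanically; here it comes out true on every row, which is what it means for a compound to be a tautology.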

Although formal symbolic logic was developed initially in order to analyze and explore the structure of arguments, in particular the structure of ‘validity’, its success soon led many to see applications for it within the realm of natural language. Specifically, the thought began to emerge that the logic that was being captured in ever more sophisticated systems of symbolic logic was either the structure actually hidden beneath natural, ordinary language, or the structure which, if not present in ordinary language, ought to be. What emerges in connection with the development of the truth-functional and truth-conditional view of language is the idea that the surface form of propositions may not represent their ‘true’ (or truth-functional) logical form. Indeed, Russell’s ‘On Denoting’ in 1905, which proposed a thesis called the Theory of Descriptions, argues just that: that underlying the surface grammar of ordinary expressions was a distinct logical form. His method of the ‘logical analysis of language’, based on the attempt to ‘analyze’ (or ‘re-write’) the propositions of ordinary language into the propositions of an ideal language, became known as the ‘paradigm of philosophy’ (as described by Ramsey 1931, p. 321).

Both Russell and Frege recognized that natural language did not always, on the surface at any rate, behave like symbolic logic. Its elementary propositions, for example, were not always determinately true or false; some were not truth-functional, or compositional, at all (such as those in ‘opaque contexts’ like “Mary believes she has a little lamb”) and so on. In ‘On Denoting’ Russell proposed that despite misleading surface appearances, many (ideally, all) of the propositions of ordinary language could nevertheless be rewritten as transparently truth-functional propositions (that is, those that can be the arguments of truth-functions, and whose values are determinately either true or false, as given by their truth-conditions). That is, he argued, they could be ‘analyzed’ in such a way as to reveal the true logical form of the proposition, which may be obscured beneath the surface grammatical form of the proposition.

The proposition famously treated to Russell’s logical analysis in ‘On Denoting’ is the following: “The present King of France is bald.” We do not know how to treat this proposition truth-functionally if there is no present King of France – it does not have a clear truth-value in this case. But all propositions that can be the arguments of truth-functions must be determinately either true or false. The surface grammar of the proposition appears to claim of some object X, that it is bald. Therefore, it would appear that the proposition is true or false depending on whether X is bald or not bald. But there is no X. Russell’s achievement lies in his analysis of this proposition in (roughly and non-symbolically) the following way, which rendered it truth-functional (such that the truth-value of the whole is a function of the truth-values of the parts) after all: instead of “The present King of France is bald,” we read (or analyze the proposition as) “There is one, and only one, object X such that X is the present King of France (and) X is bald.” Now the proposition is composed of elementary propositions governed by an existential quantifier (plus a ‘connective’ – the word ‘and’), which can now be treated perfectly truth-functionally, and can return a determinately true or false value. Since the elementary proposition that claims that there is such an X is straightforwardly false, by the rules of the propositional calculus the entire complex proposition is straightforwardly false. This had the result that it was no longer necessary to agonize over whether something that does not exist can be bald or not!
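For readers who want the symbolic counterpart of the paraphrase above, the analysis is standardly rendered along the following lines. The predicate letters are assumptions introduced here for illustration: K(x) for ‘x is a present King of France’ and B(x) for ‘x is bald’.

```latex
\exists x \, \bigl( K(x) \;\land\; \forall y \, ( K(y) \rightarrow y = x ) \;\land\; B(x) \bigr)
```

The first conjunct asserts existence, the second uniqueness, and the third predicates baldness. Since nothing satisfies K(x), no object satisfies the conjunction, and so the whole existentially quantified proposition comes out false rather than lacking a truth-value.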

b. Logical Atomism

To the view that logical analysis would reveal a ‘logically perfect’ truth-functional language that lurked beneath ordinary language (but was obscured by it), Russell added that the most elementary constituents of the true logical form of a proposition (‘logical atoms’) correspond with the most elementary constituents of reality. This combination of views constituted his Logical Atomism (for more detail see Analytic Philosophy, section 2d). According to Russell, the simple parts of propositions represent the simple parts of the world. And just as more complex propositions are built up out of simpler ones, so the complex facts and objects in reality are built up out of simpler ones (Russell 1918).

Thus, the notion of the ‘true logical form’ of propositions was not only thought to be useful for working out how arguments functioned, and whether they were valid, but for a wider metaphysical project of representing how the world really is. The essence of Russellian Logical Atomism is that once we analyze language into its true logical form, we can simply read off from it the ultimate ontological structure of reality. The basic assumption at work here, which formed the foundation for the Ideal Language view, is that the essential and fundamental purpose of language is to represent the world. Therefore, the more ‘perfect’, that is ‘ideal’, the language, the more accurately it represents the world. A logically perfect language is, on this line of thought, a literal mirror of metaphysical reality. Russell’s work encouraged the view that language is meaningful in virtue of this underlying representational and truth-functional nature.

The Ideal Language view gave weight to the growing suspicion that ordinary language actually obscured our access to reality, because it obscured true logical form. Perhaps, the thought went, ordinary language does not represent the world as it really is. For example, the notion of a non-existent entity, suggested in the proposition about ‘the present King of France’, was simply one which arose because of the misleading surface structure of ordinary language, which, when properly ‘analyzed’, revealed there was no true ontological commitment to such a paradoxical entity at all.

Wittgenstein, in his Tractatus, took these basic ideas of Logical Atomism to a more sophisticated level, but also provided the materials for the development of the views by the Logical Positivists. An ideal language, according to Wittgenstein, was understood to actually share a structure with metaphysical reality. On this view, between language and reality there was not mere correspondence of elements, but isomorphism of form – reality shares the ‘logical form’ of language, and is pictured in it. Wittgenstein’s version of Atomism became known as the ‘picture theory’ of language, and ultimately became the focus of the view he later rejected. A rather confounding part of Wittgenstein’s argument in the Tractatus is that although this picturing relation between reality and language exists, it cannot itself be represented, nor, therefore, spoken of in language. Thus, according to Wittgenstein, the entire Tractatus attempts to say what cannot be said, and is therefore a form of ‘nonsense’ – once its lessons are absorbed, he advised, it must then be rejected, like a ladder to be kicked away once one has stepped up it to one’s destination (1921, section 6.54).

c. Logical Positivism and Ideal Language

The Vienna Circle, where the doctrine of Logical Positivism (or ‘Logical Empiricism’ as it was sometimes called) was developed, included philosophers such as Schlick, Waismann, Neurath, and Carnap, among others. (See Coffa 1991, chapter 13 for an authoritative history of this period in Vienna.) This group was primarily interested in the philosophy of science and epistemology. Unlike the Cambridge analysts, however, who merely thought metaphysics had to be done differently, that is more rigorously, the Logical Positivists thought it should not be done at all. They acquired this rejection of metaphysics from Wittgenstein’s Tractatus.

According to the Tractatus, properly meaningful propositions divided into two kinds only: ‘factual’ propositions which represented, or ‘pictured’, reality and the propositions of logic. The latter, although not meaningless, were nevertheless all tautologous – empty of empirical, factual content. The propositions that fell into neither of these classes – according to Wittgenstein, the propositions of his own Tractatus – were ‘meaningless’ nonsense because, he claimed, they tried to say what could not be said. The Positivists, however, did not accept this part of Wittgenstein’s view, namely that what defined ‘nonsense’ was trying to say what could not be said. What they did believe was that metaphysical (and other) propositions are nonsense because they cannot be confirmed – there exists no procedure by which they may be verified. For the Positivists, ‘pseudo-propositions’ are those which present themselves as if they were factual propositions, but which are, in fact, not. The proposition, for example, “All atomic propositions correspond to atomic facts” looks like a scientific, factual claim such as “All physical matter is composed of atoms.” But the propositions are not of the same order, according to the Positivists – the former is masquerading as a scientific proposition, but in fact, it is not the sort of proposition that we know how to confirm, or even test. The former has, according to the view, no ‘method of verification’.

Properly ‘empirical’ propositions are those, according to the Positivists, that are ‘about’ the world: they are ‘factual’, have ‘content’, and their truth-values are determined strictly by the way the world is; but most crucially, they can be confirmed or disconfirmed by empirical observation of the world (testing, experimenting, observing via instruments, and so forth). On the other hand, the ‘logical’ propositions, and any that could be reduced to logical propositions (for example, analytic propositions), were not ‘about’ anything: they determined the form of propositions and structured the body of the properly empirical propositions of science. The so-called ‘Empiricist Theory of Meaning’ is, thus, the view that ‘the meaning of a proposition is its method of verification’, and the so-called ‘Verification Principle’ is the doctrine that if a proposition cannot be empirically verified, and it is not, or is not reducible to, a logical proposition, it is therefore meaningless (see Carnap 1949, p. 119).

Logical Positivism cemented the Ideal Language view insofar as it accepted all of the elements we have identified: the view that ordinary language is misleading, and that underlying the vagueness and opacity of ordinary language is a precise and perspicuous language that is truth-functional and truth-conditional. From these basic ideas emerged the notion that a meaningful language is meaningful in virtue of having a systematic, and thus formalizable, syntactic and semantic structure, which, although it is often obscured in ordinary language, could be revealed with proper philosophical and logical analysis.

It is in opposition to this overall picture that Ordinary Language philosophy arose. Ideal language came to be seen as thoroughly misleading as to the true structure of reality. As Alice Ambrose (1950) noted, ideal language was by this time “…[condemned] as being seriously defective and failing to do what it is intended to do. [This view] gives rise to the idea that [ordinary] language is like a tailored suit that does not fit” (pp. 14-15). Contrary to this notion, according to Ambrose, ordinary language is the very paradigm of meaningfulness. Wittgenstein, for example, said in the Philosophical Investigations that,

On the one hand it is clear that every sentence in our language ‘is in order as it is’. That is to say, we are not striving after an ideal, as if our ordinary vague sentences had not yet got a quite unexceptionable sense, and a perfect language awaited construction by us. – On the other hand it seems clear that where there is sense there must be perfect order. – So there must be perfect order even in the vaguest sentence. (Section 98)

d. Ordinary Language versus Ideal Language

It is notable that, methodologically, Ideal and Ordinary Language philosophy both placed language at the center of philosophy, thus taking the so-called ‘linguistic turn’ (a term coined by Bergmann 1953, p. 65) – which is to hold the rather more radical position that philosophical problems are (really) linguistic problems.

Certainly both the Ideal and Ordinary Language philosophers argued that philosophical problems arise because of the ‘misuses of language’, and in particular they were united against the metaphysical uses of language. Both complained about and objected to what they called ‘pseudo-propositions’. Both saw such ‘misuses’ of language as the source of philosophical problems. But although the Positivists ruled out metaphysical (and many other non-empirically verifiable) uses of language as nonsense on the basis of the Verification Principle, the Ordinary Language philosophers objected to them as concealed non-ordinary uses of language – not to be ruled out, as such, so long as criteria for their use were provided.

The Positivists understood linguistic analysis as the weeding out of nonsense, such that a ‘logic of science’ could emerge (Carnap 1934). A ‘logic of science’ would be based on an ideal language – one which is of a perfectly perspicuous logical form, comprised exhaustively of factual propositions, logical propositions and nothing else. In such a language, philosophical problems would be eliminated because they could not even be formulated. On the other hand, for the Ordinary Language philosophers, the aim was to resolve philosophical confusion, but one could expect to achieve a kind of philosophical enlightenment, or certainly a greater understanding of ourselves and the world, in the process of such resolution: for philosophy is seen as an ongoing practice without an ultimate end-game.

Most striking, however, is the difference in the views about linguistic meaning between the Ideal and Ordinary Language philosophers. As we’ve seen, the Ideal Language view maintains a truth-functional and representational theory of meaning. The Ordinary Language philosophers held, following Wittgenstein, a use-based theory (or just a ‘use-theory’) of meaning. The exact workings of such a theory have never been fully detailed, but we turn to examine what we can of it below (section 3a). Suffice it to say here that, for the Ordinary Language philosopher, no proposition falls into a class – say ‘empirical’, ‘logical’, ‘necessary’, ‘contingent’ or ‘analytic’ or ‘synthetic’ and so forth – in and of itself. That is, it is not, on a use-theory of meaning, the content of a proposition that marks it as belonging to such categories, but the way it is used in the language. (For more on this aspect of a use-theory, see for example Malcolm 1940; 1951.)

3. Ordinary Language Philosophy: Nothing is Hidden

The Ordinary Language philosophers did not, strictly speaking, ‘reject’ metaphysics (to deny the existence of a metaphysical realm is itself, notice, a metaphysical contention). Rather, they ‘objected’ to metaphysical theorizing for two reasons. Firstly, they believed it distorted the ordinary use of language, and this distortion was itself a source of philosophical problems. Secondly, they argued that metaphysical theorizing was superfluous to our philosophical needs – metaphysics was, basically, thought to be beside the point. Both objections rested on the view that our ordinary perceptions of the world, and our ordinary use of language to talk about them, are all we need to observe in order to dissipate philosophical perplexity. On this view, metaphysics adds nothing, but poses the danger of distorting what the issues really are. This position rests on Wittgenstein’s insistence that ‘nothing is hidden’.

Wittgenstein’s view was that whatever philosophy does, it simply describes what is open to view to anyone. Philosophy is not, on this approach, a matter of theorizing about ‘how reality really is’ and then confirming such philosophical ‘facts’ – generally, not obvious to everyday experience – through special philosophical techniques, such as analysis. Philosophy is, thus, quite distinct from the empirical sciences – as the Positivists agreed. For Ordinary Language philosophy, however, the distinction did not rest on issues of verification, but on the view that philosophy is a practice rather than an accumulation of knowledge or the discovery of new, special philosophical facts. This runs contrary to the traditional, ‘metaphysical’ view that ‘reality’ is somehow hidden, or underlies the familiar, the everyday reality we experience and must be ‘revealed’ or ‘discovered’ by some special philosophical kind of study or analysis. On the contrary Wittgenstein claimed:

Philosophy simply puts everything before us, and neither explains nor deduces anything. – Since everything lies open to view there is nothing to explain. (Section 126)

…For nothing is hidden. (Section 435)

Metaphysical theorizing requires that language be used in ways that it is not ordinarily used, according to Wittgenstein, and the task proper to philosophy is to simply remind us what the ordinary uses actually are:

…We must do away with all explanation, and description alone must take its place. And this description gets its light, that is to say its purpose, from the philosophical problems. These are, of course, not empirical problems; they are solved rather by looking into the workings of our language, and that in such a way as to make us recognise these workings; in despite of an urge to misunderstand them. The problems are solved, not by giving new information, but by arranging what we have always known. Philosophy is a battle against the bewitchment of our intelligence by means of language. (Section 109)

What we do is bring words back from their metaphysical to their everyday use. (Section 116)

The idea that philosophical problems could be dissolved by means of the observation of the ordinary uses of language was referred to, mostly derogatively, by its critics as ‘therapeutic positivism’ (see the critical papers by Farrell 1946a and 1946b). It is true that the notion of ‘philosophy as therapy’ is to be found in the texts of Wittgenstein (1953, Section 133) and particularly in Wisdom (1936; 1953). However, the idea of philosophy as therapy was not one that traditional philosophers took kindly to. The method was referred to by Russell as “…procurement by theft of what one has failed to gain by honest toil” (quoted in Rorty 1992, p. 3). This view of philosophy was marked as a kind of ‘quietism’ about the real philosophical problems (a stubborn refusal to address them), and even as a kind of ‘nihilism’ about the prospects of philosophy altogether (once ‘misuses’ of language have been revealed, philosophical problems would disappear). It was only later at Oxford that Ordinary Language philosophy was eventually able to shrug off the association with the view that philosophical perplexity is a ‘disease’ that needed to be ‘cured’.

In the following sections, four important aspects of early Ordinary Language philosophy are examined, along with some of the key objections.

a. The Misuses of Language

In examining the view that metaphysics leads to the distortion of the ordinary uses of language, the question must also be answered as to why this was supposed to be a negative thing – since that is not at all obvious. An account is required of what the Ordinary Language philosophers counted as ‘ordinary’ uses of language, what they counted as non-ordinary uses, and why the latter were thought to be the source of philosophical problems, rather than elements of their solution.

The question of what counts as ‘ordinary’ language has been a pivotal point of objection to Ordinary Language philosophy from its earliest days. Some attempts were made by Ryle to get clearer on the matter (see 1953; 1957; 1961). For example, he emphasized, as we noted in the introduction, that it is not words that are of interest, but their uses:

Hume’s question was not about the word ‘cause’; it was about the use of ‘cause’. It was just as much about the use of ‘Ursache’. For the use of ‘cause’ is the same as the use of ‘Ursache’, though ‘cause’ is not the same word as ‘Ursache’. (1953, pp. 28)

He emphasized that the issue was ‘the ordinary use of language’ and not ‘the use of ordinary language’. Malcolm described the notion of the ordinary use of some expression thus:

By an “ordinary expression” I mean an expression which has an ordinary use, i.e. which is ordinarily used to describe a certain sort of situation. By this I do not mean that the expression need be one that is frequently used. It need only be an expression which would be used… To be an ordinary expression it must have a commonly accepted use; it need not be the case that it is ever used. (1942a, pp. 16)

The uses of expressions in question here refer almost entirely to what would ordinarily be said of some situation or state of affairs; what we (language users) would ordinarily call it a case of. So, of interest are the states of affairs that come under philosophical dispute, for example cases which we would ordinarily call cases of, say ‘free-will’, cases of ‘seeing some object’, cases of ‘knowing something for certain’ and so forth. It was Malcolm who, in his 1942a paper, first pointed out that non-ordinary uses of expressions occur in philosophy most particularly when the philosophical thesis propounded ‘goes against ordinary language’ – that is, when what the philosophical thesis proposes to be the case is radically different from what we would ordinarily say about some case (1942a, pp. 8).

To illustrate, take the term ‘knowledge’. According to Malcolm, its use in epistemological skepticism is non-ordinary. If one uttered “I do not know if this is a desk before me,” a hearer may assume that what is meant is that the utterer is unsure about the object before her because, for example, the light is bad, or she thinks it might be a dining table instead of a desk, or something similar. Something like this would be the, let us say, ordinary use of the term ‘know’. By way of contrast, if the utterer meant, by the expression, ‘I do not know if this is a desk before me, because I do not know the truth of any material-object statement, or I do not know if any independent objects exist outside my mind’ – then, we might say this was a non-ordinary use of the term ‘know’. We have not established that the non-ordinary use is at any disadvantage as yet. However, if the latter were what one meant when one uttered the original statement, then one would have to explain this use to a hearer (unless the philosophical use was established to be in play at an earlier moment) – that is, one would have to note that “I do not know if this is a desk before me” is being used in a different sense to the other (non-skeptical) one. On this basis, the claim is that the first use is ordinary and the second, non-ordinary. Minimally, the expressions have different uses, and thus different senses, on this argument.

It might be objected that the skeptical use is perfectly ordinary – say, among philosophers at least. However, this does not establish that the skeptical use is the ordinary use, because the skeptical use depends on the prior existence, and general acceptance, of the original use. That is, the skeptical claim about knowledge could not even be formulated if it were not assumed that everyone knew the ordinary meaning of the term ‘know’ – if this were not assumed, there would be no point in denying that we have ‘knowledge’ of material-object propositions. Indeed, Ryle noted his sense of this paradox quite early on:

…if the expressions under consideration [in philosophical arguments] are intelligently used, their employers must always know what they mean and do not need the aid or admonition of philosophers before they can understand what they are saying. And if their hearers understand what they are being told, they too are in no such perplexity that they need to have this meaning philosophically “analysed” or “clarified” for them. And, at least, the philosopher himself must know what the expressions mean, since otherwise, he could not know what it was that he was analysing. (1992, pp. 85)

Often, the ordinary use of some expression must be presupposed in order to formulate the philosophical position in which it is used non-ordinarily.

Nevertheless, challenges to the very idea of ordinary versus non-ordinary uses of language came from other quarters. In particular, a vigorous dispute arose over what the criteria were supposed to be to identify ordinary versus non-ordinary uses of language, and why a philosopher assumes herself to have any authority on this matter. For example, Benson Mates argued, in his ‘On the Verifiability of Statements about Ordinary Language’ (1958, 1964) just what the title suggests: how can any such claims be confirmed? This objection applies more seriously to the later Ordinary Language philosophical work, because that period focused on far more detailed analyses of the uses of expressions, and made rather more sweeping claims about ‘what we say’. Stanley Cavell (1958, 1964) responded to Mates that claims as to the ordinary uses of expressions are not empirically based, but are normative claims (that is, they are not, in general, claims about what people do say, but what they can say, or ought to say, within the bounds of the meaning of the expression in question). Cavell also argued that the philosopher, as a member of a linguistic community, was at least as qualified as any other member of that community to make claims about what is, or can be, ordinarily said and meant; although it is always possible that any member of the linguistic community may be wrong in such a claim. The debate has continued, however, with similar objections (to Mates’) raised by Fodor and Katz (1963, 1971), to which Henson (1965) has responded.

The reason this objection applies less to the early Ordinary Language philosophers is that, for the Wittgensteinians, claims as to what is ‘ordinarily said’ applied in much more general ways. They applied to what would ordinarily be said of a situation – for example, as we noted, cases of what we ordinarily call ‘seeing x’, or ‘doing x of her own free-will’, or ‘knowing “x” for certain’ and so forth (these kinds of cases were later argued to be paradigm cases – see below, section 3d, for a discussion of this important argument within early Ordinary Language philosophy). The Wittgensteinians were originally making their points against the kind of skeptical metaphysical views which had currency in their own time; the kinds of theories which suggested such things as ‘we do not know the truth of any material-thing statements’, ‘we are acquainted, in perception, only with sense-data and not external, independent objects’, ‘no sensory experience can be known for certain’ and so on. In such cases there is no question that the ordinary thing to say is, for example, “I am certain this is a desk before me,” and “I see the fire-engine” and “It is true that I know that this is a desk” and so forth. In fact, in such disputes it was generally agreed that there was a certain ordinary way of describing such and such a situation. This would be how a situation is identified, so that the metaphysician or skeptical philosopher could proceed to suggest that this way of describing things is false.

The objection that was directed equally at the Wittgensteinians and the Oxford Ordinary Language philosophers was in regard to what was claimed to count as ‘misuses’ of expressions, particularly philosophical ‘misuses’. In particular, it was objected that presumably such uses must be banned according to Ordinary Language philosophy (for example Rollins 1951). Sometimes, it was argued, the non-ordinary use of some expression is philosophically necessary since sometimes technical or more precise terms are needed.

In fact, it was never argued by the Ordinary Language philosophers that any term or use of an expression should be prohibited. The objection is not borne out by the actual texts. It was not argued that non-ordinary uses of language in and of themselves were a cause of philosophical problems; the problem lay in, mostly implicitly, attempting to pass them off as ordinary uses. The non-ordinary use of some term or expression is not, merely, a more ‘technical’ or more ‘precise’ use of the term – it is to introduce, or even assume, a quite different meaning for the term. In this sense, a philosophical theory that uses some term or expression non-ordinarily is talking about something entirely different to whatever the term or expression talks about in its ordinary use. Malcolm, for example, argues that the problem with philosophical uses of language is that they are often introduced into discussion without being duly noted as non-ordinary uses. Take, for example, the metaphysical claim that the content of assertions about experiences of an independent realm of material objects can never be certain. The argument (see Malcolm 1942b) is that this is an implicit suggestion that we stop applying the term ‘certain’ to empirical propositions, and reserve it for the propositions of logic or mathematics (which can be exhaustively proven to be true). It is a covert suggestion about how to reform the use of the term ‘certain’. But the suggested use is a ‘misuse’ of language, on the Ordinary Language view (that is, applying the term ‘certain’ only to mathematical or logical propositions). Moreover, the argument presents itself as an argument about the facts concerning the phenomenon of certainty, thus failing to ‘own up’ to being a suggestion about the use of the term ‘certain’ – leaving the assumption that it uses the term according to its ordinary meaning misleadingly in place.

The issue, according to Ordinary Language philosophy, is that the two uses of ‘certain’ are distinct and the philosopher’s sense cannot replace the ordinary sense – though it can be introduced independently and with its own criteria, if those can be provided. Malcolm says, for example:

…if it gives the philosopher pleasure always to substitute the expression “I see some sense-data of my wife” for the expression “I see my wife,” etc., then he is at liberty thus to express himself, provided he warns people beforehand, so that they will understand him. (1942a, pp. 15)

Elsewhere (1951), Malcolm suggests that it is not a ‘correct’ use of language to say, “I feel hot, but I am not certain of it.” But this is not to suggest that the expression must be banned from philosophical theorizing, nor that it is not possible that it might, at some point, become a perfectly correct use of language. What is crucial is that, for any use newly introduced to a language, how that expression is to be used must be explained, that is, criteria for its use must be provided. He says:

We have never learned a usage for a sentence of the sort “I thought that I felt hot but it turned out that I was mistaken.” In such matters we do not call anything “turning out that I was mistaken.” If someone were to insist that it is quite possible that I were mistaken when I say that I feel hot, then I should say to him: Give me a use for those words! I am perfectly willing to utter them, provided that you tell me under what conditions I should say that I was or was not mistaken. Those words are of no use to me at present. I do not know what to do with them…There is nothing we call “finding out whether I feel hot.” This we could term either a fact of logic or a fact of language. (1951, pp. 332)

Malcolm went so far as to suggest the laws of logic may well, one day, be different to what we accept now (1940, pp. 198), and that we may well reject some necessary statements, should we find a use for their negation, or for treating them as contingent (1940, pp. 201ff). Thus, the objection that, according to Ordinary Language philosophy, non-ordinary uses, or new, revised or technical uses of expressions are to be prohibited from philosophy is generally unfounded – though it is an interpretation of Ordinary Language philosophy that survives into the present day.

b. Philosophical Disputes and Linguistic Disputes

There was no lack of voluble objection to the claim that philosophical disputes are ‘really linguistic’ (or sometimes ‘really verbal’). Therefore, it is essential to understand the Ordinary Language philosophers’ reasons for holding it to be true (although the later Oxford philosophers were generally less committed to it in quite such a rigid form). One of the most explicit formulations of this view is, once again, to be found in Malcolm’s 1942a paper. Its rough outline is this: in certain philosophical disputes the empirical facts of the matter are not at issue – that is to say, the dispute is not based on any lack of access to the empirical facts, the kinds of fact we can, roughly, ‘observe’. If the dispute is not about the empirical facts, according to Malcolm, then the only other thing that could be at issue is how some phenomenon is to be described – and that is a ‘linguistic’ issue. This is particularly true of metaphysical disputes, according to Malcolm, who presents as examples the following list of ‘metaphysical’ propositions (1942a, pp. 6):

1. There are no material things

2. Time is unreal

3. Space is unreal

4. No-one ever perceives a material thing

5. No material thing exists unperceived

6. All that one ever sees when he looks at a thing is part of his own brain

7. There are no other minds – my sensations are the only sensations that exist

8. We do not know for certain that there are any other minds

9. We do not know for certain that the world was not created five minutes ago

10. We do not know for certain the truth of any statement about material things

11. All empirical statements are hypotheses

12. A priori statements are rules of grammar

According to Malcolm, affirming or denying the truth of any one of these propositions is not affirming or denying a matter of fact, but rather, Malcolm claims, “…it is a dispute over what language shall be used to describe those facts” (1942a, pp. 9). On what basis does he make this claim? The obvious objection on behalf of the metaphysician is that she certainly is talking about ‘the facts’ here, namely the metaphysical facts, and not about language at all.

Malcolm argues thus: he imagines a dispute between Russell and Moore, as illustrated by the propositions noted above. He takes as an example Russell’s assertion that “All that one ever sees when one looks at a thing is part of one’s own brain”. This is the sort of proposition that would follow from the philosophical thesis that all we are acquainted with in perception is sense-data, and that we do not perceive independent, external objects directly (See Russell 1927, pp. 383 – see also Sense-Data). Malcolm casts Moore (unauthorized by Moore, it should be noted) as replying to Russell: “This desk which both of us now see is most certainly not part of my brain, and, in fact, I have never seen a part of my own brain” (Malcolm 1942a, pp. 7). We are asked to notice that there is no disagreement, in Russell and Moore’s opposing propositions, about the empirical facts of the matter. The dispute does not arise because either Russell or Moore cannot see the desk properly, or is hallucinating, so that they disagree over whether what is before them is, or is not, a desk. That is, Russell and Moore agree that the phenomenon they are talking about is the one that is ordinarily described by saying (for example) “I see before me a desk.”

Malcolm asserts that “Both the philosophical statement, and Moore’s reply to it are disguised linguistic statements” (1942a, pp. 13 – my italics). Malcolm’s claim that this kind of dispute is not ‘empirical’ has less to do with a Positivistically construed notion of ‘verifiability’, and more to do with the contrast such a dispute has with a kind of dispute that really is empirical, or ‘factual’ – in the ordinary sense, where getting a closer look, say, at something would resolve the issue. The argument that the dispute is ‘really linguistic’ rests on Malcolm’s claim that when a philosophical thesis denies the applicability of some ordinary use of language, it is not merely suggesting that, occasionally, when we make certain claims, what we say is false. According to Malcolm, when a philosophical thesis suggests (implicitly), for example, that we should withhold the term ‘certain’ from all but analytic (mathematical or logical) statements, the suggestion is not that it is merely false to say one is certain about a synthetic (material-thing) statement – but that it is logically impossible. Malcolm tries to support his contention by drawing attention to the features apparent in the sort of dispute that is really about ‘the facts’, and one that he regards as, rather, really linguistic:

In ordinary life everyone of us has known of particular cases in which a person has said that he knew for certain that some material-thing statement was true, but that it has turned out that he was mistaken. Someone may have said, for example, that he knew for certain by the smell that it was carrots that were cooking on the stove. But you had just previously lifted the cover and seen that it was turnips, not carrots. You are able to say, on empirical grounds, that in this particular case when the person said that he knew for certain that a material-thing statement was true, he was mistaken…It is an empirical fact that sometimes when people use statements of the form: “I know for certain that p”, where p is a material-thing statement, what they say is false. But when the philosopher asserts that we never know for certain any material-thing statements, he is not asserting this empirical fact…he is asserting that always…when any person says a thing of that sort his statement will be false. (1942a, pp. 11)

So, Malcolm proposes that, since their dispute is not empirical, or contingent, we ought to understand Russell as saying that “…it is really a more correct way of speaking to say that you see part of your brain than to say that you see [for example] a desk” and we have Moore saying that, on the contrary, “It is correct language to say that what we are doing now is seeing [a desk], and it is not correct language to say that what we are doing now is seeing parts of our brains” (1942a, pp. 9).

The obvious objection here is to the claim that the dispute is linguistic rather than about the phenomenon of, for example, perception itself. C. A. Campbell (1944), for instance, remarks that:

…it seems perfectly clear that what [these] arguments [such as the ones mentioned by Malcolm] [are] concerned with is the proper understanding of the facts of the situation, and not with any problem of linguistics: and that there is a disagreement about language with the plain man only because there is a disagreement about the correct reading of the facts…The philosopher objects to such [ordinary] statements only in the context of philosophical discourse where it is vital that our words should accurately describe the facts. (pp. 15)

This objection is further echoed in Morris Weitz (1947), responding to Malcolm’s Ordinary Language treatment of the propositions of epistemic skepticism, such as “no material-thing statement is known for certain”:

The first thing that needs to be pointed out is that philosophers who recommend the abolition of the prefix ‘it is certain that’ as applied to empirical statements do not suggest that the language of common sense is mistaken. What they mean to say to common sense is that its language is alright provided that its interpretation of the facts is all right. But the interpretation is not all right; therefore, the articulation of the interpretation is mistaken, and needs revision. [Thus]…will we not, and more correctly say, common sense and its language are here in error and….incline a little with Broad to the view that common sense, at least on this issue, ought to go out and hang itself. (pp. 544-545)

A more contemporary version of this objection, which applies to the idea that philosophical disputes are about concepts and thoughts (rather than the ordinary uses of language) may be found in Williamson (2007). He argues that not all philosophy is (the equivalent of) ‘linguistic’, because philosophers may well study objects that have never (as yet) been thought or spoken about at all (‘elusive objects’). He says:

Suppose, just for the sake of argument, that there are no [such] elusive objects. That by itself would still not vindicate a restriction of philosophy to the conceptual, the realm of sense or thought. The practitioners of any discipline have thoughts and communicate them, but they are rarely studying those very thoughts: rather, they are studying what their thoughts are about. Most thoughts are not about thoughts. To make philosophy the study of thought is to insist that philosophers’ thoughts should be about thoughts. It is not obvious why philosophers should accept that restriction. (pp. 17-18)

The general gist of the objection was that philosophy is just as much the study of facts as any science, except that what is under investigation are metaphysical rather than physical facts. There is no reason, on this objection, to understand metaphysical claims as claims about language. The early Ordinary Language philosophers did not formulate a complete response to this charge. Indeed, that the charge is still being raised demonstrates that it still has not been answered to the satisfaction of its critics. Some attempts were made to emphasize, for example, that an inquiry about how some X is to be described ought not be distinguished from an inquiry into the nature of X (for example Austin 1964; Cavell 1958, pp. 91; McDowell 1994, pp. 27) – given that we have no obviously independent way to study such ‘natures’. This appears not to have convinced those who disagree, however.

It was also part of a defence to this objection to appeal to the so-called ‘linguistic doctrine of necessity’. The terminology popular in the day is partly to blame for the general disdain of this view (which was only a ‘doctrine’ as such in the hands of the Positivists), as it sounds as though the claim is that necessary propositions, because they are ‘linguistic’, are not to be understood as being about the world and the way things are in it, but about words, or even about the ways words are used. This of course would have the result that necessary propositions turn out to be contingent propositions about language use, which was correctly recognised to be absurd (as noted in Malcolm 1940, pp. 190-191). This was rather more how the Positivists described the doctrine. For them, the thought in distinguishing ‘linguistic’ from ‘factual’ propositions was that the former are ‘rules of language’, and therefore truth-valueless, or ‘non-cognitive’. But on the Ordinary Language philosophers’ view, necessary propositions are not (disguised) assertions about the uses of words; they are ‘about’ the world just as all propositions are (and so are perfectly ‘cognitive’ bearers of truth-values). On the other hand, what makes them count as necessary, what justifies us in holding them to be so, is not any special metaphysical fact; only the ordinary empirical fact that this is how some of the propositions of language are used (Malcolm 1940, pp. 192; 1942b). On this view, it is through linguistic practice that we establish the distinction between necessary and contingent propositions. We have met this idea already in some preliminary remarks about a use-theory of meaning (in section 2d above).

However, even on the more amenable use-based interpretation of the linguistic doctrine of necessity, metaphysicians still wish to insist that some necessities are, indeed, metaphysical, and not connected with the uses of propositions at all – for example, that nothing is both red and green all over, that a circle cannot be squared, and so forth. Thus, the objection persists that in philosophy what one is doing is inquiring into facts, that is, the nature of phenomena, the general structure and fundamental ontology of reality, and not at all the meanings of the expressions we use to describe them. Indeed, it seems to be the most prevalent and recurrent complaint against ‘linguistic’ philosophy, and it seems to be an argument in which neither side will be convinced by the other, and thus one that will probably go on indefinitely. At least one question that has not fully entered the debate is why a ‘linguistic’ problem is understood to be so philosophically inferior to a metaphysical one.

c. Ordinary Language is Correct Language

The contention ‘ordinary language is correct language’ forms the rationale, or justification, for the method of the appeal to ordinary language. This is a basic and fundamental tenet on which it is safe to say all Ordinary Language philosophers concur, more or less strongly. However, it has been often misunderstood, and the misunderstanding has unfortunately in part been attributable to the early Ordinary Language philosophers. The misunderstanding lies in conflating the notion of ‘correctness’ with the notion of ‘truth’. It appears that the Ordinary Language philosophers themselves did not always make this distinction clearly enough, nor did they always adhere to it, as we shall see below. We shall examine one formulation of the argument to the conclusion that ‘ordinary language is correct language’, and see that it need not be (as it very often has been) understood as a claim that what is said in the ordinary use of language must thereby be true (or its converse: that whatever is said in using language non-ordinarily must thereby be false).

The latter interpretation of Ordinary Language philosophy was, and is, widespread. For example, Chisholm, in 1951, devoted a paper to rejecting the claim that “Any philosophical statement which violates ordinary language is false” (pp. 175). The converse, that any statement which is in accord with ordinary language is true, is a version of what came to be known as the ‘paradigm case argument’, which we shall examine in the following section.

Once again, the classic formulation of the argument to the conclusion that ‘ordinary language is correct’ is to be found in Malcolm’s 1942a paper. His argument is roughly this: not only are metaphysical philosophical disputes not based on any facts, but metaphysical claims are, generally, claims to necessary rather than ordinary, contingent truth (that is, a philosophical thesis does not claim that sometimes we cannot be certain of a material-thing statement, for that is perfectly true and we all know this; rather the philosophical thesis says that it is never the case that such statements are certain). We should note that it is at least debatable whether a metaphysical thesis might be presented as contingent (See article on Modal Illusions). Certainly for the most part, metaphysical theses are presented as necessary truths, as there are separate difficulties in doing otherwise. Malcolm’s argument is that these metaphysical theses, which contradict the ordinary uses of language, have the semantic consequence that the ordinary uses of expressions fail to express anything at all.

No metaphysician would argue for this explicitly. Indeed, most suggest that the ordinary expression they contest, or that is ultimately contested by their thesis, is ‘just wrong’, that is, merely false. The thought is that the folk get certain things wrong all the time and need to be corrected: the empirical sciences are the model here. But the analogy with science is misleading, since science only shows us that certain ways we describe things may turn out to be contingently false. But, if the metaphysically necessary propositions in question turn out to be true, that is, the ones that are inconsistent with ordinary language, the result is not that our ordinary ways of describing certain phenomena or situations turn out to be merely false. Rather, our ordinary uses of language would turn out to express that which is necessarily false – they would express, or try to express, that which is metaphysically impossible.

According to Malcolm, the implication that what is expressed in certain ordinary uses of language is necessarily false, or metaphysically impossible, renders those uses ‘self-contradictory’ (1942a, pp. 11). What he means is this: if it turns out that, say, the proposition “One never perceives a material object” is true, then because it is necessarily true, it is therefore impossible (for us) to perceive a material object. Therefore, material objects are (for us) imperceptible. So, to assert “I perceive a material object” is not merely to state a falsehood (like saying “The earth is flat”), but to state something like “I perceive something that is imperceptible.” If a metaphysical thesis is necessarily true, and it contradicts what would be said ordinarily, then the latter is necessarily false, and to assert a necessarily false proposition is to fail to assert anything at all. Failing to assert anything, in the utterance of an assertion, is to fail to use the language correctly – and this is the implicit upshot of some metaphysical theses.

On the contrary, Malcolm claims, such a misuse of language is impossible, because “The proposition that no ordinary expression is self-contradictory is a tautology” (pp. 16 – my italics). This is not to say that whatever is said using language ordinarily is thereby actually true. Malcolm insists that there are two ways one can ‘go wrong’ in saying something; one way is to be wrong about the facts, the second way is to use language incorrectly. And so he notes that it is perfectly possible to be using language correctly, and yet state something that is plainly false. But, on this view, one cannot be uttering self-contradictions and at the same time be saying something true or false for that matter. The assertion of contradictions, according to this view, has no use for us in our language (so far at least), and therefore they have no meaning (clearly, this is an aspect of the use-theory of meaning at work). On the other hand, according to Malcolm, to have a use just is to have a meaning. Thus, any expression that does have a use cannot also be ‘meaningless’ – or self-contradictory. ‘Correct’ language, therefore, is language that is or would be used, and is therefore meaningful, on this argument. Given the nature of our language, we do not, as a matter of fact, use self-contradictory expressions to describe situations (literally, sincerely and non-metaphorically that is) – although we do say such things as “It is and it is not,” but we do not say of such uses that one is contradicting oneself (see Malcolm 1942a, pp. 16 for more on uttering contradictions). He says:

The reason that no ordinary expression is self-contradictory is that a self-contradictory expression is an expression which would never be used to describe any sort of situation. It does not have a descriptive usage. An ordinary expression is an expression which would be used to describe a certain sort of situation; and since it would be used to describe a certain sort of situation, it does describe that sort of situation. A self-contradictory expression, on the contrary, describes nothing. (1942a, pp. 16)

It is on the basis of this argument that Malcolm claims that Moore, in the imagined dispute with Russell, actually refutes the philosophical propositions in question – merely by pointing out that they do ‘go against ordinary language’ (1942a, pp. 5). It is here that we get some insight into why it was assumed that Ordinary Language philosophy argued that anything said in the non-ordinary use of language must be false (and anything said in ordinary language must be true): Malcolm after all does say that what Moore says refutes the skeptical claims and shows the falsity of the proposition in question.

However, it must be kept in mind that what Malcolm is claiming to be true and false here are the linguistic versions of the dispute: he claims that Moore’s assertion that “It is a correct use of language to say that ‘I am certain this is a desk before me’” is true – he does not argue that Moore has proven there is a desk before him. Moreover, the philosophical proposition Moore has proven to be false is not “No material-thing statement is known for certain,” but the claim that “It is not a correct use of language to say ‘I am certain this is a desk before me’.” What Malcolm has argued, even if he himself did not make this entirely clear (and perhaps even deliberately equivocated on it), is that certain claims about what is a correct (ordinary) use of language are true or false. His is not an argument with metaphysical results, and he has not shown, through Moore, that any version of skepticism is false. Indeed, the metaphysical thesis itself is beside the point. If the skeptic insists that, although it may be an incorrect use of language to say “I am not certain that this is a desk before me,” it may nevertheless be true, then the onus is on the skeptic to explain why it is that our ordinary claims to ‘know’ such and such are, therefore, not merely contingently false, but necessarily false. By Malcolm’s lights, this would amount to the skeptic claiming that this particular part of our use of language, that is, that involving some ordinary claims to ‘know’ such and such, is self-contradictory; yet, for Malcolm, as we have seen, this is not possible, because ordinary language is correct language. At any rate, in assessing the Ordinary Language argument, it is clear that the claim that philosophical propositions are incorrect uses of language and the claim that what they express is false ought not to be conflated.

Although Malcolm has not refuted the skeptic, he nevertheless has demonstrated that there are some semantic difficulties in formulating the skeptical thesis in the first place – since it requires the non-ordinary use of language. This places additional, hitherto unacknowledged constraints on certain skeptical and metaphysical theses. Either the skeptic/metaphysician must acknowledge the non-ordinary use of the expression in question, or she must argue that we must reform our ordinary use. In the first case, she must then acknowledge that her thesis concerns something other than what we are ordinarily talking about when we use the term in question (for example ‘know’, ‘perceive’, ‘certain’, and so forth). In the second case, she must convince us that our ordinary use of the expression has, hitherto unbeknownst to us, been a misuse of language: we have, up till now, been asserting something that is necessarily false. Someone, in the imagined philosophical dispute, is failing to use language correctly, or failing to express anything in their description of some phenomenon. The dilemma the skeptic faces is that neither of these two possibilities is a comfortable one for her to explain while maintaining the truth of her thesis. This dilemma creates a situation for the skeptic that, although not refuting her directly, poses a possibly insurmountable challenge to meaningfully formulating her thesis.

d. The Paradigm Case Argument

The so-called ‘paradigm-case argument’ generated a good deal of debate (for example Watkins 1957; Richman 1961; Flew 1966; Hanfling 1990). It plays a significant role in the reception of Ordinary Language philosophy, because it tends to be interpreted, mistakenly, as showing that Ordinary Language philosophy contends that what is said in ordinary language must be true. This is a prime example, as will be shown, of conflating the claim that ordinary language is correct with the claim that what is expressed in the ordinary use of some expression is true.

It was understood that the paradigm-case argument was an argument that shows that there must be (at least some) true instances, in the actual and not merely possible world, of anything that is said (referred to) in ordinary language. For example, Roderick Chisholm (1951) says “There are words in ordinary language, Malcolm believes, whose use implies that they have a denotation. That is to say, from the fact that they are used in ordinary language, we may infer that there is something to which they truly apply” (pp. 180). On this interpretation, certain metaphysical truths, indeed empirical truths, could be proven simply by the fact that we use a certain expression ordinarily. This seems clearly an absurd position to take, and does not, once again, appear to be supported by the texts.

Malcolm invokes what is now known as the paradigm-case argument by reference to one of the outcomes on the assumption that the philosophical claims he is examining are true. If we consider, say, the thesis that “No-one ever knows for certain the truth of any material-thing statement” to be true, then on that theory it turns out that an ordinary expression such as “I am certain there is a chair in this room” is never true, no matter how good our evidence for the claim is – indeed, regardless of the evidence. Malcolm casts the ‘Moorean’ reply to such a view as follows: “[On the contrary] both of us know for certain there are several chairs in this room, and how absurd it would be to suggest that we do not know it, but only believe it, or that it is highly probable but not really certain!” (1942a, pp. 12). Malcolm says,

What [Moore’s] reply does is give us a paradigm of absolute certainty, just as in the case previously discussed his reply gave us a paradigm of seeing something not a part of one’s brain. What his reply does is to appeal to our language-sense; to make us feel how queer and wrong it would be to say, when we sat in a room seeing and touching chairs, that we believed there were chairs but did not know it for certain, or that it was only highly probable that there were chairs… Moore’s refutation consists simply in pointing out that [the expression “know for certain”] has an application to empirical statements. (1942a, pp. 13)

Here, he says nothing to the effect that Moore has proven that it is true that there are in fact chairs and tables before us, and so forth. All Malcolm has claimed is that Moore has denied, indeed disproven, the suggestion that the term ‘certainty’ has no application to empirical statements. He has disproven that by invoking examples where it is manifestly the case that the term ‘certainty’ has been, and can be, ‘applied’. Nothing, notice, has been said as to whether there really are tables, chairs and cheese before us – unless, that is, we confuse ‘having an application’ with ‘having a reference’ or ‘having a true application’. But it is not necessary to interpret the claims this way. ‘Having an application’ means, as Malcolm argues, having a use in a given situation. Malcolm’s example in the quote above is a ‘paradigm of absolute certainty’, but not, notice, a proof of what it is that one is certain of. It is a ‘paradigm of absolute certainty’ because it is a prime example of the sort of situation, or context in which the term ‘certain’ applies – it is a paradigm of the term’s use: namely, in situations where we have very good (though not infallible) evidence, and no reason to think that our evidence is not of the highest quality. Indeed, on Malcolm’s view, a ‘paradigm case’ is an example of the ordinary use and hence the ordinary meaning of an expression.

The point of appealing to paradigm cases, then, is not to guarantee the truth of ordinary expressions, but to demonstrate that they have a use in the language. Thus, the paradigm-case argument is an effective move against any view that implies that some ordinary expression does not have a use, or application – for example that what we call ‘certain’ (well-evidenced non-mathematical statements) are not ‘really’ certain. That the ordinary use of expressions should be incorrect is, on Malcolm’s argument, as impossible as it would be for the rules of chess to be incorrect (and therefore that what we play is not ‘really’ chess).

e. A Use-Theory of Linguistic Meaning

The ‘use-theory’ of meaning, whose source is Wittgenstein and which was adopted by the Ordinary Language philosophers, objects to the idea that language could be treated like a calculus, or an ‘ideal language’. If language is like a calculus, then its ‘meanings’ could be specified, so that determinate truth-conditions could be paired with every expression of a language in advance of, and independently of, any particular use of a term or expression in a speech-act. Therefore, the observation of our actual uses of expressions in the huge variety of contexts and speech-acts we do and can use them in would be irrelevant to determining the meaning of expressions. On the contrary, for the Ordinary Language Philosopher, linguistic meaning may only be determined by observing the various ways expressions are actually, ordinarily used, and it is not independent of these uses. Thus, Wittgenstein claimed that:

For a large class of cases – though not for all – in which we employ the word “meaning” it can be defined thus: the meaning of a word is its use in the language. (Philosophical Investigations, Section 43)

Does this mean that only sometimes ‘meaning is use’? The remark has been interpreted this way (for example Lycan 1999, pp. 99, fn 2). But it need not be. We need to notice that in the remark, Wittgenstein refers to ‘cases where we employ the word “meaning,”’ and not ‘cases of meaning’. The difference, it might be said, is that sometimes when we use the term ‘meaning’ we are not talking about linguistic meaning. That is, we may talk about, for example, “The meaning of her phone call,” in which case we are not going to be assisted by looking to the use of the word ‘phone call’ in the language. On the other hand, when we use the word ‘meaning’ as in “The meaning of ‘broiling’ is (such and such),” then looking to the use of the word ‘broiling’ in the language will help. Thus, an interpretation is possible in which the remark does not mean that only sometimes ‘meaning is use’. Rather, we can interpret it as claiming that linguistic meaning is to be found in language use.

At the most fundamental level of a use-theory, language is not representational – although it is sometimes (perhaps even almost always) used to represent. On this view, the ‘meaning’ of a term (or expression) is exhausted by its use: there is nothing further, nothing ‘over and above’, the use of an expression for its meaning to be.

A good many objections have been raised to this theory, which we cannot here examine in full. However, most appear to object to it because it apparently rules out the possibility of a systematic theory of meaning. If meaning-is-use, then the ideal language approach is out of the question, and determining linguistic meaning becomes an ad-hoc process. This thought has displeased many, as they have understood it to entail something of an end to the possibility of a philosophy of language per se. Dummett (1973), for example, has complained that:

…[although] the ‘philosophy of ordinary language’ was indeed a species of linguistic philosophy, [it was] one which was contrary to the spirit of Frege in two fundamental ways, namely in its dogmatic denial of the possibility of system, and in its treatment of natural language as immune from criticism. (pp. 683)

Soames (2003) goes on to echo the same complaint:

Rather than constructing general theories of meaning, philosophers were supposed to attend to subtle aspects of language use, and to show how misuse of certain words leads to philosophical perplexity and confusion. [Such a view] proved to be unstable… What is needed is some sort of systematic theory of what meaning is, and how it interacts with these other factors governing the use of language. (pp. xiv)

It was ultimately the re-introduction of the possibility of a systematic theory of meaning by Grice, later at Oxford (see section 5, below), that finally spelled the end for Ordinary Language philosophy.

4. Oxford