Multiculturalism

Cultural diversity has been present in societies for a very long time. In Ancient Greece, there were various small regions with different customs, traditions, dialects and identities, for example, those of Aetolia, Locris, Doris and Epirus. In the Ottoman Empire, Muslims were the majority, but there were also Christians, Jews, pagan Arabs, and other religious groups. In the 21st century, societies remain culturally diverse, with most countries having a mixture of individuals from different races, linguistic backgrounds, religious affiliations, and so forth. Contemporary political theorists have labeled this coexistence of different cultures in the same geographical space ‘multiculturalism’. That is, one of the meanings of multiculturalism is the coexistence of different cultures.

The term ‘multiculturalism’, however, has been used not only to describe a culturally diverse society but also to refer to a kind of policy that aims to protect cultural diversity. Although multiculturalism is a phenomenon with a long history, and some countries, such as the Ottoman Empire, did historically adopt multicultural policies, the systematic study of multiculturalism in philosophy only flourished in the late twentieth century, when it began to receive special attention, especially from liberal philosophers. The philosophers who initially devoted the most time to the topic were mainly Canadian, but in the 21st century it is a widespread topic in contemporary political philosophy. Before multiculturalism became a topic in political philosophy, most literature in this area focused on the fair redistribution of resources; by contrast, the debate on multiculturalism highlights the idea that cultural identities are also normatively relevant and that policies ought to take these identities into consideration.

To understand the discussion of multiculturalism in contemporary political philosophy, four key topics should be taken into consideration: the meaning of the concept of ‘culture’, the meaning of the concept of ‘multiculturalism’, the debate about justice between cultural groups and the discussion regarding the practical implications of multicultural practices.

Table of Contents

- The Concepts of Culture in Contemporary Political Theory

- The Concept of Multiculturalism

- The Second Wave of Writings on Multiculturalism

- Animals and Multiculturalism

- References and Further Reading

1. The Concepts of Culture in Contemporary Political Theory

Multiculturalism is, before anything else, a theory about culture and its value. Hence, to understand what multiculturalism is, it is indispensable to clarify the meaning of culture. This section outlines five conceptions of culture that are predominant in contemporary political philosophy: the semiotic, normative, societal, economic/rational choice and anti-essentialist cosmopolitan conceptions. As Festenstein (2005) points out, these are not competing conceptions of culture, each selecting a distinct set of necessary and sufficient conditions for the correct application of the predicate. Rather, all of them defend, albeit in slightly different ways, the idea that culture is constitutive of personal identity. Therefore, it is possible to defend, say, a semiotic conception of culture while admitting that a culture may have normative, societal, economic and cosmopolitan features.

a. The Semiotic Perspective

The semiotic conception of culture was very popular in the 1960s and has its roots in classic social anthropology. Social anthropologists like Margaret Mead, Lévi-Strauss and Malinowski considered culture to be a set of social systems, symbols, representations and practices of signification held by a certain group. From this perspective, then, a culture is defined as a system of ideals or structures of symbolic meaning. Put differently, according to this view, culture should be understood as a symbolic system, which is in turn a mode of communication that represents the world. This form of communication is based on symbols, underlying structures and beliefs or ideological principles. One of the philosophers endorsing this conception of culture is Parekh (2005). According to Parekh (2005, p. 139), human life is organized by a historically created system of meaning and significance, and this system is what we call culture.

Taylor (1994b), who contends that human beings are self-interpreting animals, that is, that each individual’s identity depends on the way he or she sees themselves, also endorses this viewpoint. These self-understandings necessarily have meaning. Hence, the thesis that human beings are self-interpreting animals presupposes that human existence is constituted by meaning. In turn, this implies that human beings are also language animals, where ‘language’ covers all modes of expression (music, spoken language, art and so forth) (Taylor, 1994b). Being language animals means that individuals are capable of creating value and meaning, and in Taylor’s view these meanings originate in each individual’s cultural community. That is to say, language is, at least primarily, a result of individuals’ interaction with their own cultural community (Taylor, 1974; 1994b). More precisely, linguistic meanings and self-interpretations originate in individuals’ linguistic communities. Thus, culture is a system of symbolic meaning.

Bearing this in mind, the study of culture from the semiotic perspective can be understood as the analysis or elucidation of meaning. Just as in hermeneutics the reader has to interpret the meaning of a text, so in studying a culture one has to interpret its internal logic (Festenstein, 2005). Quine’s (1960) story of the native who says ‘Gavagai!’ whenever he sees a rabbit illustrates what interpreting the internal logic of a culture involves. Quine suggests that multiple meanings may be associated with this utterance: it may mean ‘rabbit’, ‘food’, ‘an undetached rabbit-part’, ‘there will be a storm tonight’ (if the native is superstitious) and so forth. From the semiotic point of view, the symbolism, sign process or system of meaning underlying this utterance is what culture is, and this is what should be studied. In short, the semiotic approach studies culture’s autonomous logic.

b. The Normative Conception

The normative conception of culture is usually adopted by communitarians. From this point of view, culture is important because it provides the beliefs, norms and moral reasons that prompt individuals to act. Hence, part of what a person is consists of their moral commitments: their practical identity is made up of these commitments, and their reasons to act are motivated by them. In other words, according to the normative conception of culture, the term ‘culture’ refers to a distinctive group of norms and beliefs that constitute the practical identity of a group of individuals; thereby, people’s values and commitments result, in part, from culture (Festenstein, 2005, p. 14). By way of illustration, part of what a Christian, a Muslim and a Jew are is constituted by the fact that they abide by the moral teachings of the Bible, the Quran and the Torah, respectively. Understanding who one is, then, is a matter of understanding one’s moral commitments; in this sense, culture is norm-providing. Shachar (2001a, p. 2) is one of the philosophers who endorses this conception of culture. According to her, culture is a comprehensive and distinguishable world view from which community law can be created. Shachar (2001a, p. 2) labels minority groups that have such a culture ‘nomoi communities’. According to her, this term can apply to religious, ethnic, racial, tribal and national groups, for all these groups exhibit the normative dimension required to be classified as a ‘nomoi community’.

The normative conception of culture is usually associated with the semiotic conception, in the sense that one does not contradict the other; in fact, they may be complementary. For instance, Taylor endorses both perspectives. However, this combination is not necessary, because a system of meaning and significance does not need to provide moral reasons in order to motivate action. From the semiotic perspective, what someone is need not be his or her moral commitments; it can be anything within the system. That is, the system of meaning may be based on anything, whereas, according to the normative conception, culture is a strong source of one’s moral commitments.

To explain how the semiotic and normative conceptions of culture can be compatible, consider Taylor’s conception of culture. Taylor considers individuals to be self-interpreting animals, which entails that human existence is constituted by meanings. From the normative point of view, these meanings are moral evaluations, or ‘strong evaluations’. Strong evaluations are the distinctions of worth that individuals make regarding objects of desire; in other words, they form a background of distinctions between the things that individuals consider important or worthy and those they consider less valuable. From the normative perspective of culture, individuals direct their lives and purposes towards what they consider morally worthwhile. In short, these strong evaluations or moral frameworks indicate to individuals what is meaningful and rewarding, and individuals are motivated by them (Taylor, 1974). Therefore, the self has a moral dimension, in the sense that rationality and identity refer to moral evaluations. Identity is connected with morality because what individuals are is constituted by their self-interpretations, which are ultimately provided by strong evaluations (Taylor, 1974). These moral beliefs or strong evaluations are in turn provided by an individual’s culture, which is why this can be considered a normative conception of culture.

c. The Societal Conception

The societal conception of culture is mainly associated with the Canadian philosopher Kymlicka. To understand it, it is helpful to consider Kymlicka’s dual typology of the sources of diversity in contemporary societies; for Kymlicka, there are two kinds of diversity: polyethnic minorities and national minorities.

Kymlicka uses the term ‘polyethnicity’ to refer to the kind of diversity that results from immigration. Polyethnic minorities correspond to what are commonly called ethnic groups. According to him, polyethnic groups are usually not territorially concentrated; rather, they are dispersed around the country to which they migrated. Furthermore, Kymlicka affirms that they do not usually want to be segregated from the culture of the majority; rather, they want to integrate with it, demanding policies that give them equal citizenship. For instance, these groups demand language rights, voting rights, places in parliament and so forth. However, even though equal citizenship is usually what polyethnic groups aspire to, this is not always the case. Kymlicka contends that polyethnic groups can be sub-divided into liberal and illiberal groups (Kymlicka, 2001, pp. 55-58). Liberal polyethnic groups have aspirations that do not go against liberal values; they usually aspire to be integrated into society and demand policies for equal citizenship. As an example, Kymlicka usually refers to Latin American immigrants living in the United States, who, in broad terms, demand language rights, such as an education curriculum in Spanish.

On the other hand, for Kymlicka, illiberal polyethnic groups are those whose culture and demands on the state are not in accordance with liberal values. For example, some religious minority ethnic groups advocate the death penalty for gay members of their groups; others have gendered and discriminatory norms concerning divorce and marriage. Some of these groups make demands more similar to those of national minorities, but Kymlicka contends that these cases are the exception, not the rule (Kymlicka, 1995, pp. 11-26, 97-99).

Polyethnic groups are not, in Kymlicka’s view, cultures; according to him, only nations are. Kymlicka (1995, p. 18) uses the term ‘nation’ interchangeably with the terms ‘culture’, ‘people’ and ‘societal culture’, as when he writes: “I am using ‘a culture’ as synonymous with ‘a nation’ or ‘a people’—that is, as an intergenerational community, more or less institutionally complete, occupying a given territory or homeland, sharing a distinct language and history”. In Kymlicka’s view, a national minority is a group within a society that has its own societal culture and fewer members than the majority. For Kymlicka (1995, p. 76), a societal culture is a kind of social setting that provides individuals with meaningful ways of life in both the public and the private sphere. Societal cultures are important mainly because they give individuals the groundwork from which they can make choices. More precisely, for Kymlicka (1995, p. 76), because societal cultures provide meaningful ways of life, they provide the social context that individuals need in order to make their own choices (that is, to be autonomous). Kymlicka’s rationale is that autonomy is only possible in certain social contexts and that these contexts are set up by societal cultures.

From Kymlicka’s point of view, national minorities or minority societal cultures usually share a number of characteristics. First, national minorities settled in the country long ago. For example, most of the Amish communities in Pennsylvania settled there in the eighteenth century as a result of religious persecution in Europe, and Aboriginal peoples in Australia and many Native American groups in the USA have lived in their territories for a very long time. Second, from Kymlicka’s point of view, these groups are often territorially concentrated; for example, Quebec and Catalonia are specific geographic areas of Canada and Spain, respectively, and in India, Sikhs are concentrated mostly in the Punjab region. Third, according to Kymlicka, the institutions and practices of these groups cover the full range of human activities; that is, nations are embodied in common economic, political and educational institutions. These institutions are not based only on shared meanings, memories and values but also include common practices and procedures. Put differently, nations are institutionally complete in the sense that their institutions cover many areas of life: they have their own governments, laws, schools and so forth. In Kymlicka’s view, the fourth characteristic that national minorities share is that they usually aspire to either total or partial segregation from the larger society. That is, these groups wish to be a totally or partially separate society, with a different state, governed by their own laws and institutions. Hence, national minorities, in Kymlicka’s view, do not want to integrate into the larger society; rather, they wish to have a certain degree of autonomy. For example, many Quebecois want to have their own government institutions, run in the way they wish, such as schools run in French.

Similarly, the Amish often want to be left alone, without intervention from the state in their internal affairs. More precisely, one of the demands of some Amish communities is to be exempt from the basic educational requirements that other citizens of the USA have to abide by, namely, the minimum literacy requirements. This, as will be explained later on, relates to another set of normative questions about what groups can and cannot impose on their members. To address this problem, Kymlicka distinguishes between protections that a group may legitimately claim against the larger society (external protections) and restrictions that it may not impose on its own members (internal restrictions).

From Kymlicka’s point of view, national minorities can further be sub-divided into liberal and illiberal minorities. The former are those whose demands are compatible with liberal values, that is, whose demands do not violate individuals’ rights and liberties. Examples of liberal national minorities include the Quebecois and the Catalans; these national minorities usually demand the right to use a different language in schools and in their other institutions, which does not necessarily violate any liberal value. Illiberal national minorities, by contrast, are groups that wish to endorse illiberal values, such as the death penalty for gays and lesbians.

d. The Economic/Rational Choice Approach

Rational choice is a theory that aims to explain and predict social behavior. From the viewpoint of rational choice, individuals act self-interestedly, taking into consideration their preferences and the information available to them. Self-interest means that individuals tend to maximize what is valuable to them. In other words, human behavior is goal-oriented: it is directed by individuals’ preferences. For instance, if an individual prefers hot chocolate to a vanilla or a strawberry milkshake and all the options are available, he will choose hot chocolate (other things being equal).

According to the rational choice view, the information available strongly affects behavior. By way of illustration, if an individual does not know that hot chocolate is available, he will not choose it. Thus, individuals act according to their self-interest, information and preferences. If a certain person’s preference is to buy the tastiest hot chocolate, and this person knows that the tastiest hot chocolate is sold in a particular store, then this person will act to achieve her/his own interest by going to that store and buying it there. Obviously, these actions are limited by the options available and by the actions of others. Therefore, if there is no hot chocolate on the market, this person will not be able to buy it; the option is not available because the suppliers decided not to offer hot chocolate. In this sense, an individual’s choices depend on their circumstances and on the actions of others.

With these premises in mind, a possible definition of culture from a rational choice perspective is provided by Laitin (2007, p. 64), whereby culture is:

an equilibrium in a well-defined set of circumstances in which members of a group sharing in common descent, symbolic practices and/or high levels of interaction—and thereby becoming a cultural group—are able to condition their behavior on common knowledge beliefs about the behavior of all members of the group.

This conception of culture therefore has four key features. First, a cultural group is a group in which individuals share certain characteristics, such as language or religion, that differentiate them from other individuals. Second, these individuals share a high degree of common knowledge; that is, the members of a culture have shared information and mutual expectations about the actions and beliefs of others in the group. Third, a cultural equilibrium occurs when individuals have a self-interested incentive to act in accordance with the norms and practices of their culture. These norms and practices can be of any kind, but Laitin (2007) provides an insightful example in the old Chinese tradition of foot binding. Laitin explains that it was very difficult for Chinese women to marry if they had not undergone foot binding. In this case, most Chinese parents forced their daughters to undergo the practice because their interest in finding husbands for their daughters aligned with the cultural practice of foot binding. Finally, a well-defined set of circumstances is a situation in which members’ interactions with each other are ones of coordination rather than conflict. That is, individuals’ actions are arranged in a way that matches or complements each other’s, rather than conflicting.

e. Anti-Essentialism and Cosmopolitanism

The conceptions of culture mentioned above have been strongly criticized by some political theorists. Some of these critics, who direct their criticisms mostly at the semiotic, normative and societal conceptions of culture, argue that these are essentialist views of culture that inaccurately describe social reality. However, as Festenstein (2005) has pointed out, these criticisms are sometimes misplaced: these conceptions of culture need not be essentialist.

In general terms, from an essentialist point of view, there is a distinction between the essential and the accidental properties that different kinds of objects and subjects may have. Accidental properties are properties that are not necessarily present in all members of a certain group of objects or subjects. Essential properties are those that define the objects or subjects; that is, objects or subjects must have these properties in order to be members of a certain group. Furthermore, members of other groups do not have this property or set of properties; otherwise, they too would belong to this group. By way of illustration, to be a bookshelf, an object must be constructed in a way that makes it possible to hold books; this is its essential property. The fact that a specific bookshelf is brown, black or blue is an accidental property: it does not change what the object is and is irrelevant to its definition. Essential properties are necessary and sufficient not only to include a certain object or subject in the group but also to exclude any object or subject that does not share them. Bearing this in mind, it can be concluded that essences are given by differences and similarities, for what defines a subject is what it has in common with the subjects of the same group, which in turn is a characteristic that other groups do not have.

Applied to culture, essentialism means identifying the social characteristics or attributes that make the group what it is and that all members of that group necessarily share. Moreover, these characteristics differentiate members of that group from others and clearly exclude others (Young, 2000a, p. 87). For example, for an essentialist, to classify Muslims as Muslims means to identify a certain characteristic, such as shared practices and beliefs, common to all of the individuals who identify as Muslims. Thus, on an essentialist view, belonging to a certain culture means having a characteristic or set of characteristics that all members share and that no one outside the group has. Hence, from this point of view, the identity of the group is constituted by the set of properties or attributes that are essential to this particular group (Young, 2000a).

According to the critics of essentialism, this theory necessarily makes two wrong assumptions about culture. First, the critics state, essentialists wrongly affirm that cultures are clearly demarcated wholes whose practices and beliefs do not overlap with those of other cultures. Thus, according to this argument, essentialists wrongly affirm that beliefs and practices are exclusive to each culture. This premise is necessary for essentialism because, from an essentialist point of view, different groups cannot share the same essential properties; otherwise, they would belong to the same group. Second, essentialists, according to these critics, wrongly picture cultures as internally uniform or homogeneous. Put differently, essentialists consider that individuals with the same culture all agree and interpret practices in the same way, and that they all place the same value on the practices of the group. This second premise is necessary for essentialist thinking because a group must have a property or set of properties that is predicated of all individuals in order for them to be members of this group.

This essentialist perspective of culture has, however, been widely contested. The general argument is that essentialism stereotypes groups and makes abusive generalizations about what they are. That is to say, according to the critics, essentialism is descriptively inaccurate. Critics contend that the first premise lacks empirical evidence. There is no evidence of any exclusivity in terms of practices and beliefs; in fact, the evidence suggests the opposite: cultures borrow practices and beliefs in order to increase their fitness. Cultures are not bounded, because culture is constantly changing, influenced by local, national and global resources (Phillips, 2007a; 2010). Hence, according to this view, it is not possible to clearly demarcate the boundaries of cultures, because they share a number of practices and beliefs. There is significant overlap between cultures, especially neighboring ones. The distinction between cultures is therefore overemphasized, since the boundaries between them are not clearly demarcated (Benhabib, 2002; Phillips, 2007a).

With regard to the second premise, critics contend that it is false to say that there is internal homogeneity within a group in terms of needs, interests and beliefs. Rather, the social actors of cultural groups have different needs, interests and interpretations of the groups’ beliefs and practices. Furthermore, in many cases, they consider these practices and beliefs contestable, discussable and open to different interpretations. Therefore, there is wide disagreement about cultural meaning (Benhabib, 2002). Anti-essentialists contend that there are too many exceptions to make essentialist claims, that is, a considerable number of counter-examples to such generalizations (Phillips, 2007a; 2010; Shachar, 2001a). As a consequence, some anti-essentialists argue that these categories should be replaced by thinner categories. Thus, rather than speaking about women, one should speak about black women, or lesbian Muslim women.

Taking this into consideration, different, more flexible conceptions of culture have been suggested, perhaps the best known being the cosmopolitan conception of culture defended by Waldron. In Waldron’s view, cultures are dynamic and in continuous creation and interchange (Waldron, 1991). Consequently, cultures overlap with each other, making it impossible to attribute exclusive properties to any single culture and to differentiate between them. In other words, according to this view, there is a mélange of cultures, because people move between cultures, taking advantage of the opportunities that each provides. Hence, individuals live in a kaleidoscope of cultures, within which they enjoy and borrow practices (Waldron, 1996).

A question that arises is whether this criticism entails that any attempt to define culture is mistaken. Some anti-essentialists contend that it does not. Narayan (1998), for example, argues that cultures can be defined if two points are taken into consideration. First, cultures are fluid and constantly changing; hence, any definition of culture should consider that cultures are always in flux. Second, broader categories should be replaced by thinner ones. This means that rather than using terms like ‘African culture’, one should use terms like ‘Tutsi culture in Rwanda’.

2. The Concept of Multiculturalism

In general terms, within contemporary political philosophy, the concept of multiculturalism has been defined in two different ways. Sometimes the term ‘multiculturalism’ is used as a descriptive concept; at other times it is defined as a kind of policy for responding to cultural diversity. What follows first explains the definition of multiculturalism as a descriptive concept and then clarifies what it means to use the term ‘multiculturalism’ to refer to a policy.

a. Multiculturalism as a Descriptive Concept for Society

The term ‘multiculturalism’ is sometimes used to describe a condition of society; more precisely, it is used to describe a society in which a variety of different cultures coexist. Many countries in the world are culturally diverse. Canada is one example, including a variety of cultures such as English Canadians, Quebecois, Native Americans, Amish, Hutterites and Chinese immigrants. China can also be considered culturally diverse. In contemporary China, there are 56 officially recognized ethnic groups, 55 of which are ethnic minorities that make up approximately 8.41 percent of China’s overall population. The remaining group, the Han Chinese, holds majority status (Han, 2013; He, 2006).

Societies can be diverse in a variety of ways, since culture can come in many forms (Gurr, 1993, p. 3). Perhaps the chief ways in which a country can be culturally diverse are by having different religious groups, different linguistic groups, groups that define themselves by their territorial identity and different racial groups.

Religious diversity is a widespread phenomenon in many countries. India is an example of a religiously diverse country, with citizens who are Sikhs, Hindus and Buddhists, among other religious groups. The US is also religiously diverse, including Mormons, Amish, Hutterites, Catholics, Jews and so forth. These groups differ from each other in a variety of ways, including the gods they worship, the holidays and religious festivals they observe and their dress codes.

Linguistic diversity is also widespread. In the 21st century, there are more than 200 countries in the world and around 6000 spoken languages (Laitin, 2007). Linguistic diversity usually results from two kinds of groups. First, it results from immigrants who move to a country where the language spoken is not their native language (Kymlicka, 1995). This is the case for those Cubans and Puerto Ricans who immigrated to the United States; it is also the case for Ukrainian immigrants who moved to Portugal.

The second set of groups that give rise to linguistic diversity are national minorities. The term ‘national minorities’ usually refers to groups that have been settled in the country for a long time but do not share the language of the majority. Some examples include the Quebecois in Canada, the Catalans and Basques in Spain, and the Welsh in the UK. These linguistic groups are usually territorially concentrated; furthermore, minority groups in this category usually demand a high degree of autonomy. In particular, they usually demand the regional power to self-govern, that is, to run their territory as if it were an independent country, or to secede and become a different country.

A third kind of group diversity can result from distinct territorial location. Distinct territorial location does not necessarily mean that members of distinct cultures are, in fact, different; that is, their habits, traditions, customs and so forth need not be significantly different. However, these groups identify themselves as different from others because of the specific geographical area in which they are located. Possibly, in the UK, this is what distinguishes Scots from English. Even though there are historical differences between the Scots and the English, if one assumes that these two groups have little to distinguish them apart from their geographical location, they would fit this third kind of group diversity. As mentioned above, these differences are conceptual; in practice, cultural groups are characterized by a variety of features and not just one.

The fourth kind of group diversity is race. Races are groups whose physical characteristics are imbued with social significance. In other words, race is a socially constructed concept in the sense that it results from individuals giving social significance to a set of characteristics that they regard as salient in a person’s physical appearance, such as skin color, eye color, hair color, bone/jaw structure and so forth. However, the mere existence of different physical characteristics does not mean that there is a multicultural environment or society. For instance, it cannot be claimed that Sweden is multicultural because some Swedes have blue eyes and others green. Physical characteristics create a multicultural environment only when groups strongly identify with them and when they are socially perceived as something that strongly differentiates one group from others. That is, racial cultural diversity is not simply the existence of different physical characteristics. Rather, these physical characteristics must entail a sense of common identity which, in turn, is socially perceived as something that differentiates the members of that group from others. However, this idea of common identity is often exaggerated, as Waldron’s argument suggests. For instance, even though it is often claimed that there is a black culture in the United States, Appiah (1996) denies that such a culture exists, since there is no common identity among black people in the United States. An example of a physical difference that is considered socially significant and therefore creates a multicultural society can be seen in the Tutsis and Hutus of Rwanda. In general terms, Tutsis and Hutus are very similar: they speak the same language, share the same territory and follow the same traditions. Nevertheless, Tutsis are usually taller and thinner than Hutus. The social significance given to these physical differences is sufficient for members of both groups, broadly speaking, to identify as members of one group or the other and, subsequently, to oppose each other.

Of course, groups are usually not identified only by being linguistically different, territorially concentrated or religiously distinct. In fact, most groups have more than one of these characteristics. For instance, Sikhs in India, besides being religiously distinct, are also characterized, in general terms, by their geographical location: they are concentrated in the Punjab region. The Uyghurs of China have their own language, are usually Muslims and are mostly located in Xinjiang. Thus, the classification is helpful for understanding the characteristics of each group, but it does not mean that any group is defined simply by a single characteristic.

b. Multiculturalism as a Policy

The term ‘multiculturalism’ can also refer to a kind of policy. Such policies have two main characteristics. First, they aim to address the different demands of cultural groups; that is, they respond to the various normative challenges (ethnic conflict, internal illiberalism, federal autonomy, and so forth) that arise as a result of cultural diversity. For example, such policies address the normative challenges raised by minority groups like the Quebecois, who wish to have their own institutions in a language different from that of the rest of Canada. In contrast with redistributive policies, multicultural policies are not primarily about distributive justice, that is, about who gets what share of resources, although they may involve redistribution incidentally (Fraser, 2001). Multicultural policies aim to correct disadvantages that some individuals suffer as a result of their cultural identity. For instance, they may aim to correct a disadvantage that results from someone’s being a member of a certain religion. In the case of Muslims living in a predominantly Christian country, this can mean addressing their demand for public holidays, different from those of the majority, on which to celebrate their own festivals, such as Eid-al-Fitr.

Second, multicultural policies aim to provide groups with the means by which individuals can pursue their cultural differences. Put differently, the objectives of multicultural policies are the preservation, allowance or celebration of differences between groups. Consequently, multicultural policies contrast with assimilation. According to the assimilationist view, it is acceptable that people are different, but the final goal of policy should be to make the minority group become part of the majority group, that is, to be accepted by those in the majority group and somehow to find a consensus position between different cultures. By contrast, multiculturalism acknowledges that people have different ways of life and holds that, in general terms, the state ought not to assimilate these groups but to give them the tools for pursuing their own ways of life or culture. From a multiculturalist point of view, then, the final objective of policy is neither the standardization of cultural forms nor any form of uniformity or homogeneity; rather, it is to allow groups to pursue their differences and to give them the means to do so.

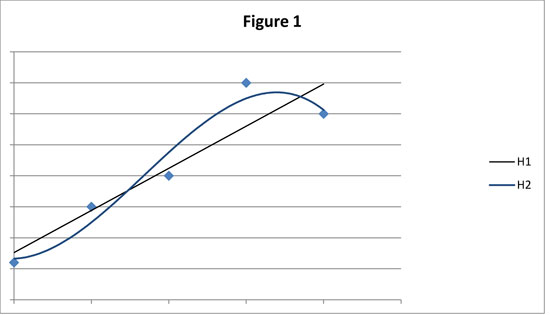

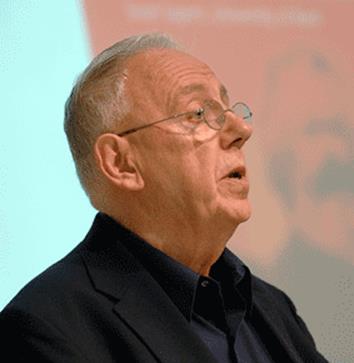

According to Kymlicka (1999a), there have been two waves of writings on multiculturalism in contemporary liberal political philosophy. The discussion of what policies ought to be undertaken to protect minority cultures belongs to what Kymlicka calls the first wave of writings on multiculturalism. In his view (1999a, p. 112), this first wave focused on assessing to what extent it is just, from a liberal point of view, to give groups rights so that they can pursue their cultural differences. In this first wave, contemporary liberal political philosophers discussed what kinds of inequalities exist between majorities and minorities, and how these should be addressed. In other words, the discussion concerned what kinds of intergroup inequalities exist and what the state should do about them. In general terms, contemporary liberal political philosophers who have written about this topic have taken two different stances. On the one hand, some argue that state institutions should be blind to difference and that individuals should be given a uniform set of rights and liberties. In these authors’ views, cultural diversity, religious freedom and so forth are sufficiently protected by these rights and liberties, especially by freedom of association and freedom of conscience. Therefore, those who support a uniform set of rights and liberties contend that ascribing rights on the basis of group membership is a discriminatory and immoral policy that creates undesirable and unjust hierarchies of citizenship (Kymlicka, 1999a, pp. 112-113). Thus, in the view of these philosophers, the state has a duty not to involve itself in the cultural character of society.

On the other hand, some philosophers have taken the opposite view. For example, some contemporary liberal political philosophers are more sympathetic to the idea of ascribing rights to groups and have defended difference-sensitive policies. As Kymlicka (1999a, p. 112) points out, these philosophers have tried to show that difference-sensitive rules are not inherently unjust. In general terms, they argue that a regime of difference-sensitive policies does not necessarily entail a hierarchy of citizenship or unfair privileges for some groups. Rather, they argue, difference-sensitive policies aim to correct intergroup inequalities and disadvantages in the cultural market. Moreover, some of these philosophers contend that difference-blind policies favor the needs, interests and identities of the majority (Kymlicka, 1999a, pp. 112-114). The position of these philosophers, who consider that groups are entitled to special rights, can be classified as a defense of multicultural citizenship.

Those who defend special rights for groups have suggested a variety of policies. In his book The Multiculturalism of Fear, Levy (2000, pp. 125-160) systematically sets out the kinds of difference-sensitive policies that are usually discussed in the literature. According to him, these policies can be divided into eight categories: exemptions, assistance, symbolic claims, recognition/enforcement, special representation, self-government, external rules and internal rules.

Exemptions from laws are usually rights based on a negative liberty: non-interference by the state in a specific matter where interference would impose a significant burden on a certain group. To put it another way, an exemption applies when the state abstains from interfering with, or imposing obligations on, a group that wishes to engage in a certain practice, in order to lessen that group’s burden. Exemptions can also limit someone else’s liberty to impose costs on a certain group. Imagine a general law that grants corporations the right to impose a dress code on their employees. Such a law would burden those groups for whom dressing in a certain manner (that is, differently from the way the company requires) is a very important value. For example, for many Sikh men and Muslim women it is very important to wear turbans and headscarves, respectively. Hence, it can be claimed that giving these individuals only the choice between finding another job and abandoning their own dress imposes a significant burden on them; given that giving up a certain way of dressing is sometimes much harder for Sikh men and Muslim women than for a Westerner, and that doing so would undermine their identity, an exemption may be justified (Levy, 2000, pp. 128-133). Such exemptions would allow these groups to engage in practices that are not permitted for the majority of citizens.

Assistance rights aim to help individuals overcome the obstacles they face because they belong to a certain group. In other words, assistance rights aim to rectify disadvantages that certain individuals experience, compared with the majority, as a result of their membership of a certain group. This can mean funding individuals to pursue their goals or using positive discrimination to help them in a variety of ways. Language rights are an example of this approach. Suppose that some individuals from Catalonia cannot speak Spanish. An assistance measure would be to staff public institutions with people who speak both Spanish and Catalan, so that they can serve people from the majority as well as from the minority language group. Another example would be awarding subsidies that help groups preserve their cohesion by maintaining their practices and beliefs, and that allow individuals from a minority to participate in public institutions as full citizens. Most of these measures are temporary, but they need not be (language rights, for example, are often not temporary) (Levy, 2000, pp. 133-137).

Symbolic claims concern matters that do not affect individuals’ lives directly or seriously, but whose resolution may improve relations between individuals from different groups. In a multicultural country, where there are multiple religions, ethnicities and ways of life, it may not make sense to have symbols that represent only one specific culture. Symbolic claims require, on grounds of equality, that all the cultures in a country be included in that country’s symbols. An example would be including Catholic, Sikh, Muslim, Protestant, Welsh, Northern Irish, Scottish, and English symbols in both the British flag and the national anthem. Failing to integrate minority symbols may be regarded as showing a lack of respect for minorities and treating them unequally.

Recognition is a demand for integrating a specific law or cultural practice into the larger society. Groups that want a specific law integrated can ask for it to become part of the wider legal system. Hence, Sharia law could form part of divorce law for Muslims, while Aboriginal law could run in conjunction with Australian property rights law. Recognition could also involve a requirement to include certain groups in the history books used in schools, for example, to include the history of Indian and Pakistani immigrants in British history textbooks. Failing to integrate such laws may place a substantial burden on individuals’ identity. In the Muslim case, because family law is of crucial importance to their identity, Muslims may be considerably burdened by having to abide by a Western conception of divorce. In the Aboriginal case, because hunting is essential to their way of life, other people’s ownership of the land on which they hunt may undermine Aboriginal culture.

Special representation rights are designed to protect groups that have been systematically underrepresented and disadvantaged in the larger society. Minority groups may be underrepresented in a society’s institutions, and placing them in a position of equal bargaining power requires providing special rights to the members of these groups. These rights thus aim to defend individuals’ interests more equally, by guaranteeing certain privileges or by preventing discrimination. One way to achieve this is by setting aside extra seats for minorities in parliament (Kymlicka, 1995, pp. 131-152; Levy, 2000, pp. 150-154).

Self-government rights are usually claimed by national minorities (for example, the Pueblo Indians and the Quebecois), which usually demand some degree of autonomy and self-determination. This sometimes implies demands for exclusive occupation of land and territorial jurisdiction. Groups may need these rights because the autonomy they confer is a necessary condition for individuals to develop their cultures, which is in the best interest of a culture’s members. More precisely, a specific educational curriculum, language right or jurisdiction over a territory may be necessary for the survival and prosperity of a particular culture and its members. This is compatible with both freedom and equality: with freedom because it gives individuals access to their culture and allows them to make their own choices, and with equality because it places individuals on an equal footing in terms of cultural access (Kymlicka, 1995, pp. 27-30; Levy, 2000, pp. 137-138).

What Levy classifies as external rules can be considered a kind of self-government right. They involve restricting other people’s freedom in order to preserve a certain culture. For instance, Aborigines in Australia employ external safeguards to protect their land: outsiders’ freedom of movement within Aboriginal territory is limited, and outsiders do not have the right to buy Aboriginal land.

Demands for internal rules aim at restricting individuals’ behavior within the group. Internal rules usually mean stigmatizing, ostracizing or excommunicating individuals who have not abided by the group’s rules. They thus give groups the power to treat their members in a way that is not acceptable for the rest of society. One example is a rule under which an individual who marries someone from another group is expelled from his own group. Another is the case of the Amish, who want their children to leave school earlier than the rest of society. In contrast to external rules, internal rules restrict the freedom of members of the group rather than that of outsiders. Whether internal rules are compatible with liberal values is controversial. On the one hand, authors like Kymlicka affirm that they are not, because they undermine individuals’ autonomy, which is, in his view, a central liberal value. On the other hand, philosophers like Kukathas contend that liberals are committed to tolerance and should therefore accept some internal restrictions.

i. Multicultural Citizenship

Generally speaking, the authors who defend multicultural citizenship have five points in common. First, they all contend that the state has a duty to support laws that defend the basic legal, civil and political rights of its citizens. Second, they argue that the state should participate in shaping the cultural character of society, so its laws and policies should aim to protect culture. Third, they contend that culture is normatively relevant. Consequently, and this is the fourth common feature, individuals’ interest in culture is strong enough to warrant state support. Fifth, they all defend difference-sensitive (multicultural) citizenship policies for protecting culture. Among the philosophers who defend multicultural citizenship are Taylor, Kymlicka and Shachar.

1. Taylor’s Politics of Recognition

According to Taylor, there are two forms of recognition: intimate recognition and public recognition. Taylor (1994b, p. 37) mainly discusses public recognition, that is, recognition in the public sphere. This form of recognition concerns respect and esteem for one’s identity in the public realm; being misrecognized in the public realm means having one’s identity disrespected in such a way that one is treated as a second-class citizen. Being misrecognized, in this sense, is having an unequal citizenship status by virtue of one’s identity. Hence, someone is misrecognized in the public sphere if they suffer a legal disadvantage that results from their identity. To have respect and esteem in the public sphere means to have citizenship rights that do not disadvantage one’s identity. In Taylor’s view, misrecognition can be a form of oppression and can lead those who are misrecognized to form self-hating images of themselves. Bearing this in mind, recognition is a vital human need, because the relation between recognition and identity (the way people understand who they are) is relatively strong; hence, misrecognition or non-recognition may have seriously harmful effects on individuals.

In order to discuss the best way to achieve recognition in the public realm, Taylor draws a distinction between procedural and non-procedural forms of liberalism. He affirms that, according to the procedural version of liberalism, a just society is one where all individuals have a uniform set of rights and freedoms, and having different rights for different people creates distinctions between first-class and second-class citizens: this liberalism is only committed to individual rights and rejects the idea of collective rights. The state, according to this version of liberalism, should not be involved in the cultural character of society and the procedures of this society must be independent of any particular set of values held by the citizens of that polity. In other words, the state should be neutral and independent of any conception of the good life.

In Taylor’s (1994b, p. 60) view, procedural liberalism is inhospitable to difference and unable to accommodate different cultures. Taylor believes that, in some cases, collective goals need support if they are to be achieved. Sometimes cultural communities need power over certain jurisdictions so that they can promote their own culture, something that procedural liberalism, according to Taylor, does not offer. Because Taylor considers recognition important, a liberalism that is inhospitable to difference should be rejected; instead, in Taylor’s view, liberals should endorse a non-procedural liberalism that is involved in the cultural character of society in a way that enhances cultural diversity and is not hostile to difference. From Taylor’s point of view, this non-procedural liberalism is not neutral between different ways of life and is grounded in judgments about what the good life is. According to Taylor, this liberalism takes into account differences between individuals and groups and, by doing so, creates an environment that is not hostile to the flourishing of different cultures. Engaging in policies that promote culture is, in Taylor’s view, extremely important; cultural communities deserve protection because they provide their members with the basis of their identities. The language of a culture provides the framework for answering the question of who one is. Taylor believes that identity is strongly influenced by culture; the language of one’s culture provides a moral and social framework that individuals need in order to make sense of their lives. Therefore, recognizing and protecting individuals’ cultural communities is required for respecting and preserving their identities. However, in Taylor’s view, this commitment to promoting difference is acceptable only if the measures taken are consistent with what he considers to be fundamental rights. Taylor specifically mentions the rights to life, liberty, due process, free speech and free practice of religion.

From Taylor’s point of view, this non-procedural liberalism has implications for public policy. It means that power should be decentralized so that communities can flourish. However, what decentralization and non-procedural liberalism imply in practice depends on the context; countries with different kinds of minorities may face different implications. Taylor writes mostly about the Canadian context, and he believes that in this context the best policy is a form of federalism. In his view, Quebec should be given self-government rights so that it has power over a certain number of policies. In particular, Taylor affirms that Quebec should have sovereign power over art, technology, the economy, labor, communications, agriculture, and fisheries. It should share power with the majority over immigration, industrial policy and environmental policy, while control over defense, external affairs and currency would belong to the federal government. In the case of language policy, Taylor contends that it is sometimes justified to violate liberal values, like freedom of expression, in order to protect a community’s language. For instance, in Quebec the state may restrict communications in English in order to promote the French language. Another example is that the children of French-speaking parents do not have the option of choosing a language of instruction other than French. It is important to emphasize that, in Taylor’s view, federalism is not a necessary implication of non-procedural liberalism. Federalism is not at the core of the idea of recognition; rather, it is a system that Taylor considers appropriate in the Canadian context, which does not mean that it is a good option in all contexts.

2. Kymlicka’s Multicultural Liberalism

Kymlicka believes that group rights are compatible with, and promote, the liberal values of freedom and equality. Accordingly, he offers arguments that connect freedom and equality with group rights. The argument based on freedom is closely related to his idea of societal culture. In Kymlicka’s perspective (1995, p. 80), societal cultures promote freedom because they give individuals the groundwork from which they can make choices. In particular, according to Kymlicka, because societal cultures provide a framework of meaningful ways of life, they provide the social conditions that are necessary for individuals to make autonomous choices. Autonomy, in turn, is possible only if these social conditions are those of individuals’ own societal cultures.

Taking this on board, Kymlicka’s argument is that societal cultures ought to be protected because they promote the liberal value of autonomy; they promote this value because, in Kymlicka’s perspective, they provide a context of choice that is necessary for individuals to exercise their freedom. Put differently, from Kymlicka’s point of view, individuals’ own cultures provide the groundwork that individuals need in order to make free choices. Consequently, if liberals are committed to this value, they are committed to protecting the conditions for achieving it, namely societal cultures. This means that if group rights are necessary for protecting this context of choice, they are justified from a liberal point of view, since by protecting the context of choice they promote autonomy. As mentioned above, of the three sources of diversity, only national minorities have societal cultures. Hence, this argument justifies group rights only for national minorities, in order to protect their societal cultures. In Kymlicka’s view, the context of choice is provided by access to one’s own culture, not just to any culture. So, according to this view, for someone from Quebec, the societal culture of Catalonia does not provide a context of choice; likewise, for someone from an Amish community, the societal culture of Sikhs in India does not provide a context of choice.

The three arguments based on equality that Kymlicka offers for defending group rights rely on a different line of reasoning. The first argument starts from the observation that the state inevitably involves itself in the cultural character of society and cannot be completely neutral. Kymlicka affirms that government decisions, like which public holidays to have, unavoidably promote a certain cultural identity. Consequently, individuals who do not share the culture promoted by the state are disadvantaged; in other words, they are in an unequal position. More precisely, on the basis of the unequal treatment that results from this inevitable involvement, Kymlicka contends that uniform laws giving the same rights to all individuals from different cultures treat individuals unequally. To take the example of public holidays, the establishment of Christian public holidays disadvantages Muslims, because one of their main festivals, Eid-al-Fitr, does not fall on a public holiday. Bearing this in mind, Kymlicka argues that if liberals are committed to equality, they should endorse public policies that do not advantage some individuals over others; this, in turn, means that, to equalize the status of different groups, the state ought to grant different rights to different groups.

In Kymlicka’s view, group rights can correct these inequalities by providing the necessary and sufficient means by which individuals can pursue their culture. Although the argument from autonomy applies only to national minorities, this argument based on equality applies to both national minorities and polyethnic groups. Inequalities between majorities and national minorities can take many shapes, but an example that Kymlicka often uses is inequality in language rights. From his point of view, national linguistic minorities like those of Quebec and Catalonia would be treated unequally if they did not have the right to their own institutions in their national language. The debate about Christian and Muslim holidays is an example of inequality between majorities and polyethnic groups. Taking this on board, it is Kymlicka’s (1995) conviction that both kinds of diversity can be treated unequally by a set of uniform laws. As a result, both are entitled to group rights on grounds of promoting equality between groups within a liberal state.

Kymlicka’s second argument based on equality is that if all individuals in society should have access to a range of cultural options, then the state is committed to promoting a variety of cultures so that individuals have more options to choose from. This argument, however, is directed not at minorities but at majorities, and it does not refer to a need of the minority; instead, it refers to how cultural diversity can make individuals’ lives better in general, by providing more options. Furthermore, Kymlicka (1995, p. 121) considers that, because it is difficult to change one’s culture, adopting another culture would not be a very attractive option for everyone.

The third argument is that, according to Kymlicka, liberals should respect historical agreements. In Kymlicka’s view, many of the rights that minority cultures have in the early 21st century are the result of historical agreements. If the state is to treat individuals from different cultures with equal respect, then it should respect these agreements.

3. Shachar’s Transformative Accommodation

Shachar is another philosopher who has defended a form of multicultural citizenship. She endorses a joint governance model that she calls transformative accommodation. According to Shachar, this model relies on four assumptions. First, individuals have multiple identities. For example, Malcolm X was a Muslim, a man, an African-American and a heterosexual. Hence, individuals have multiple affiliations that play a role in their identities. The second assumption is that both the group and the state have normative and legal reasons to shape behavior. There may be a variety of reasons for this, but at least one of them is that individuals have a strong interest both in preserving their cultures and in protecting their individual rights. Third, what the state does and what the group does affect each other. For instance, the laws that the state makes about same-sex marriage have an impact on heterosexist minority groups; the heterosexism of minority groups, like the hate speech of the Westboro Baptist Church, also has an impact on the state. Fourth, both the state and the group have an interest in supporting their members (Shachar, 2001a, p. 118).

On top of these four assumptions, transformative accommodation is based on three core principles: sub-matter allocation of authority, no monopoly, and the establishment of clearly delineated options (Shachar, 2001a, pp. 118-119). The sub-matter allocation of authority principle rejects as incorrect the holistic view that contested social arenas (family law, criminal law, employment law and so forth) are indivisible. According to this principle, these social arenas can be divided into sub-matters, that is, into multiple separable but complementary components (Shachar, 2001a, pp. 51-54). In practice, this means that norms and decisions about disputed social matters can be determined separately. In other words, each area of law contains sub-areas that are partially independent; as a result, a decision in one sub-area can be made independently of a decision in another. In Shachar’s view, family law, for example, can be divided into demarcating and distributive sub-matters. In her view (2001a, pp. 119-120), the demarcating sub-matter of family law is where group membership boundaries are defined; that is, it is in this sub-matter that the necessary and sufficient attributes (biological, ethnic, territorial, ideological and so forth) for membership are decided. The distributive sub-matter concerns the distribution of resources. For instance, it would be in the distributive sub-matter that who gets what after divorce would be decided.

To illustrate how this principle would work in practice, Shachar repeatedly refers to a legal dispute between a Native-American tribe and one of its members: the case of Julia Martinez. Julia Martinez was a member of the Santa Clara Pueblo tribe whose daughter’s membership of the group was rejected, a rejection that had tragic consequences. In 1941, Julia Martinez, who was the daughter of members of the Santa Clara Pueblo tribe, married a man from outside the group. With this man, she had a daughter, who was raised on the Pueblo reservation, where she participated in and learned the norms and practices of the tribe. However, according to the tribe’s law, only the offspring of male members are considered members; hence, although Julia Martinez’s daughter was raised on the reservation, she was not, in the eyes of the tribe’s leaders, a tribe member. When Julia Martinez’s daughter fell ill, she had to go to the emergency section of the Indian Health Services. There, she was refused emergency treatment on the grounds that she was not a member of the tribe, a refusal that later caused her death (Shachar, 2001a, pp. 18-20). According to the sub-matters principle, in the case of the Santa Clara Pueblo tribe, it would be the legislators in the demarcating sub-matter who would determine whether Julia Martinez’s daughter was a member of the tribe (Shachar, 2001a, pp. 52-54). By contrast, her entitlement to use the Indian Health Services would be decided in the distributive sub-matter.

By establishing the second principle, the no monopoly rule, Shachar argues that jurisdictional powers should be divided between the state and the group. According to this principle, neither the state nor the group should hold absolute power over the contested social arenas. More precisely, the group should hold power over one sub-matter while the state should hold power over another. Consequently, legal decisions would result from an interdependent and cooperative relationship between the group and the state (Shachar, 2001a, pp. 120-122). In the case of family law, if there is a divorce dispute, the state could take control of distribution (for example, property division after divorce) and the group of demarcation (for example, who can request a divorce and on what grounds), or vice versa.

The third principle defended by Shachar is the establishment of clearly delineated options. According to this principle, individuals should have clear options to choose between the state’s jurisdiction and the group’s jurisdiction. In particular, this means that individuals can either decide to abide by a jurisdiction or refuse to abide by it and exit that jurisdiction at predefined reversal points. These predefined reversal points are set by an agreement between the state and the group, which specifies when, and under what circumstances, individuals can exit the group.

ii. Negative Universalism

The other approach to the philosophical discussion about justice between groups can be called negative universalism (Festenstein, 2005). According to Festenstein (2005), two philosophers who endorse this approach are Barry and Kukathas. Although the philosophies of Barry and Kukathas differ, as negative universalists they share four features.

First, both defend the neutrality of the state among different conceptions of the good. That is, individuals should be free to pursue their own conceptions of the good. Second, this neutrality does not have the same impact on all citizens’ lives; some will be better off than others. Nevertheless, according to these philosophers, this is not an argument against the liberal value of neutrality, because equality of impact is not a realistic goal. Third, liberal principles establish a set of ‘basic civil and political rights’, and deviations from uniform treatment may be justified by appeal to fundamental rights such as freedom of thought and association. However, basic civil and political rights and the deviations justified in this way can diverge substantially when both are permitted at the same time. Fourth, negative universalists are skeptical about the normative value of culture and about providing differentiated rights to individuals (Festenstein, 2005, pp. 91-92).

1. Barry’s Liberal Egalitarianism

Barry’s view is that liberal egalitarianism is the philosophical doctrine that offers the most coherent and just approach to protecting these interests. In addition, from his viewpoint, liberal egalitarianism offers the normative groundwork for responding to the challenges that illiberal and heterosexist cultural groups raise. His liberal egalitarian approach, in particular, rests on three core values: neutrality, freedom and equality.

According to Barry, neutrality means that states have a duty not to promote or favor some conceptions of the good over others. In general terms, this means that state policy should not promote the survival and flourishing of a conception of the good, a language, a religion and so forth. Rather, neutrality requires that states be committed to individual rights without any sort of collective goal, apart from those that correspond to universal basic interests. When the state favors a specific conception of the good by assisting it, it violates neutrality (Barry, 2001, pp. 28, 29, 122). In Barry’s version of liberal neutrality, conceptions of the good are a private, extra-political matter that concerns personal affairs (Barry, 1995, p. 118). Hence, non-secular states, like Iran or Saudi Arabia, violate neutrality in Barry’s sense because they promote a specific religion.

The second important value for Barry, freedom, means the absence of paternalistic restrictions on pursuing one’s own conception of the good. This implies that individuals should be given considerable independence to pursue their own conceptions of the good. According to Barry, all individuals should be given the means for this pursuit. In practice, this means that all individuals are entitled to freedoms that enable them to pursue their own conceptions of the good and lifestyles; in particular, Barry considers that freedom of association and freedom of conscience play a fundamental role in enabling individuals in this pursuit. Individuals may choose to live a lifestyle that liberals disapprove of; however, Barry (2001, p. 161) considers that bad choices are something that individuals in a liberal society are entitled to make.

Barry’s third commitment, the one to equality, translates into two core ideas. First, treating people equally means furnishing individuals with an equal set of basic legal, political and civil rights. That is, equality requires endorsing a unitary conception of citizenship. Second, the commitment to equality entails that the state has the duty to promote equality of opportunity. For Barry, there is equal opportunity when uniform rules generate the same set of choices for all individuals (Barry, 2005). This means that there is equality of opportunity if and only if, in a specific situation, different individuals have the capacity to make the choice that is needed to achieve their aims. For example, imagine that Sam and John both want to become medical doctors, and that Sam is from a working-class family and John from an upper-class family. Sam does not have the economic resources to study, but John does. In such a situation, assuming that the economic factor is the only relevant factor for equalizing choice, achieving equality of opportunity requires that Sam be given an amount of economic resources similar to John’s, so that he has the same capacity to choose a career in medicine. Therefore, equality of opportunity requires that individuals be treated according to their needs. Barry also argues that equality of opportunity entails that the state has a duty to equalize choice sets, not to equalize the outcomes that result from the decisions people make within those choice sets.

Taking this normative groundwork on board, Barry offers six arguments against giving rights to cultural groups. Four of these are a result of his liberal theory; the other two are independent arguments not related to his theory.

The first argument that Barry presents against difference-sensitive policies for cultural groups is that such policies would violate neutrality. For Barry, neutrality requires that the state have little or no involvement in the cultural character of society; hence, if the state privileged a group, either by promoting this group’s culture or by granting the group rights that differ from those of other groups, it would be violating neutrality. Barry believes that liberals are committed to non-interference in the cultural character of society; as a result, liberalism is incompatible with difference-sensitive policies. In practice, this implies that any kind of exemption, recognition, assistance or other group right demanded by a cultural group should be denied on the grounds of neutrality. For example, in Barry’s view, if a state does not criminalize homosexuality and the governing body of a minority religious group asks for recognition of its religious courts, which convict its gay members for same-sex acts, the state should not grant this recognition, because doing so would mean giving a different right to a particular group and would therefore violate neutrality.

The second argument provided by Barry against group rights is that the unequal impact of policies on cultures is not an interference with freedom to pursue one’s own conception of the good. In Barry’s view, laws have the aim of protecting some interests against others; the fact that they have a different impact on a specific culture is not a sign of unfairness; rather, it is just a side effect of having laws (Barry, 2001, p. 34).

Third, in Barry’s view, the only group rights that should be conceded, especially exemptions from the law, are those that protect cultural practices overlapping with universal human interests. In other words, if a group right, and in particular an exemption from the law, promotes a universal human interest, then it is acceptable (Barry, 2001, pp. 48-50). For instance, Muslim girls cannot be refused education on the grounds of a minor issue such as dress codes, because education is a universal human interest.

Fourth, Barry contends that because neither culture nor cultural demands are a universal interest per se, the unequal treatment that is acceptable for universal interests does not apply to them (Barry, 2001, pp. 12-13, 16). To recall, Barry’s conception of equality of opportunity entails that individuals can be treated unequally so that their choice sets are equalized. However, Barry affirms that choice sets should be equalized only when they concern universal interests, which culture is not. In short, exemptions can and should be granted for universal or higher-order interests but not for particular interests.

These four arguments depend on Barry’s liberal theory, and specifically on his conceptions of freedom, neutrality and equality. To these arguments, he adds two ad hoc arguments. The first is that difference-sensitive rights that aim to protect economic resources are temporary, while cultural rights are permanent. This means that those who need economic resources to equalize their choice sets only need this aid temporarily (Barry, 2001, pp. 12-13). By contrast, according to Barry, group rights to protect culture are required permanently. In the case of the Sikhs, for example, a permanent law exempting Sikhs from wearing helmets would be necessary. The second ad hoc argument is that when there is a reasonable argument for a rule, the rule should be applied without exception. If there is a case for an exception, then the rule should be abandoned. According to Barry, it is philosophically incoherent to provide a universal justification for a rule and then relativize the reason just given (Barry, 2001, pp. 32-50).

2. Kukathas’ Libertarianism

Kukathas’ approach to multiculturalism is, broadly speaking, based on two ideas: what he considers to be human beings’ most fundamental interest, and his theory of freedom of association. Kukathas considers that human beings have only one fundamental interest: the interest in living according to their conscience. In his opinion, the reason for this is, in part, that human beings are primarily moral beings and are therefore disposed to direct their lives and purposes towards what they consider to be morally worthwhile. Consequently, from Kukathas’ point of view, individuals find it difficult to act against their conscience. In Kukathas’ (2003b, p. 53) view, this tendency to govern one’s own conduct primarily by conscience, and the difficulty of acting against one’s moral beliefs, can be observed and has empirical support. An additional reason why acting according to one’s own conscience is a fundamental interest is that, according to Kukathas, the meaning of life is given by conscience (Kukathas, 2003b, p. 55). Hence, Kukathas considers that identity is connected with morality, because what individuals are is their self-interpretation, which is ultimately provided by moral evaluation. Note that this says nothing about the content of each person’s morality. A human rights activist and a terrorist can both be acting according to their conscience even if they are doing opposite things. Because conscience is a fundamental interest, Kukathas contends that the state has a duty to protect this interest.

The second important aspect of Kukathas’ philosophy is his defense of freedom of association. According to Kukathas, freedom of association is primarily defined as the right to exit groups, that is, freedom of association exists when individuals have the freedom to leave or dissociate from a group they are part of. In other words, essential to this version of freedom of association is the idea that individuals should not be forced to remain members of communities they do not wish to associate with. Therefore, according to this definition, freedom of association is not about the freedom of entering a specific group; rather, it is about the freedom to leave those groups that individuals want to dissociate from (Kukathas, 2003b, p. 95).

According to Kukathas, there are two necessary and jointly sufficient conditions for individuals to have the freedom to exit. These conditions are that individuals are not physically barred from leaving, and that there is a place, similar to a market society, to which they can exit. From Kukathas’ point of view, a place to go is a necessary requirement for exit because it would not be credible to claim that individuals had a right to exit if all communities were organized on the basis of kinship, for the only options available would be conformity to the rules or loneliness.