African Philosophical Perspectives on the Meaning of Life

The question of life’s meaning is a perennial one. It can be claimed that all other questions, whether philosophical, scientific, or religious, are attempts to offer some glimpse into the meaning – in this sense, the purpose – of human existence. In philosophical circles, the question of life’s meaning has received intense attention, from the works of Qoheleth, the supposed writer of the Biblical book of Ecclesiastes, to the works of pessimists such as Schopenhauer, to the philosophies of existentialist thinkers, especially Albert Camus and Søren Kierkegaard, and on to twenty-first-century thinkers on the topic such as John Cottingham and Thaddeus Metz. African scholars are not left out, and this article provides a brief overview of some of the major theories of meaning that they have proposed. It does so by tying together ideas from African philosophical literature in a bid to present a brief, systematic summary of African views about meaningfulness. From these ideas, one can identify seven theories of meaning in African philosophical literature: the African God-purpose theory of meaning, the vital force theory of meaning, the communal normative theory of meaning, the love theory of meaning, the (Yoruba) cultural cluster theory of meaning, the personhood-based theory of meaningfulness, and the conversational theory of meaning. The examination of these theories begins by explaining the meaning of “meaning” and the distinction between meaning in life and meaning of life.

Table of Contents

- Explaining Some Important Concepts

- African Philosophy and the Meaning of Life

- Conclusion

- References and Further Reading

1. Explaining Some Important Concepts

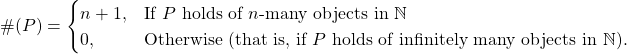

What is meant by the terms meaning and meaningfulness? The concept of meaning is whatever all the competing conceptions of meaning are about. In the literature, meaning is thought of in terms of purpose (received or determined teleological ends that are worth pursuing for their own sake), transcendence (things beyond our animal nature), normative reasons for action, and so forth. These singular or monistic views, while interesting, have their flaws: none of them captures all and only the competing intuitions about meaning. This is why Thaddeus Metz proposed a pluralistic account, according to which meaning consists of:

roughly, a cluster of ideas that overlap with one another. To ask about meaning … is to pose questions such as: which ends, besides one’s own pleasure as such are most worth pursuing for their own sake; how to transcend one’s animal nature; and what in life merits great esteem or admiration (Metz, 2013, p. 34).

It is easy to agree with Metz’s family resemblance theory, since its pluralism accommodates most theories or conceptions of meaning while rejecting peripheral ideas, such as pleasure or happiness, that do not on their own possess the quality of a ‘final value’ that the usual understanding of meaning requires. Even so, subjective accounts of meaning appear to be missing from Metz’s concept, and it says little about the meaning of life. These gaps have led Attoe (2021) to add two further variables to Metz’s family of values. The first is the subjective pursuit of those ends that an individual finds worth pursuing (insofar as the individual considers those things or values to be ends in themselves). A cursory glance at Metz’s approach shows that, although it is tenable, it is more objectivist than all-encompassing. By inserting subjectivity into the approach, Attoe immediately accommodates subjectivist views about meaning, which are often thought to be incompatible with ideas about meaning (see Nagel, 1979, p. 16). The second variable that Attoe (2021) proposes is coherence. By coherence, he means the identification of a narrative that ties together an individual’s life (perhaps those moments of meaningfulness or those actions that dot her life) such that the individual can adjudge her whole life as meaningful. This feature bears more on ideas about the meaning of life.

With this in mind, it is expedient to draw a further distinction between meaning in life and the meaning of life, as these concepts mean two different things and will be used in different ways later in this article. By meaning in life is meant those instances of meaning that may dot an individual’s life. Thus, marriage to a loved one or the successful completion of a degree may subsist as a meaningful act or a moment of meaningfulness. With regard to the meaning of life, some, like Richard Taylor, describe it as involving the meaning of existence, especially with regard to cosmic life, biological life, or human life/existence specifically. One can also use the term ‘meaning of’ in a narrower sense, where it delineates a judgment on what makes the life of a human person, considered as a whole (especially within the confines of an individual’s lifetime), meaningful (Attoe, 2021). This understanding is similar to Nagel’s understanding of the meaning of life. The distinction is important because instances of meaning do not always settle the meaning of an individual’s entire life. So, obtaining a degree can be a moment of meaningfulness (meaning in), but it would be hard to consider an individual’s life as a whole to be meaningful (meaning of) if that individual spent his time killing others for no reason, despite gaining a degree or marrying a loved one.

2. African Philosophy and the Meaning of Life

Having drawn these distinctions, the following section delves into what could be considered African conceptions of the meaning of life. To fully understand the ideas that will be put forth, a short detour is necessary to describe the metaphysics undergirding African thought, as this gives the reader the proper lens through which to see the African view(s). Those who are new to African metaphysics might easily imagine that any talk of it is predicated on unsophisticated talk about fantastic religious myths and, perhaps, some witchcraft or voodoo. In fact, African metaphysics involves something deeper, and it is this metaphysics that usually guides the traditional African worldview.

African metaphysics is grounded in an interesting version of empiricism that allows a monistic-cum-harmonious relationship between the material and spiritual aspects of reality. It is empirical because most African thinkers are willing to grant that both material and spiritual realities manifest themselves in everyday life. Indeed, it is because one can point to certain acts as manifestations of spiritual realities that metaphysicians of this type are quick to pronounce the existence of such realities and to recognise those acts as spiritual. In other words, knowledge of the spiritual develops from certain manifestations that are verifiable by the senses. That something is described as spiritual does not, therefore, diminish its empirical worth, since empiricism holds that knowledge, of whatever type, is derived from experience.

Unlike much of Western metaphysics, which, as Innocent Asouzu states, is inundated with all sorts of bifurcations, disjunctions and essentialisations, African metaphysics regards the fragmentations we see in reality as evidence of a harmonious, complementary relationship between and among realities. There is a tacit acknowledgment of the interplay between matter and spirit, or between realities across any spectrum, such that each facet of reality is seen as equally important and the divide between material and spiritual objects is seen as artificial, indeed non-existent. Ifeanyi Menkiti captures this idea:

[T]he looseness or ambiguity regarding what constitutes the domain of the physical, and what the domain of the mental, does not necessarily stem from a kind of an ingrown limitation of the village mind, a crudeness or ignorance, unschooled, regarding the necessity of properly differentiating things, one from the other, but is rather an attitude that is well considered given the ambiguous nature of the physical universe, especially that part of it which is the domain of sentient biological organisms, within which include persons described as constituted by their bodies, their minds, and whatever else the post-Cartesian elucidators believe persons are made of or can ultimately be reduced to. My view on the matter is that the looseness or ambiguity in question is not necessarily a sign of indifference to applicable distinctions demanded by an epistemology, but is itself an epistemic stance, namely: do not make distinctions when the situation does not call for the distinctions that you make. (Menkiti, 2004b, pp. 124-125)

This outlook filters down into the African socio-ethical space where, for the most part, achieving the common good or attaining one’s humanity generally involves communal living or a deep form of mutual coexistence, one that has a metaphysical backing. It is no wonder, then, that Africa is known for, and has provided the world with, a series of philosophies that reflect harmonious coexistence, from Ubuntu (Ramose, 1999; Metz, 2017) to Ukama (Murove, 2007), Ibuanyidanda philosophy (Asouzu, 2004; Asouzu, 2007), Harmonious monism (Ijiomah, 2014), and Integrative humanism (Ozumba & Chimakonam, 2014); the list goes on.

What this slight but important detour seeks to show is that within the traditional African metaphysical space, most thinkers are inclined to believe that spiritual entities are, indeed, existent realities. It is also speculated that these spiritual realities can and do relate with other aspects of reality and that spiritual realities are not removed from our everyday reality – at least not in the way Descartes divided mind from matter – but are an important part of our understanding of reality as a whole – even more so than Spinoza’s parallelism. These ideas should be kept in mind as they help guide any exploration of African views of the meaning of life.

a. The African God-Purpose Theory of Meaning

Several answers can be given to the question of what constitutes African conceptions of the meaning of life. The first is the African God-purpose theory. Although the God-purpose theory is neither a new view (scholars from other philosophical traditions have written about it; see Metz, 2007; Metz, 2013; Mulgan, 2015; Poettcker, 2015; Metz, 2019) nor a uniquely African one, the arguments it contains possess salient features that are African.

For some philosophers, a belief in the existence of God is considered unnecessary when talking about the God-purpose theory. This is so, it is argued, because what is at issue is not whether God exists but rather what conditions are necessary for a God-purpose theory to subsist as a viable theory of meaning (Metz, 2013, p. 80). While this argument is appreciated, its logic is hard to accept, for if we presumed that the idea or existence of a God was inconceivable, we would be forced to admit that a theory of meaning based on an inconceivable God is itself not conceivable; indeed, one can imagine it to be nothing more than wishful thinking. If, for instance, logical arguments were made to show that capturing something as inconceivable as a three-winged leopard would grant one meaning, it would be odd to take such a theory as a plausible theory of meaning, since three-winged leopards do not exist. The same argument can be made with regard to the God-purpose view. One must allow for some rational belief in a conceivable God before one can make claims about a God-purpose theory of meaning. For most traditional Africans, this is precisely the case. The African God-purpose theory begins with an all-pervading belief in God or the Supreme Being (the two terms will be used interchangeably). This belief features in the everyday life of the traditional African, as Pantaleon Iroegbu makes clear:

So far, nobody to our knowledge, has disputed the claim that in African traditional societies, there were no atheists. The existence of God is not taught to children, the saying goes. This means that the existence of God is not learnt, for it is innate and obvious to all. God is ubiquitously involved in the life and practices of the people. (Iroegbu, 1995, p. 359)

This belief is not far-fetched, and the ideas that govern it are plausible enough to grant the African God the mantle of conceivability. The reason is simple: what immediately stands out in African philosophical literature is that, for most traditional Africans, nothingness is impossible. What is suggested instead is the idea of being-alone (as the African metaphysical equivalent of nothingness), with being-with-others as the full expression of reality (one immediately sees the communal metaphysics at play here). The African rejection of nothingness in favour of being-alone comes from the African understanding of God as necessarily eternal (at least regressively speaking) and as the progenitor of the universe. Thus, the term being-alone not only encapsulates a necessarily eternal God, but also underscores a God that necessarily existed without the universe.

However, being-alone also implies an unattractive mode of living, which does not tally with the more attractive communal ontology and mode of living. For this reason, one can plausibly speculate that the existence of the universe presupposes a supreme rejection of Its being-alone (the pronoun “It” is used here to denote a genderless God) in exchange for a more communal relationship with the other (the universe, understood as encapsulating all other existent realities), one that legitimises Its existence. And so, the first overarching purpose of the universe is encountered: the legitimisation of God’s existence via a communal relationship with the universe (that is, created reality). Since God, the source from which the universe and other realities presumably sprang, existed prior to other forms of existence, being-alone must have been a reality at some point. In the African view, the Cartesian cogito, acknowledging one’s existence, is not enough. Existence ought to be expressed via a relationship with another. It is in this way that other realities, which emerge from God, legitimise God’s existence as a being-with-others.

With this in mind, deciphering God’s purpose for man, and how that purpose translates into meaningfulness, becomes a much easier affair. If the ultimate goal is to sustain the harmony that preserves the universe and, in turn, legitimises the existence of God, then living a meaningful life would involve living a life that ensures harmony. Perhaps this is another reason why complementarity is widespread in most communities in traditional Africa. As far as living a meaningful life by doing God’s will is concerned, traditional African thinkers would agree that two methods stand out: fulfilling one’s destiny and obeying the divine law.

With regard to the destiny view, what is immediately clear is that the Supreme Being is responsible for the creation of destiny, as Segun Gbadegesin tells us. Whether such a destiny is chosen by the individual or imposed on the individual is unclear, but what is important is that it emanates from God. It must be emphasised that destiny, as understood here, should be distinguished from what may be termed “fate(fulness)”. To say that one receives one’s destiny from God is not to imply that the individual’s life follows such a hard (pre)deterministic path that whatever role one plays is devoid of free will or of the ability to control the trajectory of one’s life. One can still choose to pursue a certain destiny, choose to alter a given destiny, or choose not to pursue one’s destiny at all. Destiny is, then, thought of as an end that is specific to each individual and that the individual can choose to pursue or not.

Normative progression suggests that one attains personhood over time as one continues to gain moral experience. In this way, the older and more morally experienced an individual is, the closer the individual is to becoming a moral genius and a person. It would be quite plausible to assume (even though there is no consensus on the matter) that destinies are handed to the individual by God, since it is hard to imagine, given the African view of the normative progression of personhood, that an ‘it’, or even a yet-to-be-developed human person, possesses the raw rational capacity to choose something as complex as its destiny. Gbadegesin reminds us that good destinies exist just as bad destinies do, and also that destinies are alterable, since one can choose to ignore a bad destiny and do good instead (Gbadegesin, 2004, p. 316). However, one can argue that ignoring a bad destiny to do good is no different from ignoring a good destiny to do bad things. In both cases, what is being discussed is not the alteration of one’s destiny but simply its neglect. One can go as far as to assume that by ignoring one’s assigned destiny in such a manner, one expresses an inability to understand how one’s destiny ties into God’s overarching purpose and/or a willingness to live a meaningless life. Thus, the pursuit of bad destinies (as assigned by God) can also lead to meaningfulness, much as the Christian gospel of salvation is predicated on the betrayal of Jesus by Judas Iscariot. Hence, within the African God-purpose view, meaningfulness readily involves the pursuit and/or fulfilment of one’s God-assigned destiny. Such a life would be meaningful because the pursuit or fulfilment of one’s destiny would be considered a source of great admiration and esteem, both by the individual who has done the fulfilling and by the members of his/her society who understand that s/he has fulfilled his/her destiny. Conversely, life would be meaningless if one failed to pursue one’s destiny.

Another way in which the God-purpose theory can be thought of as conferring meaning is through divine laws. Divine laws are made known to the individual via different conduits that serve as representatives or messengers of the Supreme Being, usually lesser gods, spirits, ancestors, or priests (Idowu, 2005, pp. 186-187). The content of these laws varies from culture to culture, but the general idea is that one must avoid certain taboos or acts that introduce discord into the community, and that one must engage in certain rites, customs, or rituals to flourish as an individual and obtain meaning. Indeed, as Mbiti reminds us, failing to adhere to divine law not only ensures meaninglessness; it also undermines the grand purpose of sustaining the harmony that binds the universe to God. This is why acts of reparation, commensurate with the offence, are often advised once such discord is noticed.

It can be immediately noticed that the African God-purpose theory bears on both aspects of meaningfulness, that is, meaning in life and the meaning of life. In the first instance, insofar as the individual performs those actions that are directly tied to his/her destiny, those acts constitute for him/her moments of meaningfulness. With regard to the latter, the narrative that ties the individual’s actions together and gives them their coherence is his/her destiny, or at least the pursuit of it. It is this narrative that allows one to sit back and adjudge a whole life as meaningful or meaningless.

While the African God-purpose theory of meaning offers an interesting approach to the question of meaning, two major criticisms it is bound to face are the instrumentality inherent in God’s purpose and the narrowness of the view. These criticisms can be levelled against most God-purpose theories, especially those of the extreme kind. By locating meaning in what God wants the individual to do, one inadvertently admits that the individual plays only a functional role in the grand scheme of things. The imposition of God’s will through destiny and/or divine law disregards individual autonomy (whether or not one has the free will to choose), since meaning (especially in extreme versions of the God-purpose theory) resides only in doing God’s will. The second criticism is that the African God-purpose theory fails to capture those instances of meaning that spring neither from one’s destiny nor from divine law. Thus, the individual who strives to become a musical virtuoso would fail to achieve meaningfulness if that achievement or pursuit does not tally with his assigned destiny. Yet it can be intuited that such a pursuit counts as a moment of meaningfulness.

b. The Vital Force Theory of Meaning

The second theory of meaning that can be gleaned from African philosophical literature is the vital force theory of meaning. To understand what this theory entails, it is important to first understand what is meant by “vital force”. Vital force or life force can be described as some sort of ethereal/spiritual force emanating from God and present in all created realities. Wilfred Lajul, in explaining Maduabuchi Dukor’s views, expresses these claims quite succinctly:

Africans believe that behind every human being or object there is a vital power or soul (1989: 369). Africans personify nature because they believe that there is a spiritual force residing in every object of nature. (2017, p. 28)

This is why African religious practices, feasts, and ceremonies cannot in any way be equated with magical and idolatrous practices or fetishism. Within the hierarchy of being, the vital force expresses itself in different ways: in humans and ancestors it possesses an animating and rational character, unlike the vital force in plants (which are supposedly inanimate and without rationality) and in animals (which possess animation without the sort of rationality found in humans). Indeed, Deogratia Bikopo and Louis-Jacques van Bogaert opine that:

All beings are endowed with varying levels of energy. The highest levels characterise the Supreme Being (God), the ‘Strong One’; the muntu (person, intelligent being), participates in God’s force, and so do the non-human animals but to a lesser degree…Life has its origin in Ashé, power, the creative source of all that is. This power gives vitality to life and dynamism to being. Ashé is the creative word, the logos; it is: ‘A rational and spiritual principle that confers identity and destiny to humans.’…What subsists after death is the ‘self’ that was hidden behind the body during life. The process of dying is not static; it goes through progressive stages of energy loss. To be dead means to have a diminished life because of a reduced level of energy. When the level of energy falls to zero, one is completely dead. (Bikopo & van Bogaert, 2010, pp. 44-45)

If one is to take the idea of a vital force seriously, then it must be admitted that the vital force forms an important part of the individual and that it can be either diminished or augmented in several ways. Illness, suffering, depression, fatigue, disappointment, injustice, failure, or any other negative occurrence can be seen as contributing to the diminution of vital force. Conversely, good health, certain rituals, justice, happiness, engaging positively with others, and so forth contribute to the augmentation and fortification of vital force. These ideas lead us to vitalism as a theory of meaning.

In considering what a vital force theory of meaning might involve, it should be kept in mind that great importance is placed on augmenting one’s vital force rather than diminishing it. Since this is of paramount importance, the individual must continually fortify her vital force. This is done by engaging in certain rituals and prayers and by immersing oneself in morally uplifting acts and positive, harmonious relations with one’s community and environment. Thus, meaningfulness is obtained by the continuous augmentation of one’s vital force and/or that of others via the processes outlined above. Indeed, the much-criticised Tempels alludes to this when he states that ‘Supreme happiness, the only kind of blessing, is, to the Bantu, to possess the greatest vital force: the worst misfortune and, in very truth, the only misfortune, is, he thinks, the diminution of this power’ (Tempels, 1959, p. 22). On the other hand, meaninglessness, and indeed death, would involve the inability to augment one’s life force and/or actively engaging in acts that diminish one’s vital force or that of others. This theory of meaning focuses on a transcendental goal whose mode of achievement usually involves acts that garner much esteem and admiration. Thus, by enhancing her vital force, the individual engages in something that is inherently meaningful and valuable.

Beyond this traditional view of vitalism, some scholars of African philosophy have also put forward a more naturalistic account of meaning that avoids the problems (mainly of proof) associated with theories dealing with spiritual entities (see Dzobo, 1992; Kasenene, 1994; Mkhize, 2008; Metz, 2012). Within this naturalistic understanding, vital force is understood as wellbeing and creative power rather than as the spiritual force of Tempels’ Bantu ontology. Meaningfulness would then involve engaging in those acts that constantly improve one’s wellbeing and exercising one’s creative power freely.

Some criticisms can be levelled against the vital force theory. The first, and more obvious, is the denial of the existence of spiritual essences within the human body, especially since the brain and the nervous system are thought to be responsible for animating the human body and for the cognitive abilities of a human person (see Chimakonam et al., 2019). The second criticism focuses on the naturalist account and argues that ideas about wellbeing and creative power need not bear the moniker of vitalism to make sense. Indeed, one can regard the pursuit of wellbeing or the expression of creative genius as separate paths to meaningfulness that need not be seen as vitalist.

c. The Communal Normative Theory of Meaning

The third African theory of meaning has been termed “the communal normative function theory” (Attoe, 2020). This theory is based on one of the most widespread views in African philosophy: communalism. Communalism has been discussed in various guises by African philosophers such as Mbiti, Khoza, Mogobe Ramose, Menkiti, Asouzu, Murove, Ozumba & Chimakonam, Metz, and others, with reference to several branches of African philosophy, ranging from African metaphysics, African logic, and African ethics to African socio-political philosophy. An understanding of communalism is necessary to see how this view speaks to conceptions of meaning.

Communalism is founded on a metaphysics that understands various realities as missing links of an interconnected, complementary whole (Asouzu, 2004). This ontology then flows down to human communities and social relationships, where the attainment of the common good and of personhood is invariably tied to how well one expresses oneself as that missing link. Within this framework, interconnectedness, as encapsulated in ideas such as harmony, solidarity, inclusivity, welfarism, and familyhood, plays a prominent role. This is why sayings like Mbiti’s famous dictum ‘I am because we are and since we are, therefore I am’ (Mbiti, 1990, p. 106), the Ubuntu mantra “…A person is a person through other persons…”, or expressions like “…one finger cannot pick up a grain…” (Khoza, 1994, p. 3) are commonplace in explanations of communalism. Scholars like Menkiti have therefore gone on to tie individual personhood to how well the individual lives communally and engages with the community.

From this understanding of communalism, a theory of meaning emerges in which meaning involves engaging harmoniously with others. By engaging positively with others, the individual seeks to acquire humanity in its most potent form, and it is by acquiring and enhancing this humanity or personhood that the individual also acquires meaning. By engaging harmoniously with others, the individual sheds petty animal desires, especially those that spring from selfishness, and instead focuses on moral/normative goals that transcend the individual and centre on communal flourishing. Thus, within this framework, the lives of individuals such as Nelson Mandela or Mother Teresa would count as meaningful because of their constant striving to ensure harmony and uplift the lives of others. While meaningfulness is gained by performing one’s communal normative function, meaninglessness would consist in either not engaging positively with others or performing acts that cause disharmony or discord, which, in turn, leads to the loss of one’s humanity.

While it is an attractive and plausible theory of meaningfulness from the African space, the major shortcoming of the communal normative function theory is that it does not accommodate meaningful acts that are not designed for, or may not contribute to, communal upliftment. Thus, if our music enthusiast were to pursue virtuoso status without seeking to engage with others through her music, that achievement would not count as a meaningful act.

d. The Love Theory of Meaning

In an earlier paper titled “On Pursuit of the Purpose of Life: The Shona Metaphysical Perspective”, Mawere understands love (which, according to him, is similar to the Greek concept of agape) as the “unconditional affection to do and promote goodness for oneself and others, even to strangers” (Mawere, 2010, p. 280).

A few things can be noted from the above. The first is that love is an emotion from which the desire to do good emanates. As an emotion, love can plausibly be regarded as available to all human beings, in the same way that anger and happiness are emotions, and rationality a capacity, available to every human being. This point might seem to be countered by the various heinous acts that many human beings have perpetrated throughout history; genocides of all kinds portray a hateful instinct rather than a loving one. One response to this objection is that love is not the only emotion that human beings are born with, hence the expression of other, unpleasant emotions. A second response is that love is a capacity that must be nurtured. Mawere points to this fact:

However, one may wonder why some human beings do not love if love is a natural gift and the sole purpose of life. It is the contention of this paper that the virtuous quality of love though natural is nurtured by free will. (Mawere, 2010, p. 281)

Mawere is vague with regard to what he means by “free will”, and one can only speculate. However, the preferable route in describing how love/agape is nurtured would be to think of it in terms of the deliberate cultivation and/or expression of love. When one actively seeks to promote goodness for oneself and others, one is nurturing a habit that takes advantage of our presumably innate capacity to love. By ridding oneself of the obstacles to unconditional love, such as self-interest, nepotistic attitudes, and unforgiveness, the individual begins to express love in the way Mawere envisions, viz. as “unconditional affection to do and promote goodness for oneself and others, even to strangers” (Mawere, 2010, p. 280).

It is from this framework that Yolanda Mlungwana (2020) draws her notion of love. Mlungwana tells us that Mawere’s theory of Rudo (love) differs from Susan Wolf’s version of the love theory. According to her, “Insofar as people are the only objects of love for Mawere, his sense of ‘love’ differs from Susan Wolf’s influential account, according to which it is logically possible to love certain activities, things or ideals”. So, while the love theory of meaning is, for Mawere, people-centred or anthropocentric, Wolf’s love view is much more encompassing and may feature a variety of objects that are not human. One can show love to the environment by advocating for, and working towards, a greener planet. One could also love abandoned animals or an endangered species. Of course, one may wonder here about the narrowness of the traditional African love theory of meaning, and this is a valid critique. The point here, however, is that the scope of the African love view encompasses only human beings.

African love theorists agree that love is the purpose of existence, indeed the sole purpose of human existence. This might seem a strange claim to make, since one can think of certain acts that are prima facie meaningful (say, becoming a musical virtuoso) without necessarily being acts of love. One must, however, first understand what the claim means before drawing any conclusions. According to Mawere, love permeates all aspects of human relations with others and with society:

The Shona consider Agape as the basis of all good relations in society, and therefore as the purpose of everyone’s life. In fact, for the Shonas, all other duties of man on earth such as reproducing, sharing, promoting peace, respecting others (including the ancestors and God), among others have their roots in love. Had it not been love which is the basis of all relationships, it was impossible to promote peace, respect others. In fact, meaningful life on earth would have been impossible. (Mawere, 2010, p. 280)

This point immediately demonstrates that the idea of love is not as one-dimensional as one might think, that is, a matter of simply doing good to others and to oneself. In our everyday lives and practices, insofar as we relate with others in some way, such a relation can be rooted in love. Love, in this sense, is not thought of solely in terms of a direct display of altruistic behaviour towards a person, community, or thing; it is also manifested in many different activities, such as judgment and reparation, business dealings, governance/leadership, teaching/learning, sportsmanship, self-improvement, and so forth. In this way, our musician, who aims to become a virtuoso (whether or not he plays for an audience), does so because he wishes to improve himself, which is a manifestation of self-love.

When human beings fail to nurture love and begin to manifest hate, problems arise. Mlungwana alludes to this point when she states, “In the absence of love, which is the foundation of all relationships, there is no encouragement of peace, respect, etc.” Since love is the purpose of human existence (for thinkers like Mawere), an existence marked by an absence of love is simply meaningless. This meaninglessness further degenerates into anti-meaning when the individual not only fails to show love but actively pursues hate.

It is hard to fault the love view, but one problem stands out: if one’s attempt at love causes harm to some other person, could such an act be judged as meaningful? For instance, suppose a person trains to become a brilliant Special Forces soldier (self-love) to serve his/her country (love towards his/her society). Let us further suppose that, in service to his/her country, this individual is responsible for the death and destruction of other communities. While his/her dedicated service to his/her country can be seen as an act of love, the destruction of lives and communities constitutes an act of hate (at least from the viewpoint of the communities he/she has negatively affected). The problem is compounded by the fact that, for Mawere, this love must be unconditional. One response would be that meaningfulness is not as objective a value as one might like to think. If meaningfulness is, at least in part, subjective, and love/hate is context-dependent, then the individual’s life is both meaningful and meaningless, depending on the context involved. While this conclusion might seem problematic within a two-valued logical system, it can be accommodated by the trivalent logical systems proposed in African philosophy, such as Chimakonam’s (2019) Ezumezu logic.

e. The Yoruba Cluster Theory of Meaning

Another theory of meaning emanating from African philosophy is what may be described as the “Yoruba Cluster View” (YCV). It is so called because the cultural values which cluster together to form this view emanate from the dominant views found in traditional Yoruba thought. This view was first systematically articulated by Oladele Balogun (2020) and, to some extent, by Benjamin Olujohungbe (2020).

The Yoruba conception of meaningfulness is anchored in what Balogun refers to as holism. This holism represents a theory of meaningfulness that is not based on a single, isolated path to meaningfulness (call it a monism) but on a conglomeration of different, complementary, “interwoven and harmonious accounts of a meaningful life considered as a whole and not in isolation” (Balogun, 2020, p. 171). Metz made a similar move when defining the concept of “meaning”, but Balogun’s pluralism (or holism, as he prefers) differs from Metz’s in two main ways. First, it is a pluralism about the paths to a meaningful life rather than about the concept of meaning itself. Second, these paths are, for Balogun, necessarily dependent on each other; as he puts it, “isolating one condition from the other alters the constitutive whole” (Balogun, 2020, p. 171).

These interwoven paths to meaningfulness are drawn from a series of normative values referred to as “life goods”. These life goods encompass normative values that reflect spiritual, social, ethical and epistemological experiences. According to Balogun:

The term “life goods” refers to material comfort symbolised with monetary possession, a long healthy life, children, a peaceful spouse and victory over the vicissitudes of existence. The fulfilment of such “life goods” at any stage of human existence is accompanied by the remarks “X has lived a meaningful life” or “X is living a meaningful life”, where X is a relational agent in a social network. The “life goods”, though materialistic and humanistic, are factual goals providing reasons for how the Yorùbá ought to act in daily life. To the extent that such “life goods” ground and guide human actions, and humans are urged to strive towards them in deeds and acts; they are normative prescriptions in the Yorùbá cultural milieu. (Balogun, 2020, p. 171)

These life goods are positive values, and in desiring to acquire this cluster of values, the individual ipso facto desires meaningfulness. This means that acquiring meaningfulness involves a subjective pursuit of seemingly objective normative values. However, acquiring only one of these values would not, according to the view, translate into living a meaningful life, since the values are thought of as means to an end (meaningfulness) rather than as ends in themselves, and since it is the acquisition of all the values in the cluster that gives life meaning.

Drawing on William Bascom, Balogun identifies the following cluster of values, ranked in order of importance: long life or “not death” (aiku), money (aje, owo), marriage or wives (aya, iyawo), children (omo), and victory (isegun) over life’s vicissitudes. These values can serve as a yardstick by which one can measure whether his/her life is meaningful. Furthermore, the judgment about whether a life is meaningful is not merely tied to subjective valuing; it is also subject to external valuing. In this way, even when one is dead, that individual’s life can still be adjudged meaningful by those external to the individual (that is, other living persons). What this means is that the view that death in some way undercuts meaningfulness does not hold for friends of the Yoruba cluster view. This is because death is merely a transition to more life, either as an ancestor or as another person via reincarnation, and because even after death, individuals external to the dead individual can still make judgments about the meaning of his/her life.

The firm belief in life after death also allows ancestors to seek meaningfulness themselves, since they are very much alive. It seems safe to assume that the cluster view does not apply in this particular instance, since aje, aya/iyawo and omo are not achievable goals for ancestors. However, ancestors are expected to intervene in the lives of the living by “[protecting] the clan and sanctioning of moral norms among the living” (Balogun, 2020, p. 172).

The cluster view fully expresses itself as a theory of meaning when one realises that the values that make up the cluster intertwine with, and complement, each other. At the base is aiku, which undergirds any claim to meaningfulness. This is because a short-lived life is essentially deprived of the opportunity to pursue those means that allow for a meaningful life. Menkiti’s suggestion that children do not yet possess personhood is instructive here. So, taking good care of one’s health and living a long life provides the individual with the time to achieve the other values that are believed to constitute a meaningful life. Aje/owo offers the sort of material comfort that enables the individual to lead a comfortable life and to take care of others. It is by possessing aje that the individual acquires the financial capacity to marry a wife/wives and take care of children. Without an iyawo, in turn, children are not possible, unless one decides to bear children outside wedlock (which is frowned upon). As Balogun puts it, “A life without a marital relationship and children is culturally held among the Yorùbá as meaningless. Given the pro-communitarian attitude of the Yorùbá, procreation within a network of peaceful spousal relationships is considered necessary for expanding the family lineage and clan.” All this, combined with isegun, comes together to form a life that can be regarded as meaningful.

Balogun goes further, augmenting these cluster values with morality. For the Yoruba, according to Balogun, “a meaningful life is a moral life. Within the Yorùbá cultural milieu, there is no clear demarcation between living a meaningful life and a moral life, for both are associated with each other” (Balogun, 2020, p. 173). Olujohungbe points to this fact when he concludes that a life filled with quality (one can say, a life that has achieved the cluster values that Balogun alludes to) must also be a morally good life:

A virtuous character thus trumps all other values such as long life, health, wealth, children and those other attributes making up the purported elements of well-being. In this connection, a distinction is thus made between quantity and quality of life. For a vicious person who dies at a “ripe” old age leaving behind children, wealth and other material resources, society often (though clandestinely) says akutunku e l’ona orun – which literally means “may you die severally on your way to heaven” and actually implies good riddance to bad rubbish. (Olujohungbe, 2020, p. 225)

So, when one directs the cluster values towards positive engagement with others, one is living a meaningful life. Thus, when one sets out to alleviate the poverty of others with the aje/wealth that s/he has acquired, s/he is living a meaningful life. If one procreates and guides one’s children into becoming good members of society, one is living a meaningful life. The same applies to all the other cluster values, used in tandem with one another.

f. Personhood and a Meaningful Life

Flowing from the views of scholars like Ifeanyi Menkiti and Kwame Gyekye, and systematised into a theory of meaningfulness by Motsamai Molefe, is the idea that attaining personhood leads to a meaningful life. Personhood, in African philosophical thought, is tied to more than mere existence. In other words, merely being a human being who exists is not a sufficient condition for personhood. One must exhibit personhood in one’s relations with others to be called a person.

There has been some debate in African philosophical thought, mainly between Menkiti and Gyekye, about the status of babies and young children with regard to personhood. Menkiti takes the more radical stand that children possess no personhood, and so are not yet persons. Gyekye, on the other hand, supposes that children are born with some level of personhood, and that this personhood, being in its nascent form, can be augmented by one’s level of normative function. What they both agree on, however, is that personhood is achievable and that one can strive to attain the highest form of personhood through positive relationships with the people in one’s community and with one’s culture.

Based on this general framework, Motsamai Molefe provides a systematic account of meaning grounded in the African idea of personhood. For him, meaningfulness begins when the individual develops those capacities and virtues that allow the individual to become the best of its kind. Life becomes meaningful with the acquisition of these virtues and “the conversion of these raw capacities to be bearers of moral excellence” (Molefe, 2020, p. 202).

Because these virtues are bearers of moral excellence, Molefe further informs us that a meaningful life would, according to this theory, be construed in terms of the agent achieving the end of moral perfection or excellence. Moral excellence is not automatic; as Menkiti observes, unlike other types of genius, moral geniuses (those who have acquired moral excellence) attain that status only after a long period of time. Indeed, the passage of time serves to enhance one’s moral experience.

Molefe also ties his idea of personhood and theory of meaning to dignity. Echoing Gyekye’s ideas, Molefe asserts that every individual has the capacity to be virtuous and that every individual ought to build that capacity to a reasonable level. In his words:

Remember, on the ethics of personhood, we have status or intrinsic dignity because we possess the capacity for moral virtue. The agent’s development of the capacity for virtue translates to moral perfection, which we can also think of in terms of a dignified existence. This kind of dignity is the one that we achieve relative to our efforts to attain moral perfection – achievement dignity. As such, a meaningful life is a function of a dignified human existence qua the development of the distinctive human capacity for virtue. I also emphasise that the agent is not required to live the best possible human life. The requirement is that the agent ought to reach satisfactory levels of moral excellence for her life to count as meaningful. (2020, p. 202)

The requirement to have satisfactory levels of moral excellence does not preclude the individual from aiming to live the best possible life; rather, it stands as a bare minimum for one’s life to be considered meaningful. This allows us to think about the meaningfulness of life in terms of degrees. If I live a meaningful life by sufficiently exhibiting some moral virtues, my life would be meaningful, but not to the degree that one may consider Nelson Mandela’s life to be meaningful.

g. The Conversational Theory

According to Chimakonam (2021), Conversationalism is a theory of meaning-making that strives to improve two main significists, the nwa-nsa and the nwa-nju, through a process of intellectual/creative struggle (a process that conversationalists call “arumaristics”), anchored by the construction, deconstruction and reconstruction of seemingly contrary viewpoints. While Conversationalism is focused on conceptual forms of meaning-making, its implications for life are also apparent.

Meaning-making is a matter of conversations within oneself and between oneself and the objective values of the various contexts that one encounters in life. Within oneself, meaning lies in self-improvement, achieved through the interrogation of one’s okwu (values, viewpoints, prejudices, and so forth), as Chimakonam (2019) calls it. It is not just that this okwu, which forms the content of the individual’s life, is improved; the ability to ask new questions in a life-long dialogue is also improved. By questioning himself/herself and finding answers to those questions, the individual improves his/her okwu, reaching a higher level of sophistication each time. This positive augmentation of one’s okwu is precisely what makes life meaningful for the conversationalist, at least from a subjective point of view.

But the individual also exists within a community, and, for the conversationalists, the ideas often called objective are mainly the intellectual contributions of subjective individuals who belong to a particular communal context. What, then, counts as objective meaning? Objective meaning, in Conversationalism, would involve the individual’s ability either to imbibe (as the nwa-nsa) or to interrogate (as the nwa-nju) the ideas, actions or values that a communal context considers worthwhile. By imbibing those values or performing those actions that one’s communal context considers valuable, the individual pursues ends that are worthy for their own sake, merits esteem and admiration, and transcends his/her animal nature: s/he identifies with ends that are beyond him/her. By assuming the role of nwa-nju (or questioner), s/he makes his/her life meaningful by contributing to the improvement of the very values on which this communal context relies as purveyors of meaningfulness. In this way, the individual’s life becomes meaningful as s/he becomes a meaning-maker or meaning-curator.

3. Conclusion

What has been presented above are seven plausible theories of life’s meaning that can be gleaned from traditional African philosophical thought. While these seven accounts may not exhaust the possible theories of meaning that can be drawn from the African worldview, they are a good start that invites contributions and critical engagement from philosophers interested in this subject matter. How attractive are these theories of meaningfulness? Are there contemporary African alternatives to the more traditional views of meaningfulness? Are there more pessimistic accounts within the corpus of African thought that embrace a more nihilistic approach to meaningfulness?

4. References and Further Reading

- Agada, A., 2020. The African vital force theory of meaning in life. South African Journal of Philosophy, 39(2), pp. 100-112.

- The article articulates and discusses the African vital force theory.

- Asouzu, I., 2004. Methods and Principles of Complementary Reflection in and Beyond African Philosophy. Calabar: University of Calabar Press.

- In this book, Innocent Asouzu develops his idea of complementary reflection as a full-fledged philosophical system.

- Asouzu, I., 2007. Ibuanyidanda: New Complementary Ontology Beyond World Immanentism, Ethnocentric Reduction and Impositions. Zurich: LIT VERLAG GmbH.

- This book exposes some of the problems in Aristotelian metaphysics and builds a new complementary ontology.

- Attoe, A., 2020. Guest Editor’s Introduction: African Perspectives to the Question of Life’s Meaning. South African Journal of Philosophy, 39(2), pp. 93-99.

- This article offers an introductory overview of the discussions about life’s meaning from an African perspective.

- Attoe, A., 2020. A Systematic Account of African Conceptions of the Meaning of/in Life. South African Journal of Philosophy, 39(2), pp. 127-139.

- This article curates, from available clues, three African conceptions of meaning, namely the African God’s purpose theory, the vital force theory and the communal normative function theory.

- Attoe, A. & Chimakonam, J., 2020. The Covid-19 Pandemic and Meaning in Life. Phronimon, 21, pp. 1-12.

- This article considers the impact of the COVID-19 pandemic on the question of life’s meaning.

- Balogun, O., 2007. The Concepts of Ori and Human Destiny in Traditional Yoruba Thought: A Soft-Deterministic Interpretation. Nordic Journal of African Studies, 16(1), pp. 116-130.

- This article looks at the concept of destiny in traditional Yoruba thought.

- Balogun, O., 2020. The Traditional Yoruba Conception of a Meaningful Life. South African Journal of Philosophy, 39(2), pp. 166-178.

- This article examines the account of what makes life meaningful in traditional Yoruba thought.

- Bikopo, D. & van Bogaert, L.-J., 2010. Reflection on Euthanasia: Western and African Ntomba Perspectives on the Death of a King. Developing World Bioethics, 10(1), pp. 42-48.

- The focus of this article is the Ntomba belief about vitality and ritual euthanasia and its implications for the idea of euthanasia in African thought.

- Chimakonam, J., Uti, E., Segun, S. & Attoe, A., 2019. New Conversations on the Problems of Identity, Consciousness and Mind. Cham: Springer Nature.

- This book attempts to provide answers to age-old problems such as identity, the mind-body problem, qualia, and so forth.

- Chimakonam, J., 2019. Ezumezu: A System of Logic for African Philosophy and Studies. Cham: Springer.

- This book is a novel attempt at curating and systematising African logic.

- Chimakonam, J., 2021. On the System of Conversational Thinking: An Overview. Arumaruka: Journal of Conversational Thinking, 1(1), pp. 1-46.

- This article presents a survey of the concept of conversationalism.

- Descartes, R., 1641. Meditations on First Philosophy. Cambridge: Cambridge University Press (1996).

- This famous book discusses issues like the existence of God, the existence of the soul/self, and so forth.

- Dzobo, N., 1992. Values in a Changing Society: Man, Ancestors and God. In: K. Wiredu & K. Gyekye, eds. Person and Community: Ghanaian Philosophical Studies. Washington D.C.: The Council for Research in Values and Philosophy, pp. 223-240.

- In this chapter, Noah Dzobo discusses some African values, the sanctity/value of life, ancestorhood and the idea of vital force.

- Gbadegesin, S., 2004. Towards A Theory of Destiny. In: K. Wiredu, ed. A Companion to African Philosophy. Oxford: Blackwell Publishing, pp. 313-323.

- This article provides an in-depth discussion of the idea of destiny in African (Yoruba) thought.

- Gyekye, K., 1992. Person and Community in Akan Thought. In: K. Wiredu & K. Gyekye, eds. Person and Community. Washington D.C.: The Council for Research in Values and Philosophy, pp. 101-122.

- Gyekye, in this chapter, challenges Ifeanyi Menkiti’s radical communitarianism and discusses the idea of moderate communitarianism from the Akan perspective.

- Idowu, W., 2005. Law, Morality and the African Cultural Heritage: The Jurisprudential Significance of the Ogboni Institution. Nordic Journal of African Studies, 14(2), pp. 175-192.

- This paper examines the nature of the concepts of law and morality from the Yoruba (Ogboni group) perspective.

- Ijiomah, C., 2014. Harmonious Monism: A Philosophical Logic of Explanation for Ontological Issues in Supernaturalism in African Thought. Calabar: Jochrisam Publishers.

- This book provides the first real look into the logic and ontology of harmonious monism.

- Iroegbu, P., 1995. Metaphysics: The Kpim of Philosophy. Owerri: International University Press.

- This book provides an overview of metaphysics, and introduces its own novel uwa ontology, which is based on the African view.

- Khoza, R., 1994. Ubuntu, African Humanism. Diepkloof: Ekhaya Promotions.

- This book provides a critical exposition of the Southern African notion of Ubuntu.

- Lajul, W., 2017. African Metaphysics: Traditional and Modern Discussions. In: I. Ukpokolo, ed. Themes, Issues and Problems in African Philosophy. Cham: Palgrave Macmillan, pp. 19-48.

- This chapter provides a brief survey of some of the issues discussed in African Metaphysics.

- Mawere, M., 2010. On Pursuit of the Purpose of Life: The Shona Metaphysical Perspective. The Journal of Pan African Studies, 3(6), pp. 269-284.

- This article seeks to establish the idea of “love” as the purpose of human existence.

- Mbiti, J., 1990. African Religion and Philosophy. London: Heinemann.

- This famous book provides an overview of some of the religious and philosophical beliefs of some societies in Africa.

- Mbiti, J., 2012. Concepts of God in Africa. Nairobi: Acton Press.

- This book provides an overview of the various ideas about God in some African societies.

- Mbiti, J., 2015. Introduction to African Religion. 2nd ed. Illinois: Waveland Press.

- This book introduces readers to traditional African religious philosophy.

- Menkiti, I., 2004a. On the Normative Conception of a Person. In: K. Wiredu, ed. A Companion to African Philosophy. Oxford: Blackwell Publishing.

- This chapter outlines Menkiti’s radical views about personhood in African thought.

- Menkiti, I., 2004b. Physical and Metaphysical Understanding: Nature, Agency, and Causation in African Traditional Thought. In: L. Brown, ed. African Philosophy: New and Traditional Perspectives. Oxford: Oxford University Press, pp. 107-135.

- This article focuses on the idea of causation in African metaphysics.

- Metz, T., 2007. God’s Purpose as Irrelevant to Life’s Meaning: Reply to Affolter. Religious Studies, Volume 43, pp. 457-464.

- In this article, Metz responds to Jacob Affolter’s claim that an extensionless God could ground/grant the type of purpose that makes life meaningful.

- Metz, T., 2012. African Conceptions of Human Dignity: Vitality and Community as the Ground of Human Rights. Human Rights Review, 13(1), pp. 19-37.

- In this article, Metz argues for a more naturalistic account of vitality, based on creativity and wellbeing, that could ground human dignity.

- Metz, T., 2013a. Meaning in Life. Oxford: Oxford University Press.

- In this book, Metz provides an analytic discussion of the question of meaning in life.

- Metz, T., 2017. Towards an African Moral Theory (Revised Edition). In: I. Ukpokolo, ed. Themes, Issues and Problems in African Philosophy. Cham: Palgrave Macmillan, pp. 97-119.

- In this article, Metz provides a slightly revised version of an earlier article (with the same name), outlining his account of an African moral theory.

- Metz, T., 2019. God, Soul and the Meaning of Life. Cambridge: Cambridge University Press.

- This short book provides an overview of supernaturalistic accounts of life’s meaning.

- Metz, T., 2020. African Theories of Meaning in Life: A Critical Assessment. South African Journal of Philosophy, 39(2), pp. 113-126.

- In this article, Metz discusses vitalist and communalistic accounts of meaning.

- Mlungwana, Yolanda, 2020. An African Approach to the Meaning of Life. South African Journal of Philosophy, 39(2), pp. 153-165.

- In this article, Yolanda Mlungwana provides an examination of some African accounts of meaning such as the life, love and destiny theories of meaning.

- Mlungwana, Yoliswa, 2020. An African Response to Absurdism. South African Journal of Philosophy, 39(2), pp. 140-152.

- In this article, Yoliswa Mlungwana revisits Albert Camus’ absurdism in the light of African religions and philosophy.

- Molefe, M., 2020. Personhood and a Meaningful Life in African Philosophy. South African Journal of Philosophy, 39(2), pp. 194-207.

- This article provides an account of meaning that is based on African views on personhood.

- Mulgan, T., 2015. Purpose in the Universe: The Moral and Metaphysical Case for Ananthropocentric Purposivism. Oxford: Oxford University Press.

- The book argues for a cosmic purpose, but one for which human beings are irrelevant.

- Murove, M., 2007. The Shona Ethic of Ukama with Reference to the Immortality of Values. The Mankind Quarterly, Volume XLVIII, pp. 179-189.

- This article examines the Shona relational ethics of Ukama.

- Nagel, T., 1979. Mortal Questions. Cambridge: Cambridge University Press.

- The book explores issues related to the question of life’s meaning, nature, and so forth.

- Nagel, T., 1987. What Does It All Mean? A Very Short Introduction to Philosophy. Oxford: Oxford University Press.

- This book discusses some of the central problems in Western philosophy.

- Nalwamba, K. & Buitendag, J., 2017. Vital Force as a Triangulated Concept of Nature and s(S)pirit. HTS Teologiese Studies/Theological Studies, 73(3), pp. 1-10.

- This article examines the concept of vitality in African thought.

- Okolie, C., 2020. Living as a Person until Death: An African Ethical Perspective on Meaning in Life. South African Journal of Philosophy, 39(2), pp. 208-218.

- This article discusses attaining personhood as a possible route to meaningfulness.

- Olujohungbe, B., 2020. Situational Ambivalence of the Meaning of Life in Yorùbá Thought. South African Journal of Philosophy, 39(2), pp. 219-227.

- This article provides an account of Yoruba conception of meaning.

- Ozumba, G. & Chimakonam, J., 2014. Njikoka Amaka: Further Discussions on the Philosophy of Integrative Humanism: A Contribution to African and Intercultural Philosophy. Calabar: 3rd Logic Option.

- This book introduces the idea of Njikoka Amaka or integrative humanism.

- Poettcker, J., 2015. Defending the Purpose Theory of Meaning in Life. Journal of Philosophy of Life, 5, pp. 180-207.

- The article provides an interesting defence of the purpose theory.

- Ramose, M., 1999. African Philosophy through Ubuntu. Harare: Mond Books.

- In this book, Mogobe Ramose provides a systematic account of Ubuntu and the ontology that undergirds it.

- Taylor, R., 1970. The Meaning of Life. In: R. Taylor, ed. Good and Evil: A New Direction. New York: Macmillan, pp. 319-334.

- This chapter is an honest discussion on the reality of meaninglessness in relation to the question of life’s meaning.

- Tempels, P., 1959. Bantu Philosophy. Paris: Présence Africaine.

- This book provides a Westerner’s account of African views about vitality.

Author Information

Aribiah David Attoe

Email: aribiahdavidattoe@gmail.com

University of Fort Hare

South Africa
