Mathematical Nominalism

Mathematical nominalism can be described as the view that mathematical entities—entities such as numbers, sets, functions, and groups—do not exist. However, stating the view requires some care. Though the opposing view (that mathematical objects do exist) may seem like a somewhat exotic metaphysical claim, it is usually motivated by the thought that mathematical objects are required to exist in order for mathematical claims to be true. If, for instance, it is true that there are infinitely many prime numbers, then prime numbers prima facie exist. Much contemporary work in mathematical nominalism divides into efforts to argue either that mathematical truths do not in fact require the existence of mathematical objects, or that we are entitled to regard mathematical claims, such as the one above, as false.

This article surveys contemporary attempts to defend mathematical nominalism. Firstly, it considers how to formulate mathematical nominalism, surveys the origins of the contemporary debate, and explains epistemic motivations for nominalism. Secondly, it examines a particularly prominent family of objections to mathematical nominalism, issuing from the applicability of mathematics. Thirdly, it looks at three kinds of response to that family of objections: reconstructive nominalism (aiming to show that, in principle, one can recreate the applications of mathematics without making mathematical claims), deflationary nominalism (aiming to show that the truth of mathematical claims does not require the existence of mathematical objects), and instrumentalist nominalism (aiming to show that one can make sense of standard mathematical practices without incurring a commitment to the truth of mathematical claims). Finally, it surveys the claims of some leading thinkers about the relationship between mathematical nominalism and naturalism.

Table of Contents

- Formulating Mathematical Nominalism

- The Origins of the Contemporary Debate

- Motivations for Nominalism

- Nominalism and the Application of Mathematics

- Reconstructive Nominalism

- Deflationary Nominalism

- Instrumentalism

- Mathematical Nominalism and Naturalism

- References and Further Reading

1. Formulating Mathematical Nominalism

At a first pass, one can describe mathematical nominalism as the view that mathematical entities do not exist. Some clarifications and caveats, however, should be kept in mind. Firstly, some theorists have held that mathematical entities are, in some sense, mental objects. (The Dutch mathematician and philosopher L.E.J. Brouwer is sometimes interpreted as having endorsed this view.) Nominalists, however, deny the existence of mathematical objects understood as abstract objects whose existence does not depend on any mental or linguistic activity. To understand this claim, one must appreciate the thought that all that there is, or that there might be, can be divided into two exclusive and exhaustive categories: the concrete and the abstract. Nominalists hold that abstract objects do not exist. Examples of concrete objects include tables, chairs, stars, human beings, molecules, and microbes, as well as more exotic theoretical entities such as electrons, bosons, dark matter, and so on. Paradigmatically, concrete objects are spatiotemporal and contingent, have causal powers, can themselves be affected, participate in events, and can be interacted with, even if only indirectly. There are two different senses in which entities can be abstract. In one sense, to be abstract means to be non-particular. It is in this sense that universals are said to be abstract. Universals are properties that can be instantiated by particular objects. The property of being negatively charged would be instantiated by particular electrons, for example. Some theorists have understood mathematical objects as universals (Bigelow 1988, Shapiro 1997). However, mathematical objects are typically conceived as being abstract in a different way: they are particular, but non-concrete. Paradigmatically, objects that are abstract in this sense are particular, but non-spatial, necessary, unchanging, acausal, and cannot be interacted with, even indirectly.
(The distinction between concrete and abstract entities is, however, difficult to analyze. Rosen (2020) provides a detailed discussion. See also Lewis (1986, 81–86) and Fitzgerald (2003).) Mathematical nominalism, then, can be more specifically described as the view that abstract mathematical entities do not exist, in either sense of “abstract”.

Secondly, some theorists hold that the term “exist” and its cognates are not univocal (see, for instance, Russell 1903; Brandom 1994; Miller 2002; Vallicella 2002; Putnam 2004; Hirsch 2011; Hofweber 2016; McDaniel 2017; Kimhi 2018). For example, the meaning of “exist(s)” in “Electrons exist” may not be the same as in “Giants exist in both Mesopotamian and Shinto mythology” or “A special bond exists between all philosophers of mathematics”. Further, some theorists hold that some usages of “exist(s)” are not ontologically committing—that is, one can talk of some things existing without thereby committing to those things being part of the furniture of reality—and, additionally, that this is true of existence claims about mathematical objects (Azzouni 2010b, 2017). On this view, mathematical objects can be said to exist in a way that is not ontologically significant, such that the existence of mathematical objects makes no demand on the world. Put differently, mathematical objects could be said to exist regardless of what the world is like. Mathematical nominalism, then, can be more specifically described as the view that abstract mathematical entities do not exist independently of mental or linguistic activity and in an ontologically significant sense of “exist”. To avoid unnecessary complication, however, these caveats will mostly be left implicit in what follows.

The terminology used is not uniform across the literature. Those who hold that abstract mathematical objects exist independently of mental or linguistic activity, in an ontologically significant sense, are usually called mathematical platonists. However, small-“p” platonists in this sense are not necessarily followers of Plato, and some reserve the term “Platonist” for views that have more in common with Plato’s own, calling the more generic view object realism (Linnebo 2017) or, apophatically, anti-nominalism (Burgess and Rosen 1997). At least one theorist, Rayo (2016), refers to the view that mathematical objects exist in an ontologically insignificant sense of “exist” as trivialist platonism or subtle platonism.

2. The Origins of the Contemporary Debate

Understanding contemporary defenses of nominalism requires understanding the motivations underlying anti-nominalism. Perhaps primary among these is a broadly representationalist view of language, according to which declarative claims (at the very least, simple declarative claims of subject-predicate form) purport to represent or describe the world as being a certain way. Declarative claims (again, at the very least, simple declarative claims of subject-predicate form) are true just in those cases where the world is the way they represent it as being, and to take a claim of this sort to be true is to take the world to be the way the claim represents it as being. For example, “The Forth Rail Bridge is red” says of the Forth Rail Bridge (the subject) that it is red (the predicate). So, a claim of this sort purports to denote something (the Forth Rail Bridge) and attributes a property to that thing (redness). The claim is true just in case the thing it purports to denote exists and has the property it ascribes to it. If the Forth Rail Bridge were turquoise, or did not exist, the claim would not be an accurate representation or description.

Mathematics similarly contains simple declarative claims of subject-predicate form, such as “Seven is prime”. This says of the number seven that it is prime. So, according to this broadly representationalist view, the claim purports to denote something (the number seven) and attributes a property to that thing (primeness). Since simple declarative claims are true just in those cases in which they accurately describe that of which they speak, “Seven is prime” is true just in case the thing it purports to denote exists and has the property it ascribes to it. If seven were not prime, or did not exist, the claim would not be an accurate representation or description.

A major influence here is Gottlob Frege’s pathbreaking work in the foundations of mathematics. In his Grundlagen der Arithmetik (1884), Frege defended the view that numerical expressions function as singular terms, and that singular terms are the parts of language which purport to pick out or refer to exactly one object. For Frege, singular terms are those that can correctly flank an identity sign “=”, or “is” when used to express identity, for instance: “The shortest-serving prime minister of the twentieth century is Bonar Law” or “The smallest number expressible as the sum of two positive cubes in two different ways = 1729”. The terms “The shortest-serving prime minister of the twentieth century”, “Bonar Law”, “the smallest number expressible as the sum of two positive cubes in two different ways”, and “1729” all purport to refer to exactly one object. For Frege, the truth of claims such as these requires not only that their singular terms purport to refer to exactly one object, but also that they succeed in doing so; that is, there must be such an object. The claim “The smallest number expressible as the sum of two positive cubes in two different ways is 1729” is true because there is a number, 1729, that is the smallest number expressible as the sum of two positive cubes in two different ways. On the other hand, no claim that uses “the largest prime number” as a singular term can be true, because there is no largest prime. These semantic considerations lay the groundwork for a simple but influential argument for mathematical platonism: some mathematical claims are true; therefore, there are mathematical objects. Since, it is widely supposed, these claims are true regardless of what anyone thinks or says, the existence of mathematical objects is mind- and language-independent.
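The arithmetic behind the example can be checked mechanically. The following is a minimal sketch with a hypothetical helper name, assuming that “cubes” means cubes of positive integers, as in the familiar Hardy–Ramanujan anecdote; if negative integers were admitted, 91 = 3³ + 4³ = 6³ + (−5)³ would be a smaller example. It records every way of writing each number up to a bound as a³ + b³ with 1 ≤ a ≤ b and returns the least number with at least two such representations.

```python
from collections import defaultdict


def smallest_two_way_cube_sum(limit: int = 2000) -> int:
    """Return the smallest n <= limit with two distinct representations
    n = a**3 + b**3, where 1 <= a <= b (positive integers only)."""
    representations = defaultdict(list)
    a = 1
    while a ** 3 <= limit:
        b = a
        while a ** 3 + b ** 3 <= limit:
            representations[a ** 3 + b ** 3].append((a, b))
            b += 1
        a += 1
    candidates = [n for n, pairs in representations.items() if len(pairs) >= 2]
    if not candidates:
        raise ValueError("no two-way cube sum found below the limit")
    return min(candidates)


if __name__ == "__main__":
    print(smallest_two_way_cube_sum())  # 1729 = 1**3 + 12**3 = 9**3 + 10**3
```

The search bound is an arbitrary assumption; it only needs to exceed 1729 for the check to succeed.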

The Polish logician Alfred Tarski’s (also pathbreaking) work on truth helped to ensconce this broadly representationalist picture. Tarski’s own interests were not in defending a philosophical account of language, but in showing how to define a notion true-in-L for some formal language L in a way that avoids the Liar Paradox (Tarski 1935, 1944). What emerged is known as a semantic theory of truth, so-called not because it has to do with meaning per se, but because, in line with contemporaneous usage of the word “semantic”, it has to do with relations between words and things. Tarski’s approach depends in part on stipulating what each singular term in L denotes, and which things or sequences of things “satisfy” the predicates of L. For example: “x is red” is satisfied by the Forth Rail Bridge if and only if the Forth Rail Bridge is red, and “x admires y” is satisfied by the ordered pair <Thom, Jonny> if and only if Thom admires Jonny.

Tarski’s work formed the basis of a new branch of mathematics, model theory, and, subsequently, of the model-theoretic accounts of language, which became mainstream in formal semantics by the end of the twentieth century. This is the approach to semantics familiar from logic textbooks. There, an interpretation of a language specifies a domain (the set of things the language is about, given the interpretation) and assigns to the elements of the language itself semantic values drawn from that domain: objects to constants, sets and relations to predicates, and, relative to an assignment, objects to variables. The truth values of sentences are then fixed by these assignments.
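A toy model may help make the ideas of an interpretation and of satisfaction concrete. The sketch below is a hypothetical illustration, not Tarski’s own formalism: it assumes a finite domain, lets a Python dictionary play the role of the interpretation (mapping a singular term to an object and each predicate to its extension, a set of tuples of objects), and treats a sequence of objects as satisfying a predicate just in case the sequence lies in that extension. The names `satisfies` and `exists_satisfier` are invented for the example.

```python
from itertools import product

# A hypothetical finite interpretation for a tiny language.
# The domain is the set of things the language is about (under this interpretation).
DOMAIN = {"Forth Rail Bridge", "Thom", "Jonny"}

# Singular terms denote objects; predicates denote extensions,
# i.e. sets of tuples of domain elements.
INTERPRETATION = {
    "the_bridge": "Forth Rail Bridge",
    "red": {("Forth Rail Bridge",)},
    "admires": {("Thom", "Jonny")},
}


def satisfies(predicate: str, sequence: tuple) -> bool:
    """A sequence of objects satisfies an n-place predicate iff the
    sequence belongs to the predicate's extension."""
    return sequence in INTERPRETATION[predicate]


def exists_satisfier(predicate: str, arity: int) -> bool:
    """Existential quantification: true iff some sequence of domain
    elements of the given length satisfies the predicate."""
    return any(satisfies(predicate, seq) for seq in product(DOMAIN, repeat=arity))


# "The Forth Rail Bridge is red": the term's denotation satisfies "x is red".
print(satisfies("red", (INTERPRETATION["the_bridge"],)))  # True
# "x admires y" is satisfied by <Thom, Jonny> but not by <Jonny, Thom>.
print(satisfies("admires", ("Thom", "Jonny")))  # True
print(satisfies("admires", ("Jonny", "Thom")))  # False
# "Something admires something" is true in this interpretation.
print(exists_satisfier("admires", 2))  # True
```

In this setting an existentially quantified sentence is true only if something in the domain satisfies the relevant formula, which is the feature that, carried over to mathematical sentences, seems to require mathematical objects in the domain.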

Like Frege’s analysis of singular terms, the formal semantics that stems from Tarski’s semantic account of truth appears to entail platonism, so long as one holds that there are true mathematical sentences. This point was made by Paul Benacerraf in a canonical and widely cited 1973 paper “Mathematical Truth”. Benacerraf holds that Tarski’s is “the only viable systematic general account we have of truth” (Benacerraf 1973, 670) and that a uniform semantics or theory of truth should be given to both non-mathematical parts of natural language (for example, “There are at least three large cities older than New York”) and to mathematese (for example, “There are at least three perfect numbers greater than 17”). One reason is that:

The semantical apparatus of mathematics [should] be seen as part and parcel of that of the natural language in which it is done, and thus that whatever semantical account we are inclined to give of names or, more generally, of singular terms, predicates, and quantifiers in the mother tongue include those parts of the mother tongue which we classify as mathematese. (Benacerraf 1973, 666)

A distinct, but closely related, reason is that logical consequence is standardly defined in terms of truth (Tarski 1936). Roughly speaking: a set of sentences Σ logically entails a sentence ϕ just in case there is no interpretation according to which every sentence in Σ is true but ϕ is false. If mathematical truth were not understood along Tarskian (and therefore apparently platonist) lines, we would require a new account not just of mathematical truth but also of logical consequence, but no such accounts are forthcoming (Benacerraf 1973, 670).
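The model-theoretic definition of consequence can be illustrated in the simplest setting, propositional logic, where an interpretation reduces to an assignment of truth values to sentence letters. The sketch below is a hypothetical illustration rather than the first-order definition itself: first-order interpretations also fix a domain and extensions, and there are infinitely many of them, so they cannot be enumerated in this way. It checks entailment by searching for an interpretation that makes every member of Σ true and ϕ false; the function and formula names are invented for the example.

```python
from itertools import product
from typing import Callable, Dict, List

Interpretation = Dict[str, bool]  # truth-value assignment to sentence letters
Formula = Callable[[Interpretation], bool]


def entails(premises: List[Formula], conclusion: Formula, letters: List[str]) -> bool:
    """Sigma entails phi iff no interpretation makes every member of Sigma
    true and phi false (checked by enumerating all 2**len(letters) cases)."""
    for values in product([True, False], repeat=len(letters)):
        interpretation = dict(zip(letters, values))
        if all(s(interpretation) for s in premises) and not conclusion(interpretation):
            return False  # a countermodel exists
    return True


# Sentence letters p and q; formulas are encoded as functions of an interpretation.
def p(i: Interpretation) -> bool:
    return i["p"]


def q(i: Interpretation) -> bool:
    return i["q"]


def p_implies_q(i: Interpretation) -> bool:
    return (not i["p"]) or i["q"]


print(entails([p, p_implies_q], q, ["p", "q"]))  # True: modus ponens is valid
print(entails([q, p_implies_q], p, ["p", "q"]))  # False: affirming the consequent
```

The second check fails because the interpretation assigning false to p and true to q makes both premises true and the conclusion false.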

Similarly influential was the Harvard logician W.V.O. Quine. Quine’s (also canonical and widely cited) 1948 paper “On What There Is” did much to establish as orthodox the view that the existential quantifier is ontologically committing. In Quine’s own words:

The variables of quantification, ‘something’, ‘nothing’, ‘everything’, range over our whole ontology, whatever it may be; and we are convicted of a particular ontological presupposition if, and only if, the alleged presuppositum has to be reckoned among the entities over which our variables range in order to render one of our affirmations true. (Quine 1948, 32)

Despite the paper’s influence, what the argument for that conclusion is, or whether it offers an argument at all, is disputed. Elsewhere, however, Quine defends the claim that the existential quantifier expresses existence on the grounds that its meaning is given by the English phrase “there is an object x such that…” and that this expresses existence (Quine 1986, 89).

3. Motivations for Nominalism

Although mathematical nominalism is the metaphysical claim that no mathematical objects exist, the chief argument for nominalism centers around the epistemological concern that we cannot have knowledge of mind-independent and language-independent mathematical objects even if they do exist, or, more weakly, that it is a mystery how we could have knowledge of mathematical objects so conceived. (See the article on The Benacerraf Problem of Mathematical Truth and Knowledge.) The upshot of these arguments is not the de facto claim that mathematical objects do not exist, but the de jure claim that we ought not to believe in mathematical objects. The epistemological problem with mathematical objects arises from the difficulty in squaring what abstract objects are like, if they exist, with what we know about ourselves as enquirers with particular capacities, abilities, and faculties for gaining knowledge of what the world is like. Mathematical objects as abstract objects are not only the sort of things that cannot be touched or seen, but they also cannot be interacted with or manipulated in any way. They have no effects, nor do they participate in events that could, even in principle, impinge on one’s experience. Facts about abstract objects, as the platonist understands them, cannot make a difference to any data one might have or come to acquire, or to any beliefs one might come to hold.

The canonical articulation of the epistemological problem is due to Benacerraf (in the same paper in which he advocates a Tarskian account of mathematical truth). Our account of mathematical knowledge, Benacerraf claimed, “must fit into an over-all account of knowledge in a way that makes it intelligible how we have the mathematical knowledge that we have” (Benacerraf 1973, 667), in particular:

A causal account of knowledge on which for [some person] X to know that [some sentence] S is true requires some causal relation to obtain between X and the referents of the names, predicates, and quantifiers of S. (Benacerraf 1973, 671)

A causal criterion for knowledge immediately rules out knowledge of abstract objects since they are acausal.

As the causal theory of knowledge waned in popularity so did Benacerraf’s particular formulation of the epistemological problem. However, it is too quick to conclude from the failure of causal analyses of knowledge that there is no sound causal-epistemological argument against the possibility of knowledge of abstract objects. This depends on whether the analysis fails because an appropriate causal connection between an agent and the object of belief is not sufficient for knowledge, or because such a connection is not necessary for knowledge. If it is the latter, then showing that there are no causal connections between an agent who holds mathematical beliefs and mathematical objects would not show that something required for knowledge is lacking. On the other hand, if appropriate causal connections are insufficient but necessary for knowledge, then the causal objection would go through. The most influential objections to causal theories are of the former sort: appropriate causal connections, it is argued, are necessary but not sufficient for knowledge, as an appropriate causal connection can exist between a person’s belief and the object of this belief, while the belief is true only by luck, and hence not known (Goldman 1976). Others however have claimed that causal connections cannot be necessary for knowledge, as this would rule out knowledge of the future (Burgess and Rosen 1997; Potter 2007).

Some have argued for more modest, restricted versions of a causal criterion, which are not committed to the claim that all knowledge requires an appropriate causal connection between the knower and the object of her knowledge. Colin Cheyne (1998, 2001) claims that the causal criterion applies to existential knowledge, arguing that this is supported by examples from empirical science. More recently, Nutting (2016) has argued that a Benacerraf-like argument can be made that does not rely on a general causal criterion, but on the more defensible claim that direct knowledge (that is, knowledge of a claim that is not gained via an inference from another claim) requires some kind of appropriate causal connection. This, along with the premise that the objects of mathematical knowledge are acausal mathematical objects, and the premise that if we have any mathematical knowledge, some of it must be direct, entails that mathematical knowledge is impossible.

Others have characterized the epistemological objection in different terms. W.D. Hart claims that the epistemological problem does not concern causal theories of knowledge in particular, but empiricism more generally. Empiricism, as Hart understands it, is “the doctrine that all knowledge is a posteriori” (Hart 1977, 125). A posteriori knowledge is “justified ultimately by experience” (ibid.). Experience, in turn, “requires causal interaction with the objects experienced” (ibid.). Yet, causal interaction with mathematical objects is impossible. Though there is not a strict incompatibility between these tenets—unless one reads them as making the more specific claim that, for all x, knowledge of x requires experience of x—it is enough to set up a prima facie tension between empiricism and platonism.

Hartry Field (1989) reformulated the epistemic problem as a challenge to explain how our beliefs about abstract mathematical objects could be reliable. Realists about mathematical objects think that their beliefs about mathematical objects are largely true. If so, those beliefs are highly correlated with the mathematical facts. The platonist, however, “must not only accept the reliability, but must commit himself or herself to the possibility of explaining it” (Field 1989, 26). Yet there appear to be serious difficulties in doing so. On the one hand, the platonist conception of mathematical objects as acausal and mind-independent:

means that we cannot explain the mathematicians’ beliefs and utterances on the basis of those mathematical facts being causally involved in the production of those beliefs and utterances; or on the basis of the beliefs and utterances causally producing the mathematical facts; or on the basis of some common cause producing both. (Field 1989, 231)

On the other hand, “it is very hard to see what [a] supposed non-causal explanation could be” (Field 1989, 231). If the reliability of mathematical beliefs—as understood by the platonist, that is, as beliefs about mind-independent abstract objects—appears impossible to explain, this would “undermine the belief in mathematical entities, despite whatever reason we might have for believing in them” (Field 1989, 26).

A prominent attempt to explain the reliability of mathematical beliefs, given platonism, is due to Balaguer (1998). Balaguer responds to Field’s challenge by invoking a “full-blooded platonist” view (often referred to as “set-theoretic pluralism” or as a “set-theoretic multiverse” view) according to which, roughly, every coherently describable universe of mathematical objects exists. So long, then, as our mathematical belief-forming methods result in consistent mathematical beliefs, they will accurately describe some mathematical objects. This, Balaguer argues, offers a platonistic explanation of the reliability of mathematical beliefs.

Two issues should be noted. Firstly, for the explanation to succeed, there must be something about our linguistic practices that makes it the case that our mathematical claims are always about the mathematical objects of which they would be true (Clarke-Doane 2020). For example, Zermelo-Fraenkel set theory with the axiom of choice (ZFC), if it is consistent, is consistent both with the hypothesis CH that there is no set with cardinality larger than that of the integers but smaller than that of the real numbers, and with the negation of CH. On a universe view, there is one set-theoretic universe (characterizable, say, by ZFC), and CH either accurately or inaccurately characterizes that universe. On a multiverse view, there is a plurality of set-theoretic universes characterizable by ZFC (as well as yet further universes characterizable by different consistent sets of axioms), some of which are accurately characterizable by CH (ZFC + CH universes) and others by the negation of CH (ZFC + ¬CH universes). To secure reliability, it must be the case that when one makes claims that are true of, for instance, ZFC + CH universes, one is in fact talking about ZFC + CH universes and not, rather, making false claims about ZFC + ¬CH universes. There are some difficulties in pinning down what it is about our language that would make this the case. (See Putnam (1980). Button (2013) gives a book-length treatment and Button and Walsh (2018, chapter 2) provide an introduction to these issues concerning reference.)

Secondly, meeting Field’s challenge removes one epistemological objection to platonism, but does not show that knowledge of mathematical objects, as understood by the platonist, is possible. This is because reliability is a necessary, but not a sufficient, condition for knowledge. It is possible for a belief-forming process to be serendipitously reliable in a way that is not sufficient for knowledge. For instance, if someone has a brain lesion that causes them to believe that they have a brain lesion (Plantinga 1993), that person’s belief-forming process reliably leads, in the case of this belief (that they have a brain lesion), to a true belief, but still involves a kind of epistemic luck that is antithetical to knowledge. Standard accounts of epistemic luck appeal to safety or sensitivity conditions on knowledge, and these conditions are analyzed in terms of what the agent would have believed in metaphysically possible worlds suitably related to the actual world. For this reason, they are often taken to be inapplicable to mathematical platonism. If platonism is true, it is true in all metaphysically possible worlds. The upshot is that standard safety and sensitivity conditions are trivially met in cases of necessary truths, so that every belief whose object is a necessary truth would meet them even if it is gained by luck. Collin (2018) argues that it is possible to formulate an epistemological argument against platonism in terms of epistemic luck: by analyzing safety and sensitivity conditions in terms of epistemically possible scenarios, rather than metaphysically possible worlds, one can apply safety and sensitivity conditions to necessary truths.

No formulation of the epistemological objection is uncontroversial, but a felt sense that something is epistemically worrying about abstract objects is common in the philosophical literature. Pinning down exactly what the epistemological problem with mathematical objects is remains an open task for nominalists.

4. Nominalism and the Application of Mathematics

The semantic argument for mathematical platonism presented above suggests two genera of nominalist responses. The first is to deny the mainstream semantic assumptions that undergird the inference from the truth of simple declarative mathematical claims to the existence of mathematical objects. In broad terms, this could mean either dropping representationalism—and holding that simple declarative mathematical claims do not purport to represent a domain of mathematical objects in any substantive sense of “represent”—or retaining representationalism but holding that the mathematical objects being represented are non-existent objects. The second is to accept mainstream semantic assumptions, but to hold that simple declarative mathematical claims are false because there are no mathematical objects. On this second kind of view, the standards of correctness or incorrectness for mathematical claims are not, strictly speaking, standards of truth and falsehood.

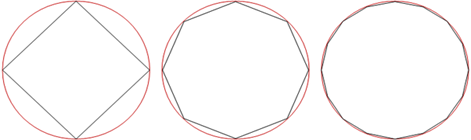

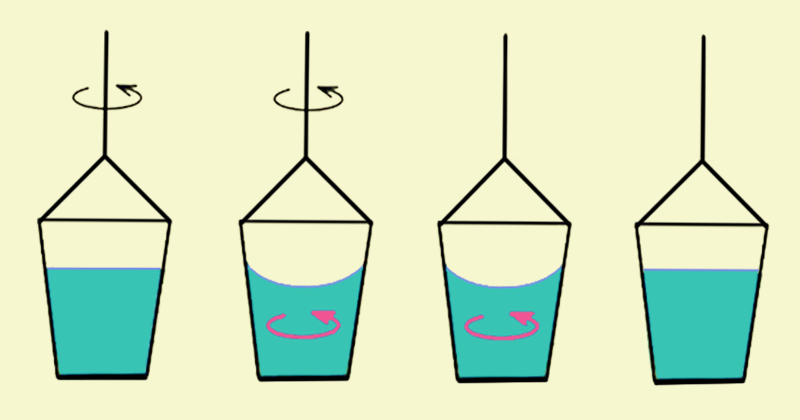

Both sorts of response are complicated by the applicability of mathematics. There exists a large literature on the applicability of mathematics (see, for example, Frege 1884; Suppes 1960; Carnap 1967; Putnam 1971; Krantz and others 1971; Field 1980; Resnik 1997; Shapiro 1997; Steiner 1998; Azzouni 2004; Chang 2004; Chihara 2004; van Fraassen 2008; Bueno and Colyvan 2011; Bangu 2012; Pincock 2012; Weisberg 2013; Morrison 2015; Bueno and French 2018; Ketland 2021; Leng 2021; the article on The Applicability of Mathematics). However, a brief overview is enough to reveal its relevance to mathematical nominalism. At a high level of generality, mathematics is applied within the sciences in the following way. Scientists devise equations which can be used to model or represent concrete systems. Measurement procedures are used to assign mathematical values to aspects of the target concrete system and these values are “plugged in” to the equations. When mathematics is not merely a predictive tool, different values within the equations correspond to different magnitudes of properties of the concrete system. By manipulating the equations, one can then make predictions about the concrete system. These predictions are (directly or indirectly) testable when the mathematical results are (directly or indirectly) associated with measurement procedures.

Consider, for example, a closed vessel of volume V (in m³) containing a gas. The pressure P (in pascals) of the gas can be measured using a manometer, and the temperature T (in kelvins) can be measured using a thermometer. Letting n be the number of moles of gas (where 1 mole ≈ 6.02 × 10²³ molecules), and R be the ideal gas constant (≈ 8.314 J mol⁻¹ K⁻¹), the ideal gas law (in molar form) tells us:

PV = nRT

Though only approximately accurate, the ideal gas law allows one to calculate physical quantities and make predictions about the behavior of gases in a range of circumstances. It not only tells us, for instance, that increasing the temperature of the gas while holding the volume of the vessel fixed will increase the pressure, but also allows us to calculate precisely (idealization notwithstanding) by how much. It also allows us to calculate the number of moles of gas, provided we can measure the pressure, volume, and temperature of the system, by rearranging the equation:

n = PV/(RT)
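As a worked illustration of the two uses just described, the sketch below first infers the amount of gas from measured pressure, volume, and temperature, and then predicts the pressure after heating at fixed volume. The measured values are hypothetical, chosen only for illustration; R is taken as 8.314 J mol⁻¹ K⁻¹ and the gas is assumed to behave ideally.

```python
R = 8.314  # ideal gas constant in J/(mol*K), rounded as in the text


def moles(pressure_pa: float, volume_m3: float, temperature_k: float) -> float:
    """Amount of gas in moles: n = PV / (RT)."""
    return pressure_pa * volume_m3 / (R * temperature_k)


def pressure(n_mol: float, volume_m3: float, temperature_k: float) -> float:
    """Pressure in pascals: P = nRT / V."""
    return n_mol * R * temperature_k / volume_m3


# Hypothetical measurements: roughly 1 atm, 24 litres, 20 degrees Celsius.
P1, V, T1 = 101_325.0, 0.024, 293.15

# Use 1: infer the amount of gas from measured P, V and T.
n = moles(P1, V, T1)
print(f"n = {n:.3f} mol")

# Use 2: predict the pressure after heating the sealed vessel (V fixed)
# to 373.15 K; with n and V fixed, P scales with T, so P2 / P1 = T2 / T1.
T2 = 373.15
P2 = pressure(n, V, T2)
print(f"P2 = {P2:.0f} Pa")
```

The algebraic manipulation is carried out entirely on numbers; the physical content enters only through the measurement procedures that assign those numbers to the vessel and its contents.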

In other cases, the mathematics and the measurement procedures are far more complex. There are also enduring questions in areas such as the philosophy of quantum mechanics about what physical quantities mathematical objects such as wavefunctions correspond to, or whether they are merely predictive tools. However, the general contours of the picture remain the same: representing properties of physical systems using numbers allows algebraic reasoning to be used to describe, make predictions about, and explain features of the concrete world.

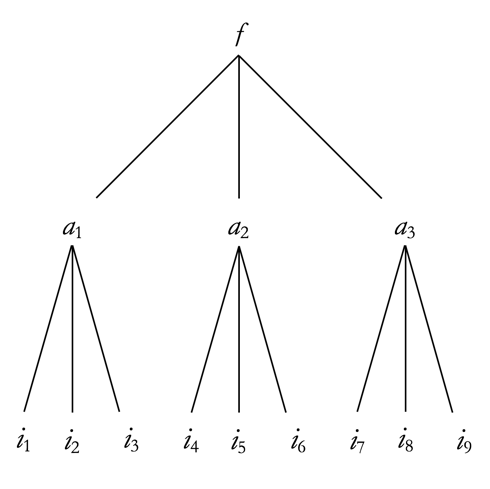

One, then, can think of the language of mathematical science as being two-sorted, that is, ranging over two kinds of thing:

- concrete entities, using primary variables: x₁, x₂, …, xₙ.

- abstract entities, using secondary variables: y₁, y₂, …, yₙ.

and, therefore, containing three kinds of predicate:

- (i) concrete predicates, expressing relations between concreta: C₁, C₂, …

- (ii) abstract predicates, expressing relations between abstracta: A₁, A₂, …

- (iii) mixed predicates, expressing relations between concrete and abstract objects: M₁, M₂, …

Measurement is one clear example of (iii). Measurements describe physical quantities by associating them with numerical magnitudes. Take the claim “The mass of d₁ is 5 kilogrammes”, where d₁ is a concrete object, or “The temperature of d₂ is 30 kelvins”, where d₂ is a concrete system. The first is expressed more formally as “M_kg(d₁) = 5”—which describes a function from a concrete object, d₁, to an abstract object, the number 5—and the second as “T_K(d₂) = 30”—which describes a function from a concrete system, d₂, to an abstract object, the number 30. Although these are, in one sense, about the concrete world, they refer to both physical objects and abstract mathematical objects. Scientific theories, then, when regimented, involve a combination of claims about concrete entities, claims about mathematical entities, and claims about both concrete and mathematical entities.
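The two-sorted regimentation can be mimicked with types. In the hypothetical sketch below, concrete objects and numbers are kept in separate sorts, and the mixed function symbols for mass in kilogrammes and temperature in kelvins map the first sort into the second; the object names and values are the illustrative ones from the text, not real data, and modelling numbers as Python floats is a simplifying assumption. The last function shows why such claims matter for the debate that follows: from “M_kg(d1) = 5” it follows, in the regimented theory, that there is something in the abstract sort, a number, which is d1’s mass.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Concrete:
    """First sort: concrete objects or systems (values of the x-variables)."""
    name: str


# Second sort: abstract objects, modelled here by Python floats
# (values of the y-variables).
Number = float

d1 = Concrete("d1")  # a concrete object
d2 = Concrete("d2")  # a concrete system

# Illustrative measurement results, taken from the examples in the text.
MASS_KG: Dict[Concrete, Number] = {d1: 5.0}
TEMP_K: Dict[Concrete, Number] = {d2: 30.0}


def mass_kg(x: Concrete) -> Optional[Number]:
    """Mixed function symbol M_kg: concrete sort -> abstract sort.
    Returns None where no value has been assigned."""
    return MASS_KG.get(x)


def temp_k(x: Concrete) -> Optional[Number]:
    """Mixed function symbol T_K: concrete sort -> abstract sort."""
    return TEMP_K.get(x)


def some_number_is_mass_of(x: Concrete) -> bool:
    """'There is a y such that M_kg(x) = y': true iff the second sort
    supplies a witness, namely the number assigned to x."""
    return mass_kg(x) is not None


print(mass_kg(d1) == 5)            # "M_kg(d1) = 5"  -> True
print(temp_k(d2) == 30)            # "T_K(d2) = 30"  -> True
print(some_number_is_mass_of(d1))  # existential claim over numbers -> True
```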

a. The Indispensability Argument

This puts pressure on the two genera of nominalist views mentioned above. Regarding the first, if nominalists deny that mathematical sentences have the same semantics as sentences about concrete objects, then a puzzle arises about mixed sentences, roughly: what is the semantics for mathematical sentences and how does it combine with ordinary semantics to produce meaningful mixed sentences? Regarding the second, if nominalists deny that mathematical sentences are (strictly speaking) true, then they must also deny that many of the best scientific theories are true, for the best scientific theories are replete with mathematical claims. Moreover, we appear to have at least some justification for believing that the best scientific theories are true, as they receive empirical confirmation as a result of making testable predictions.

Considerations like these have brought about a very important and influential challenge to nominalism: the indispensability argument. In fact, to talk of the indispensability argument is misleading, since there are a number of distinct arguments that fall under that rubric (see, for instance, Quine 1948, 1951, 1976, 1981; Putnam 1971; Maddy 1992; Resnik 1995; Colyvan 2001; Leng 2010; the article on the Indispensability Argument). Something like a core indispensability argument can, however, be isolated, and, because many of the forms that nominalism might take have come about largely in response to the premises of this core argument, describing it allows us to produce a useful taxonomy of nominalisms. At its heart, the indispensability argument is designed to show that nominalism is incompatible with the claims of science. Science—or at least the science of the twentieth and beginning of the twenty-first centuries—asserts the existence of abstract objects, so if its claims are true, nominalism is false; if we are justified in believing its claims, we are not justified in believing nominalism. This core indispensability argument has three premises:

(Realism) The best current scientific theories are true (or at least approximately true).

(Indispensability) The best current scientific theories indispensably quantify over abstract objects.

(Quine’s Criterion) The existential quantifier ∃x expresses existence.

Something should be said about each of the premises. (Realism) is not as straightforward as it looks, since the denial of realism, instrumentalism, can be characterized in a number of different ways. The anti-nominalists John Burgess and Gideon Rosen have characterized a rejection of (Realism) as amounting to the claim that “standard science and mathematics are no reliable guides to what there is” (Burgess and Rosen 1997, 60–61). However, the most fully developed instrumentalist nominalism, that of Mary Leng, seeks to provide an account of how a denial of realism is compatible with substantive scientific knowledge of the concrete world. (Quine’s Criterion) is motivated by broadly the sort of semantic considerations discussed earlier. Finally, (Indispensability) is also not wholly straightforward. For one thing, technical results show that, strictly speaking, (Indispensability) is false. The method of Craigian elimination can transform a two-sorted theory Γ, quantifying over two kinds of things, into a theory Γ° with infinitely many primitives and axioms that quantifies over only one of those kinds of things. So, there is a known mechanism by which quantification over mathematical entities can be dispensed with. Recommending Craigian elimination as a response to (Indispensability) appears however not to be sufficient for the nominalist, partly because the process is not thought to explain the success of mathematical theories (see Burgess and Rosen 1997, I.B.4.b for details). For another thing, the indispensability of quantification over mathematical objects may appear idle with respect to the argument. If the best scientific theories are true and assert the existence of mathematical objects, then nominalism is false regardless of whether it is possible to formulate other theories that dispense with quantification over mathematical objects. 
However, as explained below, some have taken programs of dispensing with quantification over mathematical objects in physical theories as a means of explaining the predictive success of theories that do quantify over mathematical objects, without assuming the truth of what they say about mathematical objects, thereby undercutting the main motivation for (Realism). Alternatively, or in addition, nominalists who take it to be possible to dispense with quantification over mathematical objects may argue that the resulting theories are superior, perhaps on the grounds of ontological parsimony, or on the grounds that they avoid the epistemological problems associated with abstract objects, or on the grounds that they provide more perspicuous intrinsic descriptions and explanations of physical systems and their behavior: descriptions and explanations that appeal to intrinsic facts about those systems rather than their relations to mathematical objects. In that case, the best scientific theories will not quantify over mathematical objects.

A fourth premise, confirmation holism, is also often thought to be crucial to the indispensability argument, both by those who defend and those who resist the argument (see, for instance, Colyvan 2001; Maddy 1997; Sober 1993; Leng 2010; Resnik 1997). Confirmation holism is the claim that confirmation accrues to theories as a whole rather than accruing only to proper parts of those theories. (Realism), (Indispensability) and (Quine’s Criterion) jointly entail the falsity of nominalism, so confirmation holism is not required to make the argument logically valid. However, confirmation holism is sometimes taken to support (Realism). If empirical confirmation could accrue only to proper parts of theories, nominalists might be able to argue that it accrues only to those claims that quantify solely over concrete objects. If confirmation holism is true, however, then the empirical confirmation the best scientific theories enjoy also applies to the claims they make about mathematical objects.

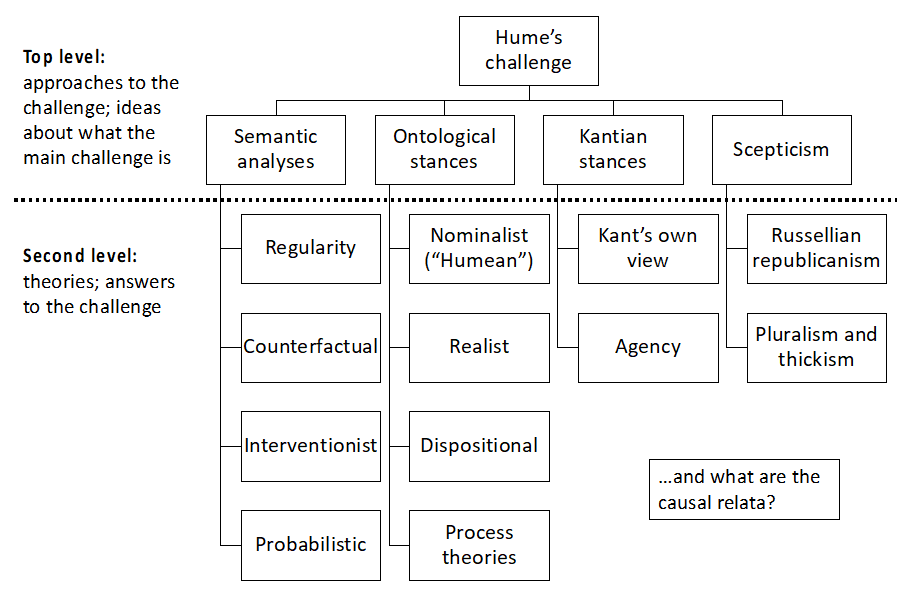

The three premises of the core indispensability argument are reflected in a trifurcation of approaches to nominalism. Some nominalists reject (Indispensability) and attempt to show that we can (in certain important contexts) get by without talking about abstract objects. This is reconstructive nominalism. Others reject (Quine’s Criterion), holding that one can make true claims “about” abstract objects without abstract objects existing: “There are infinitely many primes” really can be true without any primes existing (in an ontologically significant sense). This is hermeneutic or deflationary nominalism. Still others reject (Realism). They take mathematical claims, even those that appear in the best scientific theories, to be strictly false. Rather than attempting to show that we can get by without such claims, they offer an account, not committed to the existence of mathematical objects, of why we speak this way and why it is useful to do so. This is instrumentalist nominalism.

5. Reconstructive Nominalism

The first of the premises to be concertedly challenged by nominalists was (Indispensability), and there have been many attempts to show, wholly or partially, that quantification over mathematical objects can be dispensed with (a useful overview can be found in Burgess and Rosen 1997, III.B.I.a). Hartry Field’s efforts, and responses to them, have been dominant in the philosophical literature on indispensability, to the extent that some discussion of indispensability carries on as though the failure of Field’s project would amount to the failure of reconstructive nominalism. Here we examine, in some detail, two important and representative strategies for dispensing with reference to and quantification over mathematical objects: Charles Chihara’s modal strategy and Field’s geometrical strategy.

a. Chihara

Originally, Chihara responded to (Indispensability) by developing a predicative system of mathematics which avoided quantification over mathematical objects by using constructibility quantifiers instead of the standard quantifiers (Chihara 1973). Concerned that not all of the mathematics needed for contemporary science could be reconstructed in a predicative system, he has since retained the use of constructibility quantifiers but developed a different system without these restrictions (Chihara 1990, 2004, 2005). It is Chihara’s developed view that is discussed here.

In standard mathematics, the “official claims”, as it were, come in an apparently existential form: they appear to be claims about what mathematical objects exist and what relations they bear to each other. Things were not always so. In Euclid’s Elements we find the following axioms of geometry:

A straight line can be drawn joining any two points;

Any finite straight line can be extended continuously in a straight line;

For any line a circle can be drawn with the line as radius and an endpoint of the line as center.

These axioms concern not what exists or is “out there”, but what it is possible to construct. The claims of Euclidean geometry are modal rather than existential and, as a result, do not have any obvious ontological commitments to abstract (or, for that matter, concrete) objects. Geometry was principally carried out in this modal language for thousands of years, though by the twentieth century it had become common to make geometrical claims in existential language. Hilbert in his 1899 Grundlagen der Geometrie (Foundations of Geometry) gives the following as his first three axioms of geometry:

For every two points A, B there exists a line L that contains each of the points A, B;

For every two points A, B there exists no more than one line that contains each of the points A, B;

There exist at least two points on a line. There exist at least three points that do not lie on a line.

Hilbert’s axioms, in contrast to Euclid’s, appear existential, describing which points and lines exist. The contrast may be philosophically significant for the nominalist: Euclid’s geometry shows that it is possible to practice mathematics (at least one part of mathematics) without making any claims about the existence of abstract mathematical objects. Chihara’s nominalism takes its cue from Euclid’s modal geometry; it aims to do all mathematics, or all the mathematics we need, in the modal rather than the existential mode. His goal is to:

Develop a mathematical system in which the existential theorems of traditional mathematics have been replaced by constructibility theorems: where, in traditional mathematics, it is asserted that such and such exists, in this system it will be asserted that such and such can be constructed. (Chihara 1990, 25)

Although Chihara works out this project in a good deal of technical detail, the fundamental idea behind it is straightforward enough. Whereas Field, as explained below, attempts to replace mathematized physics with nominalistic physics, Chihara attempts to replace standard pure mathematics with a system of mathematics that makes no claims about the existence of mathematical objects. This nominalistic surrogate for standard mathematics could, then, be true without mathematical objects existing.

i. Constructibility Theory

Chihara works out a modal version of simple type theory (henceforth STT) called “constructibility theory” (henceforth Ct). The language of STT contains the standard quantifiers “∃x” (meaning “there is an object x such that…”) and “∀x” (meaning “every object x is such that…”) and the set-theoretic membership relation “∈”, which is used to express which entities are in a set. “Thom ∈ {Thom, Jonny, Phil, Colin, Ed}” means that Thom is in the set containing Thom, Jonny, Phil, Colin and Ed, “√2 ∈ ℝ” means that the number √2 is in the set of real numbers, and so on. As the language of STT contains “∃x”, “∀x” and “∈”, STT is used (at least apparently) to make assertions about which sets exist. Sets can contain ordinary objects, both concrete and abstract, and they can also contain other sets. The claims that can be made in STT about which sets exist are not unrestricted; if they were, one could claim that there is a set which contains all and only those sets that do not contain themselves: ∃x∀y(y∈x↔y∉y). Consider the set just described: does it contain itself? If it does not contain itself, then it follows that it does contain itself, as it is the set that contains all sets that do not contain themselves. On the other hand, if it does contain itself, then it follows that it does not contain itself, because it is the set that contains only those sets that do not contain themselves. This is Russell’s paradox. To avoid this incoherence, sets in STT are on levels: a set on level n+1 can contain only objects or sets on level n, the level immediately below it, so the question whether a set contains itself cannot even be posed. On level-0 there are ordinary objects; on level-1, sets containing ordinary objects; on level-2, sets containing sets that contain ordinary objects; and so on.
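The level discipline can be illustrated with a small sketch. It assumes a hypothetical toy class `TypedSet` (not part of any library, and not STT's official presentation) that refuses to form a level-(n+1) set unless all its members sit on level n, and a membership test `is_member` that rejects any claim not of the form "level-n item ∈ level-(n+1) set". Under these assumptions, "y ∈ y" is never well-formed, so the Russell condition has no instance.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Individual:
    """A level-0 ordinary object."""
    name: str
    level: int = 0

@dataclass(frozen=True)
class TypedSet:
    """Hypothetical toy class: a set on level n >= 1 whose members all sit on level n - 1."""
    members: frozenset
    level: int

    def __post_init__(self):
        # Enforce the level discipline at the moment the set is formed.
        if self.level < 1:
            raise ValueError("sets live on level 1 or higher")
        for m in self.members:
            if m.level != self.level - 1:
                raise TypeError(
                    f"a level-{self.level} set cannot contain a level-{m.level} item"
                )

def is_member(item, s):
    """'item ∈ s' is well-formed only if s is a set exactly one level above item."""
    if not isinstance(s, TypedSet) or s.level != item.level + 1:
        raise TypeError("ill-formed membership claim")
    return item in s.members

thom, jonny = Individual("Thom"), Individual("Jonny")
band = TypedSet(frozenset({thom, jonny}), level=1)

print(is_member(thom, band))  # True
try:
    is_member(band, band)  # the self-membership question behind Russell's paradox
except TypeError as err:
    print("rejected:", err)
```

Since `is_member(y, y)` raises for every `y`, the condition "y ∉ y" in ∃x∀y(y∈x↔y∉y) cannot be evaluated at all, which is the sense in which the levels block the paradox.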

In Chihara’s system, the existential quantifier “∃x” and universal quantifier “∀x” are supplemented with modal constructibility quantifiers “Cx” and “Ax”, which, instead of making assertions about what exists, make assertions about which sentences are constructible. Corresponding to the existential quantifier “∃x” is “Cx”. Claims of the form “(Cϕ)ψϕ” mean:

It is possible to construct an open sentence ϕ such that ϕ satisfies ψ

Corresponding to the universal quantifier “∀x” is “Ax”. Claims of the form “(Aϕ)ψϕ” mean:

Every open sentence ϕ that it is possible to construct is such that ϕ satisfies ψ

To understand the constructibility quantifiers “Cx” and “Ax”, one must understand what it is for an open sentence to be satisfied or to satisfy other open sentences. Take the open sentence “x is the writer of Gormenghast”. This sentence is satisfied by Mervyn Peake—that is, the person who wrote Gormenghast. So, open sentences can be satisfied by ordinary objects. But they can also be satisfied by other open sentences. Consider the sentence “There is at least one object that satisfies F”. This is satisfied by the open sentence “x is the writer of Gormenghast”. Open sentences, like the sets of STT, are on levels: at level-0, there are ordinary objects; at level-1, open sentences that are satisfied by ordinary objects; at level-2, open sentences that are satisfied by open sentences that are satisfied by ordinary objects, and so on.
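The level structure of satisfaction can be mimicked, loosely, by treating open sentences as Python predicates over a finite stock of objects. This is only an analogy under stated assumptions: the domain below is a hypothetical toy list, and a Python function is an actual object rather than a merely constructible sentence, so the sketch shows how level-2 sentences take level-1 sentences as satisfiers, not Chihara's modal content.

```python
from typing import Callable

# Level-0: a hypothetical toy stock of ordinary objects.
DOMAIN = ["Mervyn Peake", "Thom", "Jonny"]

def writer_of_gormenghast(x: str) -> bool:
    """Level-1 open sentence 'x is the writer of Gormenghast'."""
    return x == "Mervyn Peake"

def is_a_unicorn(x: str) -> bool:
    """Level-1 open sentence with no satisfier in DOMAIN."""
    return False

def has_a_satisfier(f: Callable[[str], bool]) -> bool:
    """Level-2 open sentence 'There is at least one object that satisfies F'."""
    return any(f(obj) for obj in DOMAIN)

print(writer_of_gormenghast("Mervyn Peake"))   # True: satisfied by an ordinary object
print(has_a_satisfier(writer_of_gormenghast))  # True: satisfied by a level-1 open sentence
print(has_a_satisfier(is_a_unicorn))           # False
```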

For a sentence to be constructible is just for it to be possible to construct. The sort of possibility at play here is not practical possibility; no particular person need be capable of constructing the relevant sentences. What Chihara has in mind is metaphysical possibility, which is sometimes (somewhat misleadingly) called “broadly logical possibility”. This is absolute possibility concerning how the world could have been. (Chihara sometimes also talks in terms of “conceptual possibility”, although conceptual and metaphysical possibility are not generally thought by philosophers to be equivalent.) Notice that the constructibility quantifiers are not epistemic in any way. That an open sentence ϕ is constructible does not mean that we know how to construct it, or even that it is possible in principle to know how it can be constructed. Similarly, it need not be the case that it is in principle knowable which objects or open sentences would satisfy ϕ.

After this sketch of STT and Ct, it is quite easy to see, in a general way, how Chihara’s strategy works. For every claim in STT about the existence of particular sets there corresponds a claim in Ct about the constructibility of open sentences and what satisfies them. Where STT says, for example, “There is a level-1 set x such that no level-0 object is in x”, Ct can say “It is possible to construct a level-1 open sentence x such that no level-0 object would satisfy x”. The set-theoretic membership relation “∈” is replaced by the satisfaction relation between objects and open sentences (or open sentences and other open sentences), and assertions about sets are replaced by assertions about the constructibility of open sentences. In this way, Chihara creates a branch of mathematics that does not require reference to or quantification over abstract objects. Everything one can do with STT one can do with Ct. STT is a foundational branch of mathematics, which is to say that other branches of mathematics can be reconstructed in it. Plausibly, then, STT is sufficient for any applications of mathematics that might arise in the sciences. Since Ct is a modalized version of STT, Ct is plausibly itself sufficient for any application of mathematics that might arise in the sciences. According to Chihara, (Indispensability) is therefore false.

ii. Constructibility Theory and Standard Type Theory

Chihara thinks of Ct as a modal version of STT, but Stewart Shapiro (1993, 1997) has claimed that Ct is in fact equivalent to STT and so could have no epistemological (or other) advantage over STT. In defense of this, Shapiro provides a recipe for transforming sentences of Ct into sentences of STT: first, replace all the variables of Ct that range over level-n open sentences with variables of STT that range over level-n sets; second, replace the symbol for satisfaction with the “∈” symbol for set membership; third, replace the constructibility quantifiers “Cx” and “Ax” with the quantifiers of predicate logic “∃x” and “∀x”. Each step can be run in reverse, so every sentence of STT also has a Ct counterpart; call a sentence of STT “ϕ” and its Ct counterpart “tr(ϕ)”. Shapiro shows that ϕ is a theorem of (that is, provable in) STT if and only if tr(ϕ) is a theorem of Ct, and that ϕ is true according to STT if and only if tr(ϕ) is true according to Ct. That sentences of Ct can be transformed in this way into sentences of STT, and that these transformations preserve theoremhood and truth, show, Shapiro claims, that the two systems are definitionally equivalent: Ct is a mere “notational variant” of STT.
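Shapiro's recipe is a purely syntactic substitution, which is part of why it preserves theoremhood. A minimal sketch, assuming a hypothetical tuple encoding of formulas (not Shapiro's or Chihara's own notation) and an assumed atom name `"sat"` for satisfaction: `tr` swaps ∃/∀ for C/A on variables of level 1 or higher (level-0 variables range over ordinary objects in both theories) and ∈ for satisfaction, and `tr_inverse` runs Shapiro's direction back from Ct to STT.

```python
# Hypothetical encoding of formulas as nested tuples:
#   ("exists" | "forall", var, level, body)  STT quantifiers
#   ("C" | "A", var, level, body)            Ct constructibility quantifiers
#   ("in", x, y)    STT: x ∈ y
#   ("sat", x, y)   Ct: x satisfies the open sentence y
#   ("not", p), ("and", p, q), ("or", p, q), ("implies", p, q)
QUANTIFIERS = {"exists", "forall", "C", "A"}
CONNECTIVES = {"not", "and", "or", "implies"}
STT_TO_CT = {"exists": "C", "forall": "A", "in": "sat"}
CT_TO_STT = {ct: stt for stt, ct in STT_TO_CT.items()}

def _swap(formula, table):
    head = formula[0]
    if head in QUANTIFIERS:
        _, var, level, body = formula
        # Level-0 variables range over ordinary objects in both theories,
        # so only quantifiers over level 1 or higher are swapped.
        new_head = table.get(head, head) if level >= 1 else head
        return (new_head, var, level, _swap(body, table))
    if head in ("in", "sat"):
        _, x, y = formula
        return (table.get(head, head), x, y)
    if head in CONNECTIVES:
        return (head,) + tuple(_swap(arg, table) for arg in formula[1:])
    raise ValueError(f"unsupported formula: {formula!r}")

def tr(phi):
    """Map an STT sentence to its Ct counterpart."""
    return _swap(phi, STT_TO_CT)

def tr_inverse(psi):
    """Shapiro's direction: map a Ct sentence back to STT."""
    return _swap(psi, CT_TO_STT)

# "There is a level-1 set x such that no level-0 object is in x"
phi = ("exists", "x", 1, ("not", ("exists", "y", 0, ("in", "y", "x"))))
print(tr(phi))
# ('C', 'x', 1, ('not', ('exists', 'y', 0, ('sat', 'y', 'x'))))
```

The round trip `tr_inverse(tr(phi)) == phi` holds because the substitution is one-to-one. The sketch only captures the shared syntax; whether the paired sentences share truth conditions or grounds for belief is exactly what Chihara disputes.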

Chihara (2004) responds by noting that the ability to translate sentences of STT into Ct does not show that they are equivalent in a way that undermines his project. Though the ability to translate between the two theories shows that the sentences of STT and Ct share certain mathematically significant relationships, it does not show that these sentences have the same meaning, are true under the same circumstances, or are knowable or justifiably believed under the same circumstances. Sentences of STT entail the existence of sets and are true only if sets exist, whereas sentences of Ct neither entail nor require the existence of sets. Additionally, the two theories are confirmed in different ways. The Ct sentence “It is possible to construct an open sentence of level-1 that is not satisfied by any object” is supported by laws of modal logic, considerations about what is possible, coherent, and so on. The STT counterpart sentence “There exists a set of level-1 of which nothing is a member” is not supported by those considerations.

iii. The Role of Possible World Semantics

Another objection arises from the fact that Chihara (1990) uses possible world semantics to spell out, in a precise way, the logic of “Cx” and “Ax”. Possible world semantics is an extension of the model-theoretic semantics sketched earlier. Roughly speaking, instead of a single domain containing objects and sets of objects, possible world semantics makes use of possible worlds, each with its own domain (at least in variable domain semantics). The basic, extensional model-theoretic semantics sketched before has an interpretation function mapping non-logical terms of the language to their extension: names are mapped to individuals of the (single) domain and predicates to sets of individuals in the (single) domain. Possible world semantics has an interpretation function mapping names and predicates to intensions. For names, intensions are functions mapping worlds to individuals in that world’s domain. For predicates, intensions are functions mapping worlds to subsets of that world’s domain. Intuitively, an intension tells us what individual (if any) a name picks out at any given possible world, and what set of objects a predicate applies to at any given possible world. For example, the intension associated with “… is red” would map each world to the (possibly empty) set of red things in that world’s domain. Part of the philosophical interest of possible world semantics is that it allows one to characterize a logic (in fact a range of logics) for possibility and necessity operators. Intuitively, for some claim “ϕ”, the claim “Possibly ϕ” is true if and only if “ϕ” is true in at least one possible world, and the claim “Necessarily ϕ” is true if and only if “ϕ” is true in all possible worlds.
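The clauses just given can be made concrete with a toy model. A minimal sketch under loud assumptions: the three worlds, their domains, the intension `RED` for "… is red" and the intension `A_NAME` for a hypothetical name "a" are all invented for illustration, and, following the simplified truth conditions in the text, "possibly" and "necessarily" quantify over every world (no accessibility relation).

```python
from typing import Callable, Dict, Optional, Set

World = str

# Hypothetical toy model with variable domains: each world has its own domain.
DOMAINS: Dict[World, Set[str]] = {
    "w1": {"apple", "pillar_box"},
    "w2": {"apple"},
    "w3": {"grass"},
}

# Intension of the predicate "... is red": world -> subset of that world's domain.
RED: Dict[World, Set[str]] = {"w1": {"apple", "pillar_box"}, "w2": set(), "w3": set()}

# Intension of the name "a": world -> individual, or None where it picks out nothing.
A_NAME: Dict[World, Optional[str]] = {"w1": "apple", "w2": "apple", "w3": None}

def possibly(phi: Callable[[World], bool]) -> bool:
    """'Possibly ϕ' is true iff ϕ is true at at least one world."""
    return any(phi(w) for w in DOMAINS)

def necessarily(phi: Callable[[World], bool]) -> bool:
    """'Necessarily ϕ' is true iff ϕ is true at every world."""
    return all(phi(w) for w in DOMAINS)

def a_is_red(w: World) -> bool:
    """'a is red' at w: a exists at w and falls in the extension of 'is red' there."""
    return A_NAME[w] is not None and A_NAME[w] in RED[w]

print(possibly(a_is_red), necessarily(a_is_red))  # True False
```

The whole apparatus is built from sets and functions (here, dictionaries), which is the feature the objection discussed next turns on.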

Possible world semantics itself, then, is a mathematical theory, quantifying over sets and functions. (Instead of modelling physical systems, it models meanings.) It might therefore be asked whether it is legitimate to engage in such possible worlds talk without believing in the mathematical objects it quantifies over, or whether this would be an instance of intellectual doublethink. Shapiro (1997) claims that possible world semantics is not available to the nominalist since it not only quantifies over abstract objects but is used in explanations. If possible world semantics is just a myth, then its falsehood precludes it from explaining anything, just as a story about Zeus (assuming his non-existence) cannot explain facts about the weather. Chihara (2004) responds by drawing a distinction between scientific explanations of natural phenomena and explications of ideas and concepts. The role of possible world semantics in Ct is not akin to a scientific explanation of an event, but to an explication of a concept. Possible world semantics is used to spell out how to make inferences using constructibility quantifiers. Put more picturesquely, it shows one how to reason with the constructibility quantifiers in broadly the same way that an allegorical tale, such as Animal Farm, shows one how to reason about totalitarian government (though the latter does so in a less rigorous but more open-ended way than the former). Just as a novel is capable of doing this without the things depicted in it really existing, so, too, possible world semantics is capable of doing this without possible worlds really existing.

None of the prominent objections to Chihara’s brand of reconstructive nominalism are decisive. Although the view has received comparatively little attention in the literature, it remains a live option for the nominalist who denies indispensability.

b. Field

Field’s reconstructive project has been utterly dominant in the literature on reconstructive nominalism since the publication of Field’s short but remarkable monograph Science Without Numbers in 1980, even if Field himself has said little about his project in print since the early 1990s. Field takes there to be no mathematical objects, but also holds that the truth of mathematical sentences requires the existence of mathematical objects. For Field, then, standard mathematical theories are (strictly speaking) false (see the introduction to Field 1989). Mathematized science, however, uses mathematical models, equations, and so on to represent concrete systems. As a reconstructive nominalist, Field aims to show, firstly, that the best scientific theories can be restated in a way that avoids using mathematics. Here is a point of contrast with Chihara. Whereas Chihara claims not that mathematics per se is dispensable to science, but only the sort of mathematics that quantifies over abstract objects, Field’s project is to formulate scientific theories that do not make use of mathematics of any sort. Field also wants to establish that mathematical language is dispensable in principle: there is no context in which science needs mathematics to do something that could not be done without it. To this end, the second goal of his project is to show that adding mathematical claims to claims about the concrete world does not allow us to infer anything about the concrete world that claims about the concrete world would not allow us to infer on their own.

i. Field’s Program

Field calls the process of removing reference to and quantification over mathematical objects “nominalization”. Field does not nominalize all of contemporary science (the task would be colossal) but one important theory: Newtonian Gravitational Theory (NGT). His hope was that doing so would show that a complete nominalization of science is, at least in principle, achievable.

Some mixed claims, expressing relationships between concrete and abstract objects, are easy to reformulate in a purely nominalistic way. “There are exactly two remaining Beatles” can be parsed:

∃x∃y(Bx∧By∧x≠y)∧∀x∀y∀z(Bx∧By∧Bz→x=y∨y=z∨x=z)

Where “Bx” means “x is a remaining Beatle”. The best scientific theories, however, go far beyond claims about how many of a particular kind of object there are, so the means of nominalizing these theories will be more complex. As a result, the details of Field’s project are highly technical. A non-technical overview of its general contours, however, can be given.
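Because the formula uses only quantifiers, identity and the predicate B, its truth conditions can be checked mechanically on small finite domains. A brute-force sketch, assuming finite domains whose elements are the integers 0 to n−1 (an illustration of what the formula says, not part of Field's apparatus): for every domain of up to five objects and every possible extension of B, the formula should hold exactly when B has two members.

```python
from itertools import combinations, product

def exactly_two(domain, B):
    """Evaluate ∃x∃y(Bx∧By∧x≠y) ∧ ∀x∀y∀z(Bx∧By∧Bz → x=y∨y=z∨x=z) on a finite domain."""
    at_least_two = any(
        x in B and y in B and x != y for x, y in product(domain, repeat=2)
    )
    at_most_two = all(
        not (x in B and y in B and z in B) or x == y or y == z or x == z
        for x, y, z in product(domain, repeat=3)
    )
    return at_least_two and at_most_two

def agrees_with_counting(max_size):
    """Compare the formula with 'B has exactly two members' for every extension of B."""
    return all(
        exactly_two(range(n), set(ext)) == (k == 2)
        for n in range(max_size + 1)
        for k in range(n + 1)
        for ext in combinations(range(n), k)
    )

print(agrees_with_counting(5))  # True
```

The first conjunct secures at least two Bs and the second rules out a third, so no numeral or number ever appears in the formula itself.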

NGT describes the world by numerically assigning properties such as mass, distance, and so on, to points in space-time. Space-time itself is represented with a mathematical coordinate system, and quantity claims such as “The mass of b is 5kg” are understood as meaning that there exists a mass-in-kilograms function f from a domain of concrete objects C to the real numbers ℝ such that f(b)=5. Instead of describing the concrete world by assigning it numerical values, Field’s theory (henceforth FGT) describes the concrete domain directly, using comparative language. In particular, distance claims are made using a betweenness relation “y Bet xz”, a simultaneity relation “x Simul y”, and a congruence relation “xy Cong zw”. These are primitives of the theory, but intuitively “y Bet xz” means that y is between x and z, “x Simul y” that x and y are simultaneous, and “xy Cong zw” that the distance from x to y is the same as the distance from z to w. In the same way, mass claims are expressed using mass-betweenness and mass-congruence relations. From these building blocks, Field develops a scientific theory capable of describing space-time and many of its properties without quantifying over mathematical objects.

ii. Representation Theorems

The next step is to show that FGT really is a (nominalistic) counterpart to NGT. To this end, Field proves a representation theorem. Intuitively, what Field’s representation theorem shows is that the domain of concrete things represented by FGT using comparative predicates (a space-time with mass-density and gravitational properties) has the same structural features as the abstract mathematical model of space-time with mass-density and gravitational properties given by NGT. NGT is a mathematical mirror image of FGT. In more detail, Field proves that:

there is a structure-preserving mapping ϕ from the sort of space described by FGT onto ordered quadruples of real numbers;

there is a structure-preserving mapping ρ from the mass-density properties that FGT ascribes to space-time onto an interval of non-negative real numbers;

there is a structure-preserving mapping ψ from the gravitational properties that FGT ascribes to space-time onto an interval of real numbers.

Here ϕ is unique up to a generalized Galilean transformation, ρ is unique up to a positive multiplicative transformation, and ψ is unique up to a positive linear transformation. (What this means, in essence, is that the choice of measurement scales is conventional. Different measurement scales can be used, so long as they preserve the structural features of the measurement scales they replace. Saying that something is 95.6 kilograms or saying that it is about 15.05 stones are two different ways of representing the same concrete fact about mass; no unique significance attaches to the numbers 95.6 or 15.05.)
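The point about conventional scales can be checked numerically. A sketch, assuming the exact definition 1 stone = 14 lb = 6.35029318 kg and a few invented masses (on that definition, 95.6 kg comes to about 15.05 stones): a positive multiplicative rescaling, the kind of freedom the text attributes to ρ, preserves both order and ratios, whereas a positive linear rescaling x ↦ ax + b with a > 0, the kind attributed to ψ, preserves order and ratios of differences but not ratios themselves. The coefficients of `positive_linear` are arbitrary choices.

```python
import math

KG_PER_STONE = 6.35029318  # 1 stone = 14 lb = 14 × 0.45359237 kg (exact by definition)

def kg_to_stone(kg):
    """A positive multiplicative transformation x ↦ x / 6.35029318."""
    return kg / KG_PER_STONE

def positive_linear(x, a=2.0, b=-7.0):
    """A positive linear transformation x ↦ a·x + b with a > 0 (a and b chosen arbitrarily)."""
    return a * x + b

masses_kg = [95.6, 70.0, 12.5, 3.2]  # invented sample values
stones = [kg_to_stone(m) for m in masses_kg]
shifted = [positive_linear(m) for m in masses_kg]

def order(values):
    """Indices sorted by value: the purely comparative information a scale encodes."""
    return sorted(range(len(values)), key=values.__getitem__)

def ratio(v, i, j):
    return v[i] / v[j]

def difference_ratio(v):
    return (v[0] - v[1]) / (v[2] - v[3])

print(round(kg_to_stone(95.6), 2))  # 15.05
print(order(masses_kg) == order(stones) == order(shifted))  # True
print(math.isclose(ratio(masses_kg, 0, 1), ratio(stones, 0, 1)))   # True
print(math.isclose(ratio(masses_kg, 0, 1), ratio(shifted, 0, 1)))  # False
print(math.isclose(difference_ratio(masses_kg), difference_ratio(shifted)))  # True
```

What survives every permitted rescaling is the structure the representation theorem tracks; the particular numbers do not.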

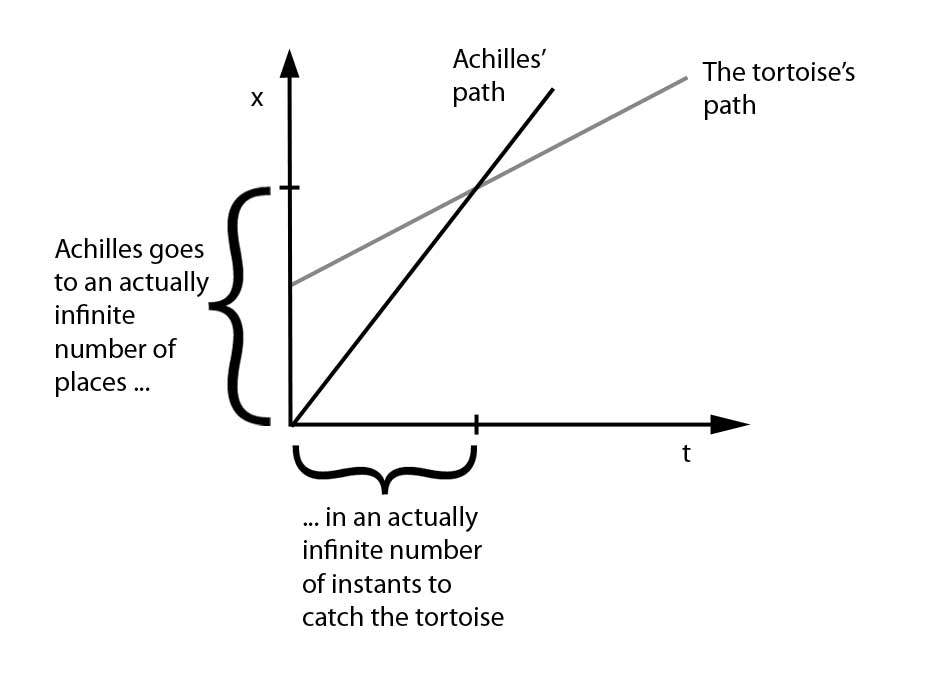

The representation theorem explains the utility of false mathematical theories: if the abstract mathematical model they describe has the same structure as the concrete world (described by true nominalistic theories), then reasoning about the abstract mathematical model will not lead us astray when making inferences about the concrete world. The following picture of nominalistic physics and its relation to scientific practice emerges: Nominalistic claims N₁ … Nₙ have abstract counterparts N*₁ … N*ₙ which use mathematical methods to describe the same physical world described by N₁ … Nₙ. One can ascend from N₁ … Nₙ to N*₁ … N*ₙ, carry out derivations within the mathematical theory to arrive at some mathematized conclusion A*, and then descend to its nominalistic counterpart A. Mathematics facilitates inferences about the physical world, but these inferences could, according to Field, be made without mathematics, albeit more laboriously.

iii. Conservativeness

Having developed a nominalistic physics and proved a representation theorem, Field also needs to show that mathematics is truly dispensable to physics, at least in principle. This involves showing that there are no claims about the physical world that follow from nominalistic theories plus mathematics but not from nominalistic theories alone; in the jargon, it involves showing that mathematics is conservative over purely nominalistic theories. Conservativeness matters to Field’s kind of reconstructive nominalism for two reasons. First, if the mathematics we apply is not conservative, then there are things that can be said about the physical world with mathematics that could not be said without it, so mathematics is not dispensable after all. Second, conservativeness provides part of the explanation of why we manage to use (false) platonistic theories so successfully: they have the same consequences about the concrete world as the true, nominalistic theories underlying them. Field informally describes conservativeness in the following way:

A mathematical theory S is conservative [if and only if] for any nominalistic assertion A, and any body N of such assertions, A isn’t a consequence of N + S unless A is a consequence of N alone. (Field 2016, 16)

Some clarifications are in order. The first is that Field does not have in mind pure mathematical theories, that is, mathematical theories whose quantifiers range only over mathematical objects. These theories are more or less trivially conservative: as they do not say anything about the physical world, they do not entail anything about the physical world (unless they are inconsistent, since, in classical logic, a contradiction entails all sentences). The second is that Field does not have in mind physical theories that use mathematics. These are more or less trivially nonconservative: it is their job to say substantive things about the physical world. If, for instance, N is some meagre body of nominalistic assertions, and S is Newtonian physics, then N + S will clearly enough have consequences that N does not. Instead, Field has in mind impure mathematical theories. Impure set theory, for instance, posits not only the existence of pure sets but also, conditionally, the existence of sets of physical objects: roughly speaking, for any physical object or objects, there exists a set containing that object or objects. More specifically, consider ZFC (Zermelo-Fraenkel set theory with the axiom of choice), a theory of pure mathematics within which all core mathematics can be modelled. ZFC can be made into an applied, impure theory by adding supplementary axioms. In particular:

- a comprehension scheme, allowing definable kinds of concrete objects to form sets;

- a replacement scheme, saying that if a function from a set of concrete objects to other objects can be defined, then those latter objects also form a set. (Note that this allows one to formulate the kinds of quantity claims mentioned earlier.)

Call the theory obtained from ZFC by adding these supplementary axioms ZFCV(N). Field argues that good mathematics should be conservative; mathematics, on its own, ought not to impose constraints on the way the concrete world is. Were it to be discovered that it did, that would be a reason to consider it in need of revision:

[I]f it were to be discovered that standard mathematics implied that there are at least 10⁶ non-mathematical objects in the universe, or that the Paris Commune was defeated […] all but the most unregenerate rationalists would take this as showing that standard mathematics needed revision. Good mathematics is conservative; a discovery that accepted mathematics isn’t conservative would be a discovery that it isn’t good. (Field 1980, 13)

In addition to this, Field proves an important conservativeness result. An equivalent way of describing conservativeness is to say that a mathematical theory S is conservative if and only if for any consistent body N of nominalistic assertions, N + S is also consistent (Field 2016, 17). Field’s proof, in essence, gives a procedure that, beginning with a nominalistic theory N assumed to be consistent (that is, satisfied by a domain D of non-sets), shows one how to construct a domain that satisfies both N and ZFCV(N) (that is, shows that N and ZFCV(N) are jointly consistent). If one is able to construct a nominalistic counterpart theory such as FGT, conservativeness ensures that adding ZFCV(N) to that nominalistic theory will not entail any purely nominalistic claims that are not entailed by the nominalistic theory alone.
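The consistency-based characterization can be illustrated in a drastically simplified, propositional setting. This is a toy under explicit assumptions, not Field's first-order proof about ZFCV(N): the "nominalistic" vocabulary is two hypothetical sentence letters p and q, the "mathematical" vocabulary is one hypothetical letter m, and a theory S is a Python predicate on truth-value assignments. In this setting S is conservative exactly when every assignment to p and q extends to one satisfying S: if some assignment cannot be extended, the conjunction of its literals is a consistent nominalistic body N that becomes inconsistent once S is added.

```python
from itertools import product

NOMINALISTIC = ("p", "q")  # hypothetical sentence letters about the concrete world
MATHEMATICAL = ("m",)      # hypothetical sentence letter of the mathematical theory

def assignments(letters):
    """All truth-value assignments to the given sentence letters."""
    for values in product([False, True], repeat=len(letters)):
        yield dict(zip(letters, values))

def is_conservative(S):
    """S is conservative iff every nominalistic assignment extends to a model of S."""
    return all(
        any(S({**v, **w}) for w in assignments(MATHEMATICAL))
        for v in assignments(NOMINALISTIC)
    )

def s_harmless(a):
    """m → p: mentions the concrete world but settles nothing about it on its own."""
    return (not a["m"]) or a["p"]

def s_meddling(a):
    """m ∧ (m → p): entails the nominalistic claim p all by itself."""
    return a["m"] and ((not a["m"]) or a["p"])

print(is_conservative(s_harmless))  # True
print(is_conservative(s_meddling))  # False
```

`s_meddling` plays the role of mathematics that "implied that the Paris Commune was defeated": on its own it settles a claim about the concrete world, so adding it to the consistent body "not p" yields inconsistency.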

iv. The Prospects of Field’s Project

Field provides a nominalistic reconstruction of one important theory of physics, but some philosophers have questioned whether his project could be extended to other theories, such as General Relativity and quantum mechanics (QM). NGT uses mathematics to represent facts about concrete objects which FGT represents more directly. QM works differently. The mathematical formalism of QM is sometimes used to represent probabilities of measurement events, and a probability is not a concrete object. Even if QM could be reformulated to avoid reference to mathematical objects, it would remain a theory about probabilities, which is to say, a theory that talks about entities the nominalist does not take to exist. Balaguer (1996, 1998) has suggested that the Fieldian nominalist could take these probabilities to represent the propensities of the concrete systems they model. However, Balaguer admits that even if the details of any such nominalization were worked out, this would not provide a means of nominalizing phase space theories. Phase space theories use vectors to represent possible states of a concrete system. A Fieldian reconstruction of a phase space theory which avoids quantification over vectors would still quantify over possible states of concrete systems (Malament 1982), and these abstract objects could not be taken to represent propensities of concrete systems in the way that, plausibly, probabilities do.

A number of commentators have taken these considerations to license pessimism about the prospects of nominalizing the best contemporary scientific theories, but the Fieldian nominalist could contest this conclusion. In the first place, one can question the inference from the fact that mathematical language has not yet been dispensed with in the best scientific theories to the modal conclusion that mathematical language is indispensable to them. One would not similarly conclude that, because Goldbach’s conjecture has not yet been proven, it is unprovable. In the second place, progress has been made since Science Without Numbers was first published in 1980. For instance, Arntzenius and Dorr (2012) take on the task of nominalizing general relativity, which uses differential equations to describe the behavior of fields and particles in curved space-time and vector bundles. They express confidence that, given an interpretation of what concrete facts the mathematical formalism of QM represents, nominalizing strategies could be extended to QM as well.

v. Conservativeness Again

Field’s conservativeness claim has been criticized on a number of grounds. One is that semantic accounts of logical consequence quantify over sets. According to these accounts, a theory is logically possible just in case it has a model in the set-theoretic sense sketched above, and a claim ϕ is a consequence of a theory Γ if and only if there is no model of Γ together with ¬ϕ. Logical consequence, it is sometimes argued, can therefore only be understood if one posits the existence of sets. Field (1989, chapter 3; 1991) has a response: he takes logical possibility to be a primitive notion, not ultimately to be explained in terms of the existence of certain sets. There are considerations in favor of this. Explaining modal facts in terms of set-theoretic ones may get things backwards. As Leng (2007) argues, one ought to explain the fact that there exists no set of all sets on the grounds that there could not exist a set of all sets, rather than to explain why there could not exist a set of all sets on the grounds that there is no set of all sets.

Another objection concerns the scope of Field’s conservativeness proof. Joseph Melia (2006) points out that although Field provides a proof of the conservativeness of ZFCV(N), he does not provide an argument that all useful applied mathematics can be carried out in ZFCV(N). Unless the Fieldian nominalist provides reasons to believe that this is the case, it is hard to assess the significance of Field’s proof, as it is hard to assess whether future applications of mathematics will be carried out in ZFCV(N).

vi. The Best Theory?

Mark Colyvan argues that talking of mathematical objects is not dispensable to the best scientific theories because nominalistic versions of those theories will be worse than their mathematical counterparts. Good theories must be both internally consistent and consistent with observations, but there are additional theoretical virtues that must be taken into consideration. Colyvan (2001) lists the following:

- Simplicity / Parsimony: Given two theories with the same empirical consequences, we should prefer the theory that is simpler to state, and which has simpler ontological commitments;

- Unificatory / Explanatory Power: We should prefer theories that predict the maximum number of observable consequences with the minimum number of theoretical devices;

- Boldness / Fruitfulness: We should prefer theories that make bold predictions of novel phenomena over those that only account for familiar phenomena;

- Formal Elegance: We should prefer theories that are, in a hard-to-define way, more beautiful than other theories.

Colyvan contends that mathematical theories are often more virtuous than nominalistic ones. (See the section Unification, Explanation and Confirmation in the article on The Applicability of Mathematics and the section The Explanatory Indispensability Argument in the article on The Indispensability Argument for examples of the unificatory and explanatory power of mathematics.)

Field, however, takes nominalistic theories to have greater explanatory power in some respects. They provide intrinsic explanations of physical phenomena, rather than appealing to extrinsic mathematical facts, and they eliminate the arbitrariness in the choice of units of measurement that accompanies mathematical theories (Field 1980; 1989, chapter 6). It is open to the Fieldian nominalist to argue that the theoretical virtues of her theories are somehow better or more fundamental than those enjoyed by mathematical theories. Since there is no agreed-upon metric for measuring theoretical virtues, nor agreement over their epistemic significance (are they really indicators of truth or mere pragmatic expediencies?), reaching a resolution in this area might not be easy.

6. Deflationary Nominalism

At the beginning of the twenty-first century, attempts at reconstructive nominalism ebbed and many nominalists came to accept (Indispensability) or to see it as somehow orthogonal to ontological questions. As the indispensability argument is valid, this requires rejecting either (Realism) or (Quine’s Criterion). This section explores the latter option.

A number of philosophers have questioned the semantic assumptions driving (Quine’s Criterion). Many natural language sentences employ apparent reference or quantification even when commitment to the existence of the things apparently referred to or quantified over would be, for various reasons, implausible:

- There is a better way than this.

- His lack of insight was astounding.

- There are many similarities between Sellars and Brandom.

- There is a chance we will make it in time.

- I have a beef with the current administration.

- The view from my office is wonderful.

- She did it for your sake.

If one takes the semantic argument discussed earlier seriously, then, in holding these sentences to be true, one would be committed to the existence of ways, lacks, similarities, chances, beefs, views and sakes. Many, however, take it that positing the existence of objects such as these is bizarre, since they do not seem to be part of the “furniture of the universe”. One response, then, is to deny that “there is” always expresses existence, in an ontologically significant sense.

a. Azzouni

Jody Azzouni has been the most prominent defender of this approach to nominalism. Azzouni’s view (2004, 2007, 2010a, 2010b, 2017) is that both quantifiers and the term “exists” are neutral between ontologically committing and non-ontologically committing uses. Context decides whether they are being used in ontological or non-ontological ways. “God exists” uttered in a discussion between an atheist and a theist would (usually) express ontological commitment, but “Important similarities between Sellars and Brandom really do exist” would not (usually) be intended to express ontological commitment to similarities.

i. Quantifier Commitments and Ontological Commitments

Azzouni, then, draws a distinction between mere quantifier commitments and existential commitments (here understood in an ontologically significant sense); not all quantifier commitments are existential commitments. He replaces Quine’s semantic criterion for what a discourse is committed to with a metaphysical criterion for what exists. Something exists, according to Azzouni, if and only if it is mind- and language-independent. This requires both rejecting the Quinean criterion and motivating the metaphysical one. With respect to the latter, Azzouni does not give a metaphysical argument for the criterion but appeals instead to the de facto practices of people in general. One should adopt mind- and language-independence as a criterion for what exists because of the sociological fact that the community of speakers takes ontologically dependent items not to exist.

Given this criterion for existence, the heart of Azzouni’s account consists in spelling out the implications of mind- and language-independence for our knowledge-gathering behavior. For instance, language- and mind-independent objects cannot be stipulated into existence. Inventing a fictional character, on the other hand, involves nothing more than thinking of her. Fictional entities are paradigmatically mind-dependent and hence non-existent. This is to be contrasted with the way we form beliefs about mind-independent objects. In particular, our epistemic access to mind-independent posits possesses the following salient features: robustness, refinement, monitoring and grounding:

- Robustness: Epistemic access to a posit is robust if results about that posit are independent of our expectations about it. For example, Newtonian mechanics (in conjunction with some auxiliary assumptions) predicted that the planet Uranus would follow a particular orbit, which observation revealed it not to follow.

- Refinement: Epistemic access to a posit exhibits refinement when there are means by which to adjust or refine access to that posit. For example, more powerful telescopes allow improved access to distant parts of the observable universe.

- Monitoring: Epistemic access to a posit involves monitoring when what the posit does through time can be tracked or when different aspects of the posit can be explored. For example, C.T.R. Wilson’s experiments appeared to reveal the trajectories of charged particles by their observable effects on water vapor.

- Grounding: Epistemic access to a posit exhibits grounding when properties of the posit itself explain why we can discover what properties the posit has. For example, the fact that stars emit light explains why they are visible to the naked eye at night.

If the way in which we establish truths about a posit does not fit with the way we establish truths about mind- and language-independent posits, then we are treating, in practice, this posit as mind- and language-dependent. Given Azzouni’s criterion for existence, this amounts to treating this posit as non-existent. The leading idea here is that an examination of scientific practice shows that we treat concrete posits—observables, but also theoretical posits such as subatomic particles—as mind- and language-independent but treat mathematical objects as mind- and language-dependent.

When robustness, refinement, monitoring, and grounding are active in deciding whether a posit exists and what features it has, one has what Azzouni calls “thick epistemic access” to it. Not all scientific posits which Azzouni takes to exist enjoy thick epistemic access, however. To take an example: because the expansion of the universe is accelerating, there are parts of the universe so distant from the Earth that no information from them will ever reach observers on Earth; these regions lie outside our past light cone. Accepted cosmology posits the existence of galaxies, stars, nebulae, and suchlike outside our past light cone, yet concrete entities in these regions of the universe clearly fail to exhibit, at the very least, monitoring and grounding. Azzouni calls the sort of access we have to entities such as these “thin epistemic access”. In Azzouni’s developed view, thin posits are “the items we commit ourselves to on the basis of our theories about what the things we thickly access are like” (Azzouni 2012, 963). Moreover, thin posits require “excuse clauses”: explanations, stemming from the scientific theories that describe them, of why we fail to have thick access to them. In the case of galaxies outside our past light cone, we have thick access to things inside our past light cone, and widely accepted cosmological theories about the features of the posits in the early universe, in conjunction with natural laws, entail the existence of galaxies outside our light cone. Hence, theories about the things we thickly access commit us to the existence of galaxies which we cannot thickly access. They also provide the needed excuse clause: special relativity does not allow entities to travel through space faster than the speed of light.

ii. The Coherence of Denying Quine’s Criterion