The Golden Rule

The most familiar version of the Golden Rule says, “Do unto others as you would have them do unto you.” Moral philosophy has barely taken notice of the golden rule in its own terms despite the rule’s prominence in commonsense ethics. This article approaches the rule, therefore, through the rubric of building its philosophy, or clearing a path for such construction. The approach reworks common belief rather than elaborating an abstracted conception of the rule’s logic. Working “bottom-up” in this way builds on social experience with the rule and allows us to clear up its long-standing misinterpretations. With those misconceptions go many of the rule’s criticisms.

The article notes the rule’s highly circumscribed social scope in the cultures of its origin and its role in framing psychological outlooks toward others, not directing behavior. This emphasis eases the rule’s “burdens of obligation,” which are already more manageable than expected in the rule’s primary role, socializing children. The rule is distinguished from highly supererogatory rationales commonly confused with it—loving thy neighbor as thyself, turning the other cheek, and aiding the poor, homeless and afflicted. Like agape or unconditional love, these precepts demand much more altruism of us, and are much more liable to utopianism. The golden rule urges more feasible other-directedness and egalitarianism in our outlook.

A raft of additional rationales is offered to challenge the rule’s reputation as overly idealistic and infeasible in daily life. While highlighting the golden rule’s psychological functions, doubt is cast on the rule’s need for empathy and cognitive role-taking. The rule can be followed through adherence to social reciprocity conventions and their approved norms. These may provide a better guide to its practice than the personal exercise of its empathic perspective. This seems true even in novel situations for which these cultural norms can be extrapolated. Here the golden rule also can function as a procedural standard for judging the moral legitimacy of certain conventions.

Philosophy’s two prominent analyses of the golden rule are credited, along with the prospects for assimilating such a rule of thumb to a universal principle in general theory. The failures of this generalizing approach to preserve the rule’s distinct contours are detailed, however. The pivotal role of conceptual reductionism in mainstream ethical theory is discussed, noting that other forms of theorizing are possible and are more fit to rules of thumb. Circumscribed, interpersonal rationales like the golden rule need not be viewed philosophically as simply yet-to-be generalized societal principles. Instead, the golden rule and its related rationales-of-scale may need more piecemeal analyses, perhaps know-how models of theory, integrating algorithms and problem-solving procedures that preserve the specialized roles and scope. Neither mainstream explanatory theory, hybrid theory, nor applied ethics currently focuses on such modeling. Consequently, the faults in golden-rule thinking, as represented in general principles, may say less about inherent flaws in the rule’s logic than about shortfalls in theory building.

Finally, a radically different perspective is posed, depicting the golden rule as a description, not prescription, that portrays the symptoms of certain epiphanies and personal transformations observed in spiritual experience.

Table of Contents

- Common Observations and Tradition

- What Achilles Heel?

- Sibling Rules and Associated Principles

- Golden Role-Taking and Empathy

- The Rule of Love: Agape and Unconditionality

- Philosophical Slight

- Sticking Points

- Ethical Reductionism

- Ill-Fitting Theory (Over-Generalizing Rules of Thumb)

- Know-How Theory (And Medium-Sized Rationales)

- Regressive Default (Is Ancient Wisdom Out-Dated?)

- When is a Rule Not a Rule, but a Description?

- References and Further Reading

1. Common Observations and Tradition

“Do unto others as you would have them do unto you.” This seems the most familiar version of the golden rule, highlighting its helpful and proactive gold standard. Its corollary, the so-called “silver rule,” focuses on restraint and non-harm: “do nothing to others you would not have done to you.” There is a certain legalism in the way the “do not” corollary follows its proactive “do unto” partner, in both Western and Eastern scriptural traditions. The rule’s benevolent spirit seems protected here from being used to mask unsavory intents and projects that could be hidden beneath. (It is sobering to encounter the same positive-negative distinction, so recently introduced to handle modern moral dilemmas like abortion, thriving in 500 B.C.E.)

The golden rule is closely associated with Christian ethics, though its origins go further back and it graces Asian culture as well. Normally we interpret the golden rule as telling us how to act. But in practice its greater role may be psychological, alerting us to everyday self-absorption, and the failure to consider our impacts on others. The rule reminds us also that we are peers to others who deserve comparable consideration. It suggests a general orientation toward others, an outlook for seeing our relations with them. At the least, we should not impact others negatively, treating their interests as secondary.

This is a strongly egalitarian message. When first conveyed, in the inegalitarian social settings of ancient Hebrews, it could have been a very radical message. But it likely was not, since it appears in scripture as an obscure bit of advice among scores of rules with greater point and stricture, given far more emphasis. Most likely the rule also assumed existing peer-conventions for interacting with clan-members, neighbors, co-workers, friends and siblings. In context, the rule affirmed a sentiment like “We’re all Jews here,” or “all of sect Y.” Only when this rule was made a centerpiece of social interaction (by Jesus or Yeshua, and fellow John-the-Baptist disciples) did it become a more radical message, crossing class, clan and tribal boundaries within Judaism. Of special note is the rule’s application to outcasts and those below one’s station—the poor, lepers, Samaritans, and certain heathens (goyim). Yeshua apparently made the rule second in importance only to the First Commandment of “the Father” (Hashem). This was to love God committedly, then love thy neighbor as thyself, which raised the rule’s status greatly. It brought social inclusivity to center stage, thus shifting the focus of Jewish ethics generally. Yet the “love thy neighbor” maxim far exceeds the golden rule in its moral expectations. It stresses loving identification with others while the golden rule merely advises equal treatment.

Only when the golden rule was applied across various cultures did it become a truly revolutionary message. Its “good news,” spread by evangelists like Paul (Saul of Tarsus), fomented a consciousness-shift among early Christians, causing them actually to “love all of God’s children” equally, extending to the sharing of all goods and the acceptance of women as equals. Perhaps this was because such love and sharing radically departed from Jewish tradition and was soon replaced with standard patriarchy and private property. The rule’s socialism might have fomented social upheaval in occupied Roman territories had it actually been practiced on a significant scale, which may help explain its persecution in that empire. Most likely the golden rule was not meant for such universalism, however, and cannot feasibly function on broad scales.

The Confucian version of the golden rule faced a more rigid Chinese clan system, outdoing the Hebrews in social-class distinctions and the sense that many lives are worthless. More, Confucius himself made the golden rule an unrivaled centerpiece of his philosophy of life (The Analects, 1962). The rule, Kung-shu, came full-blown from the very lips and writings of the “morality giver” and in seemingly universal form. It played a role comparable to God’s will, in religious views, to which the concept of “heaven” or “fate” was a distant second. And Confucius explicitly depicted the “shu” component as human-heartedness, akin to compassion. Confucian followers succeeding Mencius into the neo-Confucians, however, emphasized the Kung component or ritual righteousness. They increasingly interpreted the rule within the existing network of Chinese social conventions. It was a source of cultural status quoism—to each social station, its proper portion. Eventually, what came to be called the Rule of the Measuring Square was associated with up to a thousand ritual directives for daily life encompassing etiquette, propriety and politeness within the array of traditional relationships and their strict role-obligations. The social status quo in Confucian China was anything but compassionate, especially in the broader community and political arenas of life.

In traditional culture, the “others” in “do unto others” was interpreted as “relevant others,” which made the rule much easier to follow, if far less egalitarian or inspiring. One’s true peers were identified only within one’s class, gender, or occupation, as well as one’s extended family members. Generalizing peer relations more broadly was unthinkable, apparently, and was therefore not read into the rule’s intent. Confucius spoke of searching in vain his whole life for one person who could practice Kung-shu for one single day. But clearly he meant one “man,” not person, and one “gentleman” of the highest class. This classism was a source of conflict between Confucianism and Taoism, where the lowest of the low were often depicted as spiritual exemplars.

For the golden rule to have become so pervasive across historical epochs and cultures suggests a growing suspicion of class and ethnic distinctions—challenging ethnocentrism. This trend dovetails nicely with the rule’s challenge to egocentrism at the personal level. The rule’s strong and explicit egalitarianism has the same limited capture today as it did originally, confined to distinctly religious and closed communities of very limited scope. It is unclear that devout, modern-day Jews or Christians vaunt strong equality of treatment even as an ideal to strive toward. We may speak of social outcasts in our society as comrades, and recognize members of “strange” cultures and unfriendly nations as “fellow children of God.” But we rarely place them on a par with those closer by or close to us, nor treat them especially well. Neither is it clear, to some, that doing so would be best. Instead, the rule’s original small scope and design is preserved, limited to primary groups at most.

Biblical scholars tend to see Yeshua’s message as meant for Jews per se, extending to the treatment of non-Jews yes, but as Jews should treat them. And this does not include treating them as Jews. The golden rule has a very different meaning when it is a circumscribed, in-group prescription. In this form, its application is guided by hosts of assumptions, expectations, traditions, and religious obligations, recognized like-mindedly by “the tribe.” This helps solve the ambiguity problem of how to apply the rule within different roles: parents dealing with children, supervisors with rank-and-file employees, and the like.

2. What Achilles Heel?

When considering a prominent view late in its history, its paths of development also merit analysis. How were its uses broadened or updated over time, to fit modern contexts? Arguably the Paulist extension of the rule to heathens was such a development, as was the rule’s secularization. The rule’s philosophical recasting as a universal principle qualifies most within moral theory. Just as important are ways the rule has been misconstrued and misappropriated, veering from its design function.

We must acknowledge that the golden rule is no longer taken seriously in practice or even aspiration, but merely paid lip service. The same feature that makes the golden rule gleam—its idealism—has dimmed its prospects for influence. The rule is simply too idealistic; that is its established reputation. Note that over-idealism has not discredited Kantian or Utilitarian principles, by contrast, because general theory poses conceptual objects, idealized by nature. They focus on explanation in principle, not application in the concrete. But the golden rule is to be followed, and following the golden rule requires a saintly, unselfish disposition to operate, with a utopian world to operate in. This is common belief. Cloistered monasteries and spiritual communes (Bruderhofs, Koinonia) are its hold-out domains. But even as an ideal in everyday life, the rule is confined to preaching, teaching, and window dressing. Why then make it the object of serious analysis? The following considerations challenge the rule’s blanket dismissal in practice.

First, the silver component of the golden rule merely bids that we do no harm by mistreating others—treating them the way we would not wish to be treated. There is a general moral consensus in any society on what constitutes harms and mistreatments, wrongs and injustices. So to obey this component of the golden rule is something we typically expect of each other, even without explicitly consulting a hallowed precept. Adhering specifically to the golden rule’s guidelines, then, raises no special difficulty. Its silver role is mostly educative in this context, helping us understand why we expect certain behavior from each other. “See how it feels” when folk violate expectations?

The gold in the rule asks more from us, treating people in fair, beneficial, even helpful ways. As some have it, we are to be loving toward others, even when others do not reciprocate, or in fact mistreat us. This would be asking much. But despite appearance, the golden rule does not ask it of us. Nothing about love or generosity is mentioned in the rule, nor implied, much less letting oneself be taken advantage of. Loving thy neighbor as oneself, or turning the other cheek, are distinct precepts—distinct from the golden rule and from each other. These rules are not stated or identified with the golden rationale in biblical or Confucian scripture. Nor are they illustrated together, say in the parables.

We may wish we loved everyone and that everyone loved us, but a wish is not a prescription or command—“Do unto.” And we cannot feasibly love on demand, either in our hearts or actions. (Can we learn to love others as ourselves over a lifetime?) But we can certainly consider how we need or prefer to be treated. And we can treat others that way on almost all occasions, on the spot, without needing to undergo a prior regimen of prayer, meditation, or working with the poor.

As noted, the golden rule may deal more with being other-directed and sensitive rather than proactive. Leading with the word “Do” does not necessarily signal the rule’s demand for action any more than parents saying to teenagers, “Be good,” when they go on a date. Whether they are (should be) a certain way isn’t the point. There is no need for them to engage their character and its traits, for example. The focus here is on what they do, actually, and should not do. Likewise with “Do your part” or “Don’t get in the way”: these are general directives of how to orient ourselves on certain occasions. They prime us to take certain sorts of postures, showing a readiness to cooperate or to ask others if we are being a pest, though we may not succeed even if we try. They prime us to apologize if in fact we do get in the way, but maybe not more than that.

No altruism (self-sacrifice) is needed for golden-ruling in this psychological form for adopting a certain “other-orientation” in “the spirit of” greater awareness toward others. Usually one bears no cost to engage empathetic feelings, if that is what is needed. One wonders whether an implicit sense of this merely attitudinal “spirit” of the golden rule helps account for why we do not practice it—no hypocrisy required. If so, it would allow an uplifting turnaround in our moral self-understanding and self-criticism.

Conjuring up certain outlooks or orientations is an especially feasible task when provided a golden recipe for how—by role-taking, for example, or empathy or adherence to reciprocity norms. Once our heart goes out to others, following its spontaneous pull hardly requires going the extra foot, much less a mile in effort for anyone. We simply do what we feel, as much as the pull tugs us to. The truth is that we interact largely in words, and kindly words are free. We’re often not occupied when called upon to respond to others, so that responding lushly is easy—there is no hefty competition for our time or interest. Consider the sort of “do-unto” that can make a person’s week: “I wanted to mention how much I appreciate your support during this transition time for me. It’s noticeable, and it means a lot.”

It pays moral philosophy to think the golden rule through in such actual everyday circumstances before imagining the rule’s costs in principle, or worst-case scenarios. Where school systems routinely include some degree of moral education in their curricula, the case for golden-rule feasibility in a society is even stronger. And, arguably, most children already get some such training in school and at home implicitly.

The same reduced-effort scenario holds when sizing up moral exemplarism, often associated with the golden rule, and with living its sibling principles. Ministering to the poor and ill often involves the routine work of truckers or dock workers, loading canned food or medical supplies to be hauled away, or hauling it oneself. It may involve primitive nursing or cooking, and point-of-contact service work routinely taken on as jobs by non-exemplars. These are not seen as careers in saintly heroism. Pursuing such work as a mission, not an occupation, takes significant commitment and gumption. But many exemplars report gradually falling into their roles, without really noticing or thinking clearly (David Fattah of Umoja House) or of being dragged into “the life” by others (Andrei Sakharov and Martin Luther King, for example). (See Colby and Damon 1984, Oliner and Oliner 1988, The Noetics Institute “Creative Altruist” Profiles.) More, everyday exemplars report doing their work out of an atypical outlook on society and their relation to it. This comes spontaneously to them, as ours comes to us. No additional, much less extraordinary effort is required. This seems the point of Mother Teresa’s refrain to those asking how she could possibly work with lepers and the dying, “Come see.”

If the golden rule is designed for small-group interaction, where face-to-face relations dominate, a failure to reciprocate in kind will be noticed. It cannot be hidden as in anonymous, institutionally-mediated cooperation at a distance. Subtle pressures will be felt to conform with this group norm, and subtle sanctions will apply to those who take more than they give. Conforming to norms in this setting will be easier than usual, as well, since in-groups attract the like-minded. And in such contexts requiring extraordinarily helpful motivations and actions from others would be seen as unfair.

By assessing the golden rule outside of such contexts we miss its implicit components, the network of mutual understandings, and established community practices that make its adherence feasible and comprehensible. Such considerations are also crucial in determining the adequacy of the golden rule. The shortfalls that have been identified by the rule’s detractors seemingly arise when the rule is over-generalized and set to tasks beyond its design. If its function is primarily psychological, its conceptual or theoretical faults are not key. If its design is small-scale, fit to primary relations, its danger of allowing adherents to be stepped on is not key. The rule should not be used where those around you let such mistreatment happen or cannot see it happen. And if the rule’s guidance is judged too vague to follow reliably, we should look to the myriad expectations and implicit assumptions that go with it to see if they supply needed precision and clarity.

The golden rule is not only a distinct rationale within a family of related rationales. It is a general marker, the one explicit component in networks of more implicit rationales and specific prescriptions. Teachings that abstract the rule from its implicit corollaries and situational expectations fail to capture what the rule even says. Theoretical models of the rule that further abstract the rule’s logic from its substance, content or process, likely mutilate it beyond recognition.

“How would you feel if?” puts the golden rule’s peer spirit in a mother’s teaching hands when urging her egocentric, but sensitive child to consider others. As a socializing device, the rule helps us identify our roles within a mutually respectful and cooperating community. How well it accomplishes this socializing task is another crucial mark of its adequacy, perhaps the most crucial. The prospect of first engaging this rule typically captures childhood imaginations, like acquiring many highly useful social skills (Fowler 1981, Kohlberg 1968, 1982).

Putting these considerations together allows us to identify where the golden rule may be operating unnoticed as a matter of routine—in families, friendships, classrooms and neighborhoods, and in hosts of informal organizations aiming to perform services in the community. Isn’t it in fact typical in these interactions that we treat each other reciprocally, as each other would wish, want, choose, consent or prefer?

3. Sibling Rules and Associated Principles

The foregoing appeals for feasibility are not primarily defenses of the golden rule against criticism. They are clarifications of the rule that expose misconceptions, central to its long-standing reputation. We now question, also, the much admired roles of empathy and role-taking in the golden rule, which can ease adherence to it, but are not necessary. The rule is certainly not a guideline for empathizing or role-taking process, as most believe and welcome. However, empathy can help apply the rule and the rule can provide many “teaching moments” for promoting and practicing empathy, which is advantageous. But distinguishing empathy from the rule’s function also is fortunate for the empathetically challenged among us, and those not able to see the others’ sides. Their numbers seem legion. The golden rule can be adhered to in other ways.

The golden rule is much-reputed for being the most culturally universal ethical tenet in human history. This suggests a golden link to human nature and its inherent aspirations. It recommends the rule as a unique standard for international understanding and cooperation—noble aims, much-lauded by supporters. In support of the link, golden logic and paraphrasing have been cited in tribal and industrialized societies across the globe, from time immemorial to the present. This supposedly renders the rule immune to cultural imperialism when made standard for human rights, international law, and the spreading of western democracy and education—a prospect many welcome, while others fear it. Note that if the golden rule is truly distinct from related principles such as loving thy neighbor as thyself and feeding the poor, these cherished claims for the rule are basically debunked.

Analysis of this endless stream of sightings shows no more than a family resemblance among distinct rationales (see golden rule website in references below). Some rationales deal with putting oneself in another’s place, others with viewing everyone as part of one human family, or divine family. Still others promote charity, forgiveness and love for all. Culturally, the golden rule rationale is mostly confined to certain strands of the Judeo-Christian and Chinese traditions, which are broad and lasting, at least until recently, but hardly universal (See Wattles 1966).

The golden rule’s distinctness, here, is seen relative to its origins. The original statement of the golden rule, in the Hebrew Torah, shows a rule, not an ethical principle, much less the sort of universal principle philosophers make of it. It is one of the simpler and most briefly stated dos and don’ts among long lists of particular rules in Leviticus (XIX: 10-18). These directives concern kosher eating, animal sacrifice procedures, threads that can’t be used together in woven clothing, and even the cleansing of “impurity” (such as menstruation) by bringing pigeons and doves to a rabbi for ceremonial disposition. If one blinks, or one’s mind wanders, one would miss it, its golden gleam notwithstanding. And even a devout Jew is likely to lose concentration when perusing these outdated, dubious and less than riveting observations.

No fair reading of Leviticus XIX: 18 would term its statement the golden rule, not in our modern sense, first stated in Matthew 7:12. For in Leviticus the commandment is merely not to judge an offender by his offense, and thereby hold a grudge against a fellow Jew for committing it. But love him as yourself. The latter, a crucially different principle, is meant here differently than we now interpret it as well. It perhaps can be rendered as “Remember that you offend fellow Jews also and so you are like the offender on other occasions.”

Seen amid such concrete and mutually understood practices of a small tribe, the golden rule poses no role-taking test. Any community member can comply simply by knowing which reciprocity practices are approved or frowned on. Recollecting what it was like to be on the receiving end of others’ slights or benefits also can help. But that would mean taking one’s own perspective, not another’s, in the past. Doing so is not essential to “golden-ruling” however, nor likely reliable. If a kind of imaginative role-playing is contemplated, one need only conjure up images of community elders frowning or fawning over a variety of choice options and everyday practices.

Neither in eastern nor western traditions did the golden rule shine alone. Thus viewing and analyzing it in isolation misses the point. The golden rule’s relation to its associated sibling principles altered its meaning and purpose in different settings. The most prominent standard bearer for this family of rules seems to have been, “loving thy neighbor as thyself.” This “royal law” is a very different sort of prescription from the golden rule, foreseeing a variety of extraordinarily benevolent practices born of extraordinary identification with others. In Judaism, benevolence usually meant helping family members and neighbors primarily, focusing on one’s kind—one’s particular sect. Generosity meant hospitality to the stranger or alien as well, remembering that the Jews were once strangers in a strange land. Alms were given to the poor; crops were not gleaned from the edges of one’s farm-field so that the poor might find sustenance in the remains. Farmland was to lie fallow each seventh year (like the Sabbath when God rested) so that, in part, the poor then could find rest there, and room to grow (Deuteronomy XV: 7, Leviticus XXIII: 22, XXV: 25, 35).

Turning the other cheek (Luke 6:29), loving even one’s enemy (Matthew 5:44) and not turning away when anyone asks of you (5:42)—these go well beyond normal charity or benevolence, even more than identifying with our neighbor. What neighbor would strike or steal from you, taking your cloak so that you must give him your coat also (Matthew 5:40)? Such practices are not at all required or asked of the Confucian “gentleman” whose Kung-shu practice is more about respect for elders and ancestors, and fulfilling hosts of family and community responsibilities.

With regard to Yeshua’s teachings on feeding the hungry, sheltering the homeless, or praying for those who shamefully use and abuse you, he summarily urged that followers “be perfect, even as your Father in Heaven is Perfect” (5:48). This far exceeds what the golden rule asks—simply that we consider others as comparable to us and consider our comparable impacts on them. These do not represent fair or equal reciprocity in fact. Ask how you would wish to be treated if you were a shameful abuser or even homeless person. There is sufficient testimony revealing that many abusers and homeless do not at all want to be shown charity, for example, but condemnation or punishment, in the first case, and being left alone to fend in a “street community” in the second. They feel this is what they deserve. (To abuse-counselors and homeless shelter workers, this goes without saying.) What the abusive and homeless should want, or calculate as their desert, may be something different. But golden-rule role-taking will not tell.

There is one area where the golden rule extends too far, directly into the path of a turning of the other cheek. When we are seriously taken advantage of or mistreated, the rule bids that we treat the offender well nonetheless. We are to react to unfair treatment as if it were fair treatment, ignoring the moral difference. Critics jump on this problem, as they should, because the golden rule seems designed to highlight such cases. Here is where the rule most contrasts with our typical, pre-moral reaction, while also rising above (Old Testament) justice. In the process, it promotes systematic and egregious self-victimization in the name of self-sacrifice. Yet, is self-sacrifice in the name of unfairness to be admired? Benevolence that suborns injustice, rather than adding ideals to it, seems morally questionable. Moreover, under the golden rule, both victimization and self-victimization seem endless, promoting further abuse in those who have a propensity for it. No matter how much someone takes advantage of us, we are to keep treating them well. Here the golden rule seems simply unresponsive. Its call to virtuous self-expression is fine, as is its reaction to the equal personhood of the offender. But it neither addresses the wrong being committed, nor that part of the perpetrator to be faulted and held accountable. Interpersonally, the rule calls for a bizarre response, an almost obtuse or incomprehensible one. While a “forgiving” response may be preferable to retribution, why should just desert be completely ignored? It can certainly be integrated into the high-road alternative. In this type of case, the golden rule sides with its infeasible siblings. It bids us to play the exemplar of “new covenant” morality—the morality of love for all people as people, or as children of God. And this asks too much.

These criticisms have merit, but can be mitigated. When dealing with cases of unfairness and abuse, critics assume the golden rule requires us to “take the pain” uncomplainingly. There is no such proviso in the rule. As the Gandhi-King method has shown, it is perfectly legitimate to fault the action—even condemn the action—while not condemning the person, or taking revenge. The practice of abusing or taking advantage of someone does not define its author as a person after all, even when it is habitual. The wrongs anyone commits do not eradicate his good deeds, nor his potential for reform. And the golden rule has us recognize that. But the spirit of silent self-sacrifice is found more in the sibling principles than the golden rule, and should be kept there. In the current case we can readily respond to our oppressor by calling a spade a spade—“You took advantage of me, I noticed.” That would be a first response. “You keep taking advantage of me: that was abusive. I don’t like it; it’s not OK with me.” The abuser responds, “It seems like you like it. Why else would you take it and respond as if it’s OK?” We reply, “Why should I let your abuse drag me down to your level, compounding your offense?”

There are nice and not so nice ways to make this point. If Yeshua is our guide, not so nice approaches are acceptable. To treatment from those known as most righteous in Jerusalem, for example, he responded, “Woe to you Scribes and Pharisees, hypocrites all…you are like whited sepulchers, all clean and fair without, and inside filled with dead man’s bones and all corruption…yours is a house of desolation, the home of the lizard and the spider…Serpents, brood of vipers, how can any of you escape damnation?” (Matthew 23:13-50 as insightfully condensed by Zeffirelli.) If this be love, then it is certainly hard love, especially when we note that Yeshua faults the person here, not just the act.

We must also see these cases in social context to see how far the golden rule bids us go. If we are sensible, and have friends, it is unlikely we will place ourselves in the vicinity of serious abusers, or remain there. The social convention of avoiding those who hurt us also must figure into the rule’s understanding. The defense our friends will put up for us against abuse must figure into the rule’s feasibility as well.

Most morally important, these abuse cases do not illustrate the golden rule’s standard application—quite the contrary. Fair-dealing with unfairness and abuse, in particular, calls for special principles of rectification, including punishment, recompense or reform. When used in this context, without alteration, the golden rule poses an alternative to the typical ways these practices are performed. But it remains this sort of special principle. Among its aims, the rule certainly seems bent on goals like rectification, recompense and reform, but indirectly. Arguably the rule has us exemplify the right path—the path the perpetrator might have taken, but did not, thus demonstrating its allure, its superiority. This includes, for observers in the community, the superiority of fairness over retribution (“‘Vengeance is mine,’ sayeth the Lord”). Teaching this lesson is aimed at raising moral consciousness, especially in the perpetrator. As such, it resembles the practice of “bearing unmerited suffering” in the Gandhi-King approach, aimed at piquing moral conscience in those oppressing us (King 1986).

Ideally, a perpetrator will think better of his practice, apologizing for past wrongs and making up for them. At least it might move him to abandon this sort of practice. And if moral processes are not awakened, then at least placing the offender in a morally disadvantageous position within the group will bring pressures to bear on his behavior. Exemplifying fairness in this way also demonstrates putting the person first, holding his status paramount relative to his actions, and our sense of offense.

Exemplifying a moral high road, so as to edify others, does not show passivity or weakness. It is normally communicated in a strong, positive pose. Standing above a vengeful or masochistic temptation uplifts the supposed victim, not making him further trodden down. Indeed, its courageous spirit is key in working its effect, an effect achieved by Gandhi, King and legions of followers under the most morally hostile conditions. Aside from giving abusers pause, high-minded responses bring loud outcries of protest in one’s cause from outside observers, making reform prudent, and practically necessary.

Again, these realities of the rule can only be seen in context, looking into the subtleties of interpersonal relating, communicated emotion, performance before a social audience and the like. The mere logic or golden principle of the thing is silent on them. The same holds for the less feasible sibling rules of the golden rule family, from giving to the poor to turning the other cheek. Trying them out makes a world of difference in understanding what they say. Consider an experiment with trying to “say yes to all who ask,” and substituting “yes” generally, where we routinely say “no” or “maybe.” Doing so may add much less than expected to our load because, first, it makes us more interested in being kinder, which is a rewarding experience, as it turns out. Second, we find that people do not generally ask much, especially when they see you at risk of being taken advantage of for your exceptional good will. Finding simple ways to make the most needy more self-reliant—such as simply encouraging them to be so—also may lighten the helping load. The good it does may be exceptional.

But what of the lingering “doormat problem” for those who are especially dependent and masochistic, all but inviting victimization from abusers? No full mitigation may be possible here. The golden rule, if not exacerbating the problem in practice, at least serves to legitimatize it. Its rationale has been exploited by many, including some Christian churches and clergy who suborn victimization as a lifestyle, especially for wives and mothers. A rule cannot be responsible for those who misuse it, or fail to grasp its purposes. But those sustaining the rule bear a responsibility to clarify its intent. It certainly would be better if the rule itself made its intentions clear or included illustrations of proper use. Currently, it relies on the chance intervention of moral teachers or service organizations—those opposed to, say, domestic violence. Even Yeshua’s disciples complained that the parables, supposedly illustrating tenets like the golden rule, were perplexing. Confucian writing was definitely not geared to rank and file Chinese, much less children learning their moral lessons. This is an intolerable shortfall for an egalitarian socialization tool.

Consider a second corollary (the “copper” rule?) that might address such difficulties. “When misused by those you do unto fairly, do not quietly bear the offense, instead defending and deflecting it with as much understanding as can be summoned.” Notice that defending does not conflict with praying for those who shamelessly abuse us. (The “summoning understanding” proviso is meant to forestall reversion to a more pragmatic alternative such as “by any means necessary.”)

4. Golden Role-Taking and Empathy

“Putting oneself in the other guy’s place” is yet another distinct principle, as is “walking a mile in the other guy’s moccasins” (the Navaho version). The first involves taking a perspective, the second, gaining similar life experience in an ongoing way. Notice that “loving thy neighbor as thyself” requires neither of these operations, presuming that we know how to love ourselves and need only extend that to someone. But of course we may not know how to love ourselves, or how to do so in the right way. The same can be said of identifying, role-taking or learning from another’s type of experience. Given that we may not be loving enough to ourselves, loving our neighbor is best accomplished by referring to prevailing standards. Our own proclivities or values are certainly not the final word. Just as with acting as we’d have others act toward us, loving thy neighbor concerns how we’re supposed to love others, as we should love ourselves. We must consult the community, its ethical conventions or scriptures (including Kantian or Utilitarian scriptures). The last word comes through a critical comparison of these conventions, in experience, with our proclivities and values.

Neither we nor our neighbors likely think it is legitimate, or even kind, to give a thief additional portions of our property. Doing so might well be masochistic, or even egotistical, thinking about our own character development most, thereby exacerbating crime and endangering the community. If we were the thief, we might very well not think that we should be given more of a victim’s property than we stole. Instead, perhaps, we might wish to steal it. Role-taking cannot guide us here. In fact, it could easily lead us astray in various misguided directions. Some would consider it ideal to be unconcerned with property because it puts spiritual concerns over materialism, or it puts charity before just desert. Others could make a case for better balancing the competing principles involved. What good does role-taking do here? And how can it work in a non-relativistic way, where everyone taking the other’s role would come to a similar realization of what to do correctly? The golden rule is not meant to raise such questions.

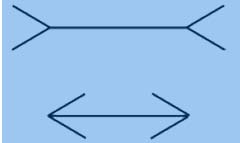

Philosophers deal with these problems by standardizing the way roles are taken, the thinking that goes on in the roles, and so forth. This is what the Rawlsian (1972) veil of ignorance and original position, or the Habermasian (1990) ideal speech rubric, are for. But surely the commonsense role-taking precepts we are talking about here do not even dream of such measures.

Prescriptions for role-taking are likely prominent in many cultures both for the increased psychological perspective they breed and the door they open to better interpersonal interaction. The interpersonal skill involved is perhaps the best explanation of their widespread use and praise, not their power of edification. It is true that if we truly wished to treat others as ourselves, or the way we would want to be treated—if we were them, not ourselves merely placed in their position—role-taking would help. But it is not unusual for primarily psychological or interpersonal tools to aid ethics without being part of ethics itself.

The golden rule’s (emotional) empathy component is as unclear as its role-taking component. To empathize is not really to take another’s perspective. If we truly took that perspective, we would not have to empathize. Being in that perspective would moot an attempt to “feel with” it from another (Noddings 1984, Hoffman 1987). Even if we took the perspective without the associated emotion, our task would then be to conjure up the emotion in the perspective. It would not be to “feel with” anything. We’d be imaginatively in the other’s head and heart, imaginatively feeling their feelings directly. More, in any relevant context, the golden rule urges us to think before we act, then imagine how we would feel, not how the other would. Thus any empathy involved would involve imaginatively “feeling with” myself, at a future time, recipient of another’s similar action. The point here is to supplant the other’s perspective and imagined reaction with our own. This is not how one empathizes. Emotionally, the appropriate orientation toward causing someone possible harm is worry or foreboding. Toward the prospect of doing future good, it’s anticipation of shared joy, perhaps. “Feeling with” or empathizing with others would be prescribed as, “Do unto others in a way that brings them the likely joy you’d happily share.”

Consider more closely what we are supposed to achieve from role-taking and empathy via the golden rule. We get a sense of how others are different from us, and how their situation differs from ours, uniquely tailored to their perspective and feelings on the matter. We then put ourselves in their place with these differences intact, added on to ours, and subtracting from ours where necessary. So we occupy their perspective as them, not us, just as we’d wish them to do toward us when acting. (We wouldn’t want them to treat us as they’d wish to be treated, but as we’d wish to be treated when they took our perspective.)

But this already is a consequence of applying the rule, not a way of applying it. If depicted as a rule’s rationale it would say, “Treat others the way they’d wish or choose.” Seemingly the best way to do that is to ask them how they’d like to be treated. If we can’t ask, then perhaps we are not so much doing unto them in a given way as guessing what they’d like. Putting oneself in their place here would not seem a good idea. Neither would empathy, as opposed to prediction. A good prediction would rest on some track record of what they’ve liked in the past, perhaps acquired from a friend of theirs or one’s own experience with them as a friend.

Without involving others, such role-taking is a unilateral affair, whether well-intended or otherwise. It is often paternalistic, choosing someone’s best interest. The whole process is typically done by oneself, within one’s self-perspective or ego, and it can be spun as one wishes, no checks involved. Fairer and more respectful alternatives would involve not only consulting others on their actual outlooks, but including them in our decision making. “Is it OK with you if….” This negotiation approach is based on a different sort of mutuality, democratizing our choices and actions so that they are multilateral.

5. The Rule of Love: Agape and Unconditionality

To some, the gold in the golden rule is love, the silver component, respect. The love connection is likely made in part by confusing the golden rule with its sibling, love thy neighbor as oneself. Traditionally, ethics could have made the connection semantically—it used the term “self-love” where we now say self-interest. This could render like interest in others as other-love. But this is not really in the spirit of unconditional love.

A more likely path to connecting agape with the golden rule is to consider how we’d ideally wish to be treated by others and most wish we could treat them in turn. Wouldn’t we prefer mutual love to mere respect or toleration? This formulation has appeal though it ignores an important reality. Though we might wish to be treated ideally, we might not wish, or feel able to reciprocate in kind. Keeping mutual expectations a bit less onerous—especially when they apply to strangers and possible enemies—may seem more palatable.

But this is to think in interested and conditional terms. Agapeistic love is disinterested or indifferent, if in a lushly loving way. Its bestowal is not based on anything in particular about the person, but only that they are a person. This sufficiently qualifies them as a beloved. And agape does not come out of us as an interest we have, whether toward people, the good, or anything similar. It comes only out of love, expressing love, or the good luring us with its goodness. Our staking claim or aim toward the good as a personal goal is not involved. The same is true for self-regard. We love ourselves because we are lovable and valuable, like anyone else. The basic or essential self, the soul within us is lovable whether we happen to like and esteem ourselves or not (Outka 1972).

The most obvious ethical implication of agape is that it is not socially discriminating. We do not love people because they are attractive, or hold compatible views, or work in a profession we respect. Are they friend, stranger, or opponent? It doesn’t matter. Most surprising, we do not prefer those close to us or in a special relationship, including parent and child. (Children in agapeistic communities are often raised by the adults as a whole, and in separate quarters from parents, primarily inhabited by peers.)

For moral idealists, agape is most alluring. To love in a non-discriminating way has a certain unblemished perfection to it. Pursuing moral values simply for their value or goodness seems clearly more elevated than pursuing them out of personal preference. Loving someone because they happen to be related to us, or a friend, or could do us a favor is shown up as somewhat cheap and discriminatory by comparison. Seeing ourselves as special is revealed for the trap it is—being stuck with ourselves and our self-preference, a burden to aspiration. What is this condition but the ultimate hold of ego over us, binding us to all our attachments? (In philosophy, intellectual ego is a chief obstacle between us and truth, causing us to believe ourselves because we are ourselves, despite knowing that there are thinkers just as wise or wiser, with just as well-seasoned beliefs. Why be led around by the nose of our particular beliefs and interests just because they blare most loudly in our heads?)

Agape is worth pondering as a fit purveyor of the golden rule. What could be more golden? The golden rule’s raison d’être is indeed focused on countering egocentrism and self-interest. But promoting other-directedness is its remedy, not unconditionality. And concern for others’ interests is key to establishing equality as the rule directs. A plausible rendering of the golden rule, making its implicit concern for interests more visible, would go, “Treat others the way you would be interested in being treated, making adjustments for their differing interests.” In these terms, unconditional loving is a bad fit. Are we really “interested” in being treated as anyone should be treated regardless of the interests we identify with, as someone with a soul but no interests worth catering to? Likely not. This same lack of interest haunts Kant’s notion of respecting personhood unconditionally. The golden-rule problem is not that we’re failing to notice others’ personhood, but what others desire or prefer. We could indeed be faulted for ignoring others as persons, treating them like potted plants in the room, but that would only result if they craved our notice, attention, or participation. Typically, it would be fine with others if we just went about our business while not getting into theirs.

To be told that we should not be interested, or to be dealt with by people who will not relate to us in interested terms, basically undermines the golden rule’s effectiveness. As with empathy, we cannot be uninterested on demand, or even after practicing to do so long and hard. And if we do not have our self-identified interests taken seriously, we feel that we are not taken seriously, whether we ideally should or not. Ethics is not only about ideals, nor in fact, primarily about ideals. If interest were not key to ours and theirs, the golden rule would be moot. With unconditional love, reciprocity is beside the point, along with its social reciprocity conventions. Taking any perspective is the same as taking any other. In fact, taking one’s own perspective in particular is discriminatory, even when expressing generosity to others. So is taking the perspective of any particular other. Happening to be ourselves, or a particular other, and taking that as a basis for favoritism, seems a condition—a failure in unconditionality. I could have been anyone, any of them, as they could have been me. So why do I take who I am or who they are so seriously? Unlike every other ethic, agape provides no basis for according ourselves special first-person discretion or privacy. The self-other gap is transcended. It’s not even clear how the typical moral division of labor is justified in agapeistic terms. In principle, when we raise our spoon filled with breakfast cereal at the morning table, the matter of whose mouth it goes into is in question.

Some agapeists would not go this far, instead keeping our self-identification intact. But there is good reason to go farther. Gandhi and King have forwarded a view of loving non-violence that doesn’t even allow self-defense because it involves the preference of self over other. Gandhi characterizes personal integrity as “living life as an open book” since one’s life is not one’s own, but merely one example of everyone’s life. And of course there are the turn the other cheek precepts of Yeshua, which push in this direction.

In any event, ethics is not built for such concerns. It is a system designed to handle conflicts of interest, the direction of interests toward values and, perhaps, the upgrading and transformation of interests into aspirations. Agape would function, within the golden rule, as something more like a song or affirmation for the self-transformations achieved. It is the very admirable diminution or lack of self-interest, in agapeistic love and in social discrimination that puts an agapeistic golden rule out of reach. Its double dose of moral purity and perfection puts it doubly out of reach. We arguably cannot be perfect as our Father in Heaven is perfect (or complete). We also cannot realistically strive toward it, and most likely should not. Religiously, to do so seems a sacrilege—pretending to the level of understanding, wisdom and “lovability” of infinite godhood. Secularly, its beautiful intentions have unwanted consequences. Aside from the impersonality of childrearing, anyone who has borne the impersonal treatment or unearnable support from someone bent on “treating everyone the same” can testify to its alienating quality. We wish to be loved for us, for our self-identity and the values we identify with. When we are not loved this way, we do not feel loved at all—not loved for who we are. Ethically, we expect to be unique, or at least special in others’ eyes when we’ve created a special history. We are entitled to it. We build rights around it. And we feel callously disregarded when a loving gaze shows no special glint of recognition as it surveys us among a group of others. This is less egoism than a sense of distinctness and uniqueness within the additional expectations of realized relationship.

Putting the matter more generally, human motivational systems come individually packaged. They are hard-wired to harboring and pursuing interest. And a valid ethics is designed to serve human nature, even as it strives to improve it. If we can transcend human nature, then we need a different system of values, or perhaps nothing like an ethical system. We have risen beyond good and evil, indifferent to harm or death. We are born, and remain psychologically individualized throughout life, not possessed of a hive mind in which we directly share our choice-making and experiences. We are each unquestionably possessed of this natural, immutable division of moral labors, which gives us direct and reliable control only of our own self. Hence we are held responsible only for our own actions, expected to do for ourselves, provided special standing to plead our own case of mistreatment, and accorded great discretion in our own individual sphere, to do as we like. When agapeistic morality puts our very nature on the spot, bidding us to recast basic motivations to suit—when it sets us in lifetime struggle against ourselves—it fails to acknowledge morality as our tool, not primarily our taskmaster. These considerations provide the needed boundary line to situate the golden rule this side of a feasibility-idealism divide. The golden rule is indeed designed for human nature as it is and for egos with interests, trying to be better to each other.

Admittedly the question of agape’s realism may not be decidable given the distinctly spiritual nature of the agapeist view. Christian agape, like Buddhist indifference and non-attachment, is said to be inexpressible in words. It can only be understood correctly through direct insight and experience. Granted, adherents of these ideals place the achievement of spiritual insight out of common hands. Only a few of the most gifted or fortunate adherents achieve it in a lifetime. As such, spiritual love cannot be the currency of the golden rule as we know it, negotiating mutual equality for the vast majority of humanity in everyday life.

What agapeists may be onto is that the golden rule has a dual nature. At a common level, it is a principle of ethical reciprocity. But for those who use its ethic to rise above good and evil in a mundane sense, the golden rule is a wisdom principle. It marks the transcendence of interested and egoistic perspectives. It points toward its sibling of loving thy neighbor as thyself because thy neighbor is us in some deeper sense, accessible by deeper, less egoistic love.

6. Philosophical Slight

With the foregoing array of “considered judgments” in hand, we are at last positioned to begin distinct philosophizing on the golden rule. That project starts by consulting philosophy’s reconstitution of traditional commonsense ethics—an added context for golden rule interpretation. Philosophical treatments of the golden rule itself come next, with an evaluation of their alternative top-down approach.

One reason philosophers emphasize the juxtaposition of ethics and human nature stems from the moralistic, if not masochistic cast of ethical traditions. Nietzsche’s depiction of “slave morality” in Christianity is a case in point (Nietzsche 1955). Moral suspicion of medieval sharia laws in Islam is another. Because the golden rule is prominent in these suspect traditions, philosophy’s concerns are directly relevant. Self-interest has been rehabilitated in philosophical ethics, along with happiness as satisfying interests, not necessarily matching ethereal ideals or god’s will. Ethics in general has also been feminized to encompass self-caring as well, a kind of third-person empathy and supportive aid to oneself (Gilligan 1982). Here, a clarified golden rule notion can fit well.

The role of ethics as our tool and invention has been promoted over traditional views of its partial “imposition” by Nature, Reason or natural law. As Aristotelians note, the good for anything depends on its type or species: ethics is for “creatures like us,” not for the saintly beings we fall short of by nature. Ironically, this is a line preached by Yeshua continually in upholding spirituality, or the heart of “the law,” over the legal letter. “The law (Sabbath) was made for man, not man for the law” (Mark 2:27-28). On this view, ethics should not fate its users to a life of hypocrisy and of not feeling good enough.

For philosophers, however, even a clarified or unbiased depiction of the golden rule cannot overcome its shortfalls in specificity and decisiveness. Ply the rule in the handling of complex and nuanced problems of complex institutions and it is at sea. We cannot imagine how to begin its application. Exercise it within networks of social roles and practices and the rule seems utterly simplistic. (This said, the irony should not be lost here of critics setting the rule up to fail by over-generalizing its intended scope and standards for success.)

Maximum generalization is the dominant philosophical approach to the rule. And in this form there is no question that its shortfalls are many. The rule seems hopeless for dealing with highly layered institutions working through different hierarchies of status and authority. Yet the rule has been posed by philosophers as the ultimate grounding principle of the major moral-philosophic traditions—of a Kantian-like categorical imperative, and a Utilitarian prototype. It has been claimed, in fact, that the rule’s logic was designed for this generalization across cases, situations, and all varieties of societies (Singer 1963, Hare 1975).

These interpretations are highly unlikely judging from the rule’s strikingly ethnic origin and design function, as a bottom-up approach makes clear. As noted, this is a tribal or clan rule, cast in highly traditional societies and nurtured there. There is no evidence that it was ever originally intended to define human obligations and problem solving within the human community writ large, or in complex institutional settings in particular. And so shortfalls found in taking it out of its cultural context, ignoring the range of practices and roles that it presumed and placing it in types of social context that didn’t exist when it was born and raised, should be no surprise. The golden rule’s format invites first-person use, addressing interacting with one or two others. Since the rule’s chief role in society seemingly became the instruction of children, alerting them to impacts on others, its shortfalls in complex problem solving seem irrelevant. Likewise Kant’s categorical imperative falls short in deciding who does the laundry in a marriage, especially once emotions have become too frayed and raw to import formulae into the discussion.

In small-group interactions what would normally be tolerated as diversity of opinion and practice can be legitimately identified as problematic instead. Being like-minded, most often group members have expressed commitment to common beliefs, values, and responsibilities. But more important, the rule is vastly more detailed and institutionalized here than it seems because of its guidance by established practices, conventions, and understandings. One’s reputation as a group member depends on holding up one’s end of approved norms, including the golden rule, lest one be considered unreliable and untrustworthy. In such contexts, one can imagine a corollary to the golden rule that would make sense: “Show not consideration to him who receiveth without thought of rendering back.” This seems contrary to the golden rule due to our mis-identification of the rule with sibling rationales of forgiveness and unconditional love—letting others abuse and take advantage of us. Moreover, this corollary may not sanction an actual comeuppance of offenders, in violation of golden-rule spirit, functioning instead as a threat or gentle reminder of joint expectations. Such expectations are a commonly accepted part of “doing unto each other” in a neighborhood or co-worker context where conventions of fairness, just desert and doing one’s share go with the territory.

Marcus Singer, in standard philosophical style, portrays the golden rule as a principle, not a rule. This is because it does not direct a specific type of action that can be morally evaluated in itself. Instead, it offers a rationale for generating such rules. Singer is a kind of “father of generalization” in ethics, holding that the rationale for action of any individual in types of situations holds for any other in like situations (Singer 1955). Singer argues further that the golden rule is a procedural principle, directing us through a process—perspective-taking, either real or imaginary, for example—to generate morally salient action directives.

Singer’s is the “ideal” or top-down theoretical approach, as contrasted with our building from common sense. It starts from an abstracted logical ideal, elaborating a theory around it by tracing its logical implications. The approach is notably uninfluenced by the golden rule’s 2,500-year history. Of course, philosophy need not start from the beginning when addressing a concept, nor be confined by an original intent or design or its cultural development. The argument must be that the rule’s inner logic is the only active ingredient. The rest is chaff or flourish or unnecessary additives.

In principled form, Singer’s golden “rule” serves also as a standard for judging rules and directives for actions that impact us. The rationale of a contemplated action must adhere to the rubric of a self-other swap to pass ethical muster in the way that, say, our maxim of intentions must pass the universalization test of Kant’s categorical imperative.

Singer’s view has merit, especially in emphasizing procedure. Still, the distinction between principles and rules may not be as sharp as claimed. General rules (rules of legal evidence, for example) also can be used to derive more specific rules based on their logics; principles need not be consulted. For example: Do nice things; do nice things, anonymously for close neighbors in distress; leave breakfast bakery goods at the doorstep of a next door neighbor the morning after they attend a close relative’s funeral; leave donuts and muffins on your next-door neighbor’s welcome mat, rewrapped in a white bag with a sedate silvery bow; leave bagels with chive cheese if they are Jewish, sfogliatelle if they are Italian. The most general rule here, “Do nice things,” targets a type of action that can be morally evaluated as right or wrong, but still needs a procedure for determining specific actions that fall in that category, especially at the borders. Consulting community reciprocity standards or conventions might be one. Thus, do nice things by consulting community standards would proceduralize a rule to generate more specific action directives. Again, no consultation with principles is needed.

A great asset of Singer’s view is its accent on the practical within the prescriptive essence of the rule. Most philosophical principles of ethics are explanatory, providing an ultimate ground for understanding prescriptions. These also can be used to justify moral rationales. But they are prescriptive only in the logical sense of distinguishing “shoulds” from “woulds” or “ares,” not in the directive sense: do X in way Y. Singer’s take exposes the how-to or know-how of the golden rule. From here, the rule’s interpersonal role in communication and explanation to others is readily derived, especially during socialization. The rule is not portrayed, then, as a stationary intellectual object notched on the wall of an inquiring mind. It takes on a life for the moral community living its life.

R. M. Hare basically places the golden rule in the company of the Kantian and Utilitarian theories, or his own “universal prescriptivism.” That is, he interprets it as a universal grounding principle, a fundamental explanatory principle, for reciprocal respect. This conceives ethical theory on the model of scientific theory, especially a physical theory with its laws of nature. A highlighted purpose of Hare’s account is to bring theoretical clarity and rational backing to what he sees as piecemeal intuitionist and situation-based ethics. These latter approaches typically use examples of ethical judgments that the author considers cogent, leaving the reader to agree or disagree on their intuitive appeal. Yet, Hare renders the crucial “as you would have them do” directive of the golden rule as both what we would “wish” them to do to us (before doing it) and what we are “glad” they did toward us (afterwards). He holds that the golden rule’s logic remains constant, despite these word and tense changes. Notably, no grounding is offered for this claim, that is, for the switch from “would have” to “wish” or “glad,” as if these were obviously the same ideas. Hare apparently feels that they are.

But wishes, choices, preferences, and feelings of gladness certainly do not seem the same thing. Choices can come from wishes, though they rarely do, and one feels glad about the results of choices, if not wishes, generally. Wishing typically has higher goals and lower expectations than wanting; it’s bigger on imagination, weaker on real-world motivation. Choosing is usually endorsing and expressing a want, whether or not it expresses a preference among desired objects. None of these may augur a glad feeling, though one would hope they do, hoping also that one’s choices turn out well and that their consequences please us, which they often, sadly, do not.

7. Sticking Points

The greatest help that the golden rule’s common sense might seek from philosophy is a conceptual analysis of the “as you would have” notion (Matthew 7:12). This is a tricky phrase. Rendering the rule’s meaning in ways that collapse wish and want obscures important differences, as just noted. An alternative rendering is how you prefer they treat you, singling out the want that has highest priority for you in this peculiar context of mutual reciprocity, not necessarily in general. Further alternatives are treatments we would accept, or acquiesce in or consent to as opposed to actively and ideally choose or choose as most feasible. These are four quite different options. Or would we have others do unto us as we believe or expect they should treat us based on our or their value commitments and sense of entitlement? Are the expectations of just the two or three people involved to count, or count more than the so-called legitimate expectations of the community? Such interpretations can ride the rule of gold in quite different directions, led by individual tastes, group norms, or transcendent religious or philosophical principles. And we might see some of these as unfair or otherwise illegitimate.

In such contexts, philosophical analysis usually answers questions, clarifying differences in concepts, meanings and their implications. Hare’s account may very likely compound them. I may choose, wish or want that you would treat me with great kindness and generosity, showing me an unselfish plume of altruism. But if I then were legitimately expected to reciprocate out of consistency, I might consent, agree, or acquiesce only in mutual respect or minimal fairness, at most. This is all I’d willingly render to others, certainly, if they did not even render respect and fairness back. From this consent logic we move toward Kantian or social contract versions of mutual respect and a sort of rational expectation that can be widely generalized. But we move very far from the many spirits of the golden rule, wishful and ideal. We move from expanding self-regard other-directedly to hedging our bets, which makes a great moral difference.

Similar problems of interpretation arise for the “as” in the related principle, love thy neighbor as thyself (Matthew 22:39). In ethical philosophy, as noted, “self-love” has been identified traditionally with self-interest or self-preference. In psychology, by contrast, it has been identified with self-esteem and locus of control. These are quite different orientations, setting different generalizable expectations in oneself and in others. It is not clear that generalizing self-love captures appropriate other-love. Common opinion has it that love of others should be more disinterested and charitable than love of self, or self-interest. We feel that it is fine to be hard on ourselves on occasion, but more rarely hard on others. We are our own business, but they are not. They are their own business. It seems morally appropriate to sacrifice our own interests but not those of others even when they are willing. We should not urge or perhaps even ask for such sacrifice, instead taking burdens on ourselves. Joys can be shared, but not burdens quite as much.

We are to be nicer, fairer, and more respectful of others than of ourselves. In fact, ethics is about treating others well, and doing so directly. To treat ourselves ethically is a kind of metaphor since only one person is involved in the exchange, and the exchange can only be indirect. We are not held blameworthy for running our self-esteem down when we think we deserve it, but we are to esteem others even when they have not earned it.

Kant, by contrast, poses equal respect for self and other, with little distinction. We are to treat humanity, whether in ourselves or others, as an end in itself and of infinite value. He also poses second-rung duties to self and other toward the pursuit of happiness—a rational, and so self-expressively autonomous, approach to goods. This might be thought to raise a serious question for altruism—the benefiting of others at our expense. Given duties to self and duties to others, even pertaining to the pursuit of happiness, it is not clear what the grounds would be for preferring others to oneself. Yet one would be honored as generous, the other selfish. And this is so even if we have the perfect right to act autonomously in a generous way, therefore not using ourselves as a mere means to others’ happiness. Throughout his ethical works and essays on religion, however, Kant speaks of philanthropy, kindness, and generosity in praising terms without giving like credit to self-interest.

Some would criticize this penchant for treating others better than ourselves as a Christian bias against self-interest, too often cast as selfishness. But it seems in line with the very purpose of ethics, which is how to interact with others, not oneself. In any case, Yeshua’s conception of love was as radically different from the traditional notion of his time as it is from our current common sense.

Most of the population originally introduced to the golden-rule family of rules was uneducated and highly superstitious, even as most may be today. The message greets most of us in childhood. Its Christian trappings are growing most, at present, in politically oppressive third-world oligarchies where (sophisticated) education is hard to come by. Likely the rule was designed for such audiences. It was designed to serve them, both as an uplifting inspiration and form of edification, raising their moral consciousness. Yet in these circumstances, the real possibility exists of conceiving the rule as, “if you’re willing to take it (bad treatment) you can blithely dish it out.” Vengeance is also a well-respected principle tied to lex talionis. A related misinterpretation puts us in another’s position with our particular interests intact, asking ourselves what we in particular would prefer. “If I were you, do you know what I would do in that situation?” Decades of research suggest that these are the interpretations most of us develop spontaneously as we are trying to figure out the golden rule and the place of its rationale in moral reasoning over childhood and adolescence (Kohlberg 1982).

We can scoff at the obtuseness of these renderings, but even sophisticates may know less about others’ perspectives than they typically assume. Many have great difficulty imagining strangers’ perspectives from the inside, instead making unwarranted assumptions biased to their own preference (Selman 1980). Otherwise well-educated and experienced folk can be remarkably unskilled at such perspective-taking tasks. Indeed, feminist psychologists demonstrate this inadequacy empirically in psychological males, especially where it involves empathy or spontaneously “feeling with” others (Hoffman 1987). (In class, when I’ve fully distinguished empathy from cognitive role-taking, many of my brilliant male students confess, “I don’t think I’ve ever done or experienced that.”) Recent empathy programs designed to stop dangerous bullying in American public schools have acknowledged the absence of empathy in many children. Schools have resorted to bringing babies into the classroom to invoke hopefully deep-seated instincts for emotional identification (or “fellow-feeling”) with other members of our human species (Kohlberg 1969).

How we properly balance empathy with cognitive role-taking is a greater sticking point, plaguing psychological females and feminist authors as much as the rest. (The balance, again, is between feeling with, and imaginatively structuring the person’s conceptual space and point of view.) Such integration problems make it unclear how to follow the golden rules properly in most circumstances. And that is quite a drawback for a moral guideline, if the rule is an action guideline. We might then be advised to seek a different approach such as an interpersonal form of participatory democracy, as was previously noted.

Again, these are precisely the sorts of uncertainties and questions that philosophical analysis and theory are supposed to help answer by moving from common sense to uncommonly good sense. To a certain extent, Kantian and Utilitarian theory does just that, better defining the role of careful thought and estimation (reason), moral personality (the components of “self” and “other” that most count) and how these ground equal consideration. But at some point they move to considerations that serve distinctly theoretical and intellectual purposes, removed from everyday thinking and choice. Kant’s “noumenal self” (composed of reason and free will) and the Bentham-Mill “Util-carrier” (an experience processor for pleasure and pain) are not the selves or others we care about when golden-ruling. Their morally relevant qualities cannot compete in importance with our other personal features. Indeed, we cannot identify with, much less respect, these one-sided, disembodied essences enough to overrule the array of motivations and personal qualities that match our sense of moral character and concern.

The theoretical rationality of maximizing good, even with prudence built in, is obviously extremist and over-generalized. Research in more practical-minded economics shows this clearly in coming up with concepts like “satisficing” (seeking enough goods in certain categories of those goods most important to us). But as philosophers say, the logics of good and reason in Utilitarianism cannot help but extend to maximization: it is simply irrational, all things considered, to pursue less of a good thing when one can acquire more good at little effort. If so, then perhaps all the worse for generalization and consistency, which will be avoided by being reasonable and personable. Many of us wish theory to upgrade common sense, not throw it out the window with the golden rationale in tow.

8. Ethical Reductionism

Both present and likely future philosophical accounts may be unhelpful in bringing clarity to the golden rule in its own terms, rather distorting it through overgeneralization. Still, the crafting of general theory in ethics is an important project. It exposes ever deeper and broader logics underlying our common rationales, the golden rule being one. (It is important for some to review these fundamental issues for treating the golden rule philosophically.)

Relative to a commonsense understanding of the golden rule, it is a heady conceptual experience to see this simple rule of thumb universalized, inflated to epic proportions that encompass the entire blueprint for ethical virtue, reasoning, and behavior for humankind. Such is the case with Kantian and Utilitarian super-principles. To increase the complexity of the rule’s implications while retaining its simplicity, transformed to theoretical elegance, is no mean trick. Paul’s revelation that the golden rule is catholic achieved a like headiness in faith. Now to see that faith reinforced by the most rigorous standards of secular reasoning is quite an affirmation. It can also be recruited as a powerful ally in fending off secular criticism.

Often we fail to recognize that extreme reductionism is the centerpiece of the mainstream general theory project. The whole point is to render the seemingly diverse logics of even conflicting moral concepts and phenomena into a single one, or perhaps two. It is very surprising to find how far a rationale can be extended to cover types of cases beyond its seeming ken—to see how much the virtues of golden kindness or respect, for example, can be recast as mere components of a choice process. Character traits, as states of being, appear radically different from processes of deliberation, problem solving, and behavior after all. But the most salient psychological features of virtuous traits fade into the amoral background once the principled source of their moral relevance and legitimacy is redefined. Golden rule compassion becomes virtuous because it allows us to better consider an “other” as a “self,” not necessarily in itself, its expression, or in the good it does.

The project of general theory also exposes how the implications of the golden rule’s basic structure fall short when fully extended. Universalization reveals how the basically sound rationale of the golden rule can go unexpectedly awry at full tilt. This shows a hidden chink in its armor. But reducing principles also can overcome the skepticism of those who see the rule as a narrow slogan from the start. The rule can do much more than expected, it turns out, when its far-reaching implications are made explicit. And by exposing the rule’s shortfall and flaws, we can identify the precise sorts of added components or remedies needed to complement it, thus setting it back on the right path.